We’ve previously found unexciting differences of under 1% between x16 and x8 PCIe 3.0 arrangements, primarily relying on GTX 1080 Ti GPUs for the testing. There were two things we wanted to overhaul on that test: (1) Increase the count of GPUs to at least two, thereby placing greater strain on the PCIe bus (x16/x16 vs. x8/x8), and (2) use more powerful GPUs.

Fortunately, YouTube channel BitsBeTrippin agreed to loan GamersNexus its Titan V, bringing us up to a total count of two cards. We’ll be able to leverage these for determining bandwidth limitations in supported applications; unfortunately, as expected, most applications (particularly games) do not support 2x Titan Vs. The nature of being a scientific/compute card is that SLI must go away, and instead be replaced with NVLink. We must therefore rely on explicit multi-GPU via DirectX 12. This means that Ashes of the Singularity will support our test, and it also leaves us with a list of games that might support testing: Hitman, Deus Ex: Mankind Divided, Total War: Warhammer, Civilization VI, and Rise of the Tomb Raider. None of these games saw both Titan V cards, though, and so we only really have Ashes to go off of. That means this test isn’t representative of the whole, but it will give us a good baseline for analysis. Something like GPUPI may further provide a dual-GPU test application.

We also can’t test NVLink, as we don’t have one of the $600 bridges – but our work can be done without a bridge, thanks to explicit multi-GPU in DirectX 12.

It’s time to revisit PCIe bandwidth testing. We’re looking at the point at which a GPU can exceed the bandwidth limitations of the PCIe Gen3 slot, particularly when in x8 mode. This comparison includes dual Titan V testing in x8 and x16 configurations, pushing against the roughly 1GB/s-per-lane limit of the PCIe slots.

Testing PCIe x8 vs. x16 lane arrangements can be done a few ways, including: (1) Tape off the physical pins on the PCIe foot of the GPU, thereby forcing x8 mode; (2) switch motherboard PCIe generation to Gen2 for half the bandwidth, but potentially introduce variables; (3) use a motherboard with slots which are physically wired for x8 or x16.
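Whichever method is used, it helps to confirm the link width each card actually negotiated before benchmarking. The sketch below is one way to do that, not necessarily how we verified it for this test: it assumes an nVidia driver install with `nvidia-smi` on the PATH and queries its `pcie.link.gen.current` and `pcie.link.width.current` fields. The function names are our own, the sample text in the usage section is illustrative rather than measured, and the parser assumes every field comes back as a plain integer.

```python
import csv
import io
import subprocess

# Query fields understood by nvidia-smi's --query-gpu option.
QUERY_FIELDS = "index,name,pcie.link.gen.current,pcie.link.width.current"


def parse_link_report(csv_text):
    """Parse `nvidia-smi --format=csv,noheader` output into a list of dicts."""
    rows = []
    for record in csv.reader(io.StringIO(csv_text), skipinitialspace=True):
        if len(record) != 4:
            continue  # skip blank or malformed lines
        index, name, gen, width = (field.strip() for field in record)
        rows.append(
            {"index": int(index), "name": name, "gen": int(gen), "width": int(width)}
        )
    return rows


def query_links():
    """Run nvidia-smi and return the parsed link report (needs an nVidia GPU)."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_link_report(result.stdout)


if __name__ == "__main__":
    # Illustrative sample text only; call query_links() on a real system.
    sample = "0, TITAN V, 3, 16\n1, TITAN V, 3, 8\n"
    for gpu in parse_link_report(sample):
        print(f"GPU {gpu['index']} ({gpu['name']}): Gen{gpu['gen']} x{gpu['width']}")
```

On some systems the reported link generation can drop at idle because of power management, so it is safer to read these values while the cards are under load.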

Our test platform includes 2x Titan V cards, the EVGA X299 Dark motherboard, an Intel i9-7980XE, and 32GB 3866MHz GSkill Trident Z Black. We were using the Titan Vs under air for these tests. When overclocked, they were set to a stable OC that was achievable on both cards -- +150 core and HBM2.

Ashes is being run at 4K with completely maxed settings. We set them to “Crazy,” then manually increment all options to the highest point, including 8xMSAA. This was required to ensure adequate GPU work, thereby reducing the potential for a CPU bottleneck.

PCIe 3.0 was introduced in 2010 and brought with it an encoding scheme upgrade (8b/10b to 128b/130b) that reduced bandwidth overhead from 20% to under 2%, significantly improving transfer rates. We’ve had trouble saturating theoretical maximum PCIe bandwidth recently, with even single 1080 Ti cards not showing much more than a 1% difference – largely within error margins. This test should find the limits.
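For reference, the theoretical numbers fall out of the per-lane transfer rate and the encoding scheme. The sketch below works through that arithmetic using the published line rates (5GT/s for Gen2, 8GT/s for Gen3, 16GT/s for Gen4) and encodings (8b/10b for Gen2, 128b/130b for Gen3 and Gen4). The table and function names are our own, and the results are per-direction ceilings before any protocol overhead, so real-world throughput will land lower.

```python
# Theoretical PCIe bandwidth per direction, derived from line rate and encoding.
GENERATIONS = {
    # generation: (giga-transfers per second per lane, payload bits, total bits)
    2: (5.0, 8, 10),      # 8b/10b encoding: 20% overhead
    3: (8.0, 128, 130),   # 128b/130b encoding: roughly 1.5% overhead
    4: (16.0, 128, 130),
}


def lane_bandwidth_gbs(gen):
    """Usable GB/s for one lane, one direction, before protocol overhead."""
    gt_per_s, payload_bits, total_bits = GENERATIONS[gen]
    return gt_per_s * payload_bits / total_bits / 8  # divide by 8: bits -> bytes


def link_bandwidth_gbs(gen, lanes):
    """Usable GB/s for a whole link of the given width."""
    return lane_bandwidth_gbs(gen) * lanes


if __name__ == "__main__":
    for gen, lanes in [(2, 16), (3, 8), (3, 16), (4, 16)]:
        print(f"Gen{gen} x{lanes}: {link_bandwidth_gbs(gen, lanes):.2f} GB/s")
```

This also shows why dropping a Gen3 x16 slot to Gen2 only approximates x8: Gen2 x16 works out to 8GB/s, while Gen3 x8 is closer to 7.88GB/s.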

Ashes of the Singularity: Single vs. “SLI” Titan Vs

“SLI” in scare-quotes, here.

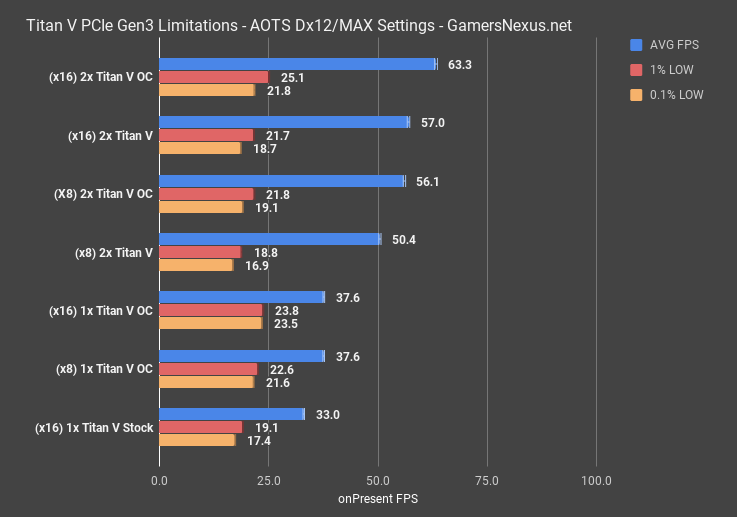

Starting first with our dual versus single Titan V benchmark, we overclocked the cards equivalently in each setup, then tested with 16 PCIe lanes available. The cards tested at 63.3FPS AVG for the dual configuration, with lows at 25 and 22FPS for 1% and 0.1% low frametimes. The single Titan V configuration operated at 37.6FPS AVG, immediately establishing that we are seeing large, noteworthy gains. Unfortunately, Ashes is where they stop, as we were unable to get other games to detect both Titan Vs.

The stock cards compare at 33FPS AVG versus 57FPS AVG, which is a noteworthy gain of 72%.

Either way, we’re already seeing 68.4% gains from adding a second card, which is significant; no, the Titan V is not a “gaming card,” or even a gaming architecture, but it is scaling in games.
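For anyone who wants to check the math, the scaling figures come from simple ratios of the average framerates. The sketch below uses hypothetical helper names of our own. It computes the percentage gain from adding the second card, plus a scaling efficiency figure (the share of ideal 2x scaling achieved), which is our addition and not a number from the charts.

```python
def percent_gain(baseline_fps, new_fps):
    """Percentage improvement of new_fps over baseline_fps."""
    return (new_fps / baseline_fps - 1.0) * 100.0


def scaling_efficiency(single_fps, multi_fps, gpu_count=2):
    """Fraction of ideal linear scaling achieved by the multi-GPU result."""
    return multi_fps / (single_fps * gpu_count)


if __name__ == "__main__":
    # Overclocked averages from the chart: 37.6FPS single, 63.3FPS dual.
    print(f"OC gain: {percent_gain(37.6, 63.3):.1f}%")
    print(f"OC scaling efficiency: {scaling_efficiency(37.6, 63.3):.0%}")
    # Stock averages as rounded in the text: 33FPS single, 57FPS dual.
    print(f"Stock gain: {percent_gain(33, 57):.1f}%")
```

Note that the stock result is computed here from the rounded 33 and 57FPS averages, so it can differ by a fraction of a percent from a figure calculated on unrounded data.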

PCIe 3.0 x8/x8 vs. x16/x16 on Titan Vs

Moving on to the PCIe lane bandwidth testing, our charts now focus on x16 and x8 configurations, both overclocked to push the limits of the PCIe bus.

We’re measuring 63FPS AVG for two cards with an x16 interface, and measuring 56FPS AVG for two cards with an x8 interface. That’s a performance improvement of about 12-13% with PCIe Gen3 x16, and is significant in illustrating that we’re nearing the end of Gen 3’s bandwidth, which is 15.75GB/s for 16 lanes. PCIe Gen 4 will push about 2GB/s per lane, and will resolve any potential issues, but nVidia is also using its $600 NVLink bridge to address this.
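The same x16 versus x8 result can be expressed a few ways, and the framing changes the number. As a rough sketch using the rounded 63 and 56FPS averages (helper names are our own), the code below shows the uplift from x8 to x16, the deficit going from x16 to x8, and the difference in average frame time.

```python
def frame_time_ms(fps):
    """Average time per frame, in milliseconds, for a given framerate."""
    return 1000.0 / fps


def uplift_percent(slower_fps, faster_fps):
    """How much faster the faster result is, relative to the slower one."""
    return (faster_fps / slower_fps - 1.0) * 100.0


def deficit_percent(slower_fps, faster_fps):
    """How much slower the slower result is, relative to the faster one."""
    return (1.0 - slower_fps / faster_fps) * 100.0


if __name__ == "__main__":
    x8_fps, x16_fps = 56.0, 63.0  # rounded averages from the text
    print(f"x16 over x8: +{uplift_percent(x8_fps, x16_fps):.1f}%")
    print(f"x8 under x16: -{deficit_percent(x8_fps, x16_fps):.1f}%")
    delta = frame_time_ms(x8_fps) - frame_time_ms(x16_fps)
    print(f"Frame time difference: {delta:.2f} ms per frame")
```

With these inputs, the uplift is 12.5% while the deficit is closer to 11%, so it matters which configuration is treated as the baseline when quoting the gap.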

As for single cards, we did not notice any appreciable difference between single Titan V configurations in x8 versus single configurations in x16. It appears that we start encountering the most issues when transacting across two cards.

The question becomes whether or not you’d notice this in other applications. Not every application cares about PCIe bandwidth; mining is a great, modern example, where GPU miners use x1 slots for all their work. It’s possible that applications more tailored for the Titan V would not run into this issue, as the two cards may not need to transact between each other as much. If you use these types of devices for their intended, scientific purposes, please let us know and we can do more bandwidth testing – let us know what applications you use, too, as we aren’t experts in that area.

Conclusion: Finally Finding the Limits, but --

This isn’t representative of the whole. We’ve tested one game, here, and that’s about the limit of what is even compatible with 2x Titan Vs. Production software, like Blender or Premiere, won’t stress the PCIe interface in the same way – the cards don’t need to talk to each other, in these scenarios, and can operate independently on a tile-by-tile or frame-by-frame basis. Gaming puts more load on the PCIe bus as the cards transact more data to create each frame. These devices, as stated before, aren’t meant for gaming, so that’s largely a non-issue. They also aren’t really compatible in 2-way configurations, so that further eliminates the realism of this test.

What we are left with, however, is a somewhat strong case for waning PCIe bandwidth sufficiency as we move toward the next generation – likely named something other than Volta, but built atop it. SLI or HB SLI bridges may still be required on future nVidia designs, as it’s possible that a 1080 Ti successor could encounter this same issue, and would need an additional bridge to transact without limitations.

Editorial, Testing: Steve Burke

Video: Andrew Coleman