GDC 2016 marks further advancement in game graphics technology, including a somewhat uniform platform update across the three major game engines. That'd be CryEngine (now updated to version V), Unreal Engine, and Unity, of course, all synchronously pushing improved game fidelity. We were able to speak with nVidia to get in-depth and hands-on with some of the industry's newest gains in video game graphics, particularly involving voxel-accelerated ambient occlusion, frustum tracing, and volumetric lighting. Anyone who's gained from our graphics optimization guides for Black Ops III, The Witcher, and GTA V should hopefully enjoy new game graphics knowledge from this post.

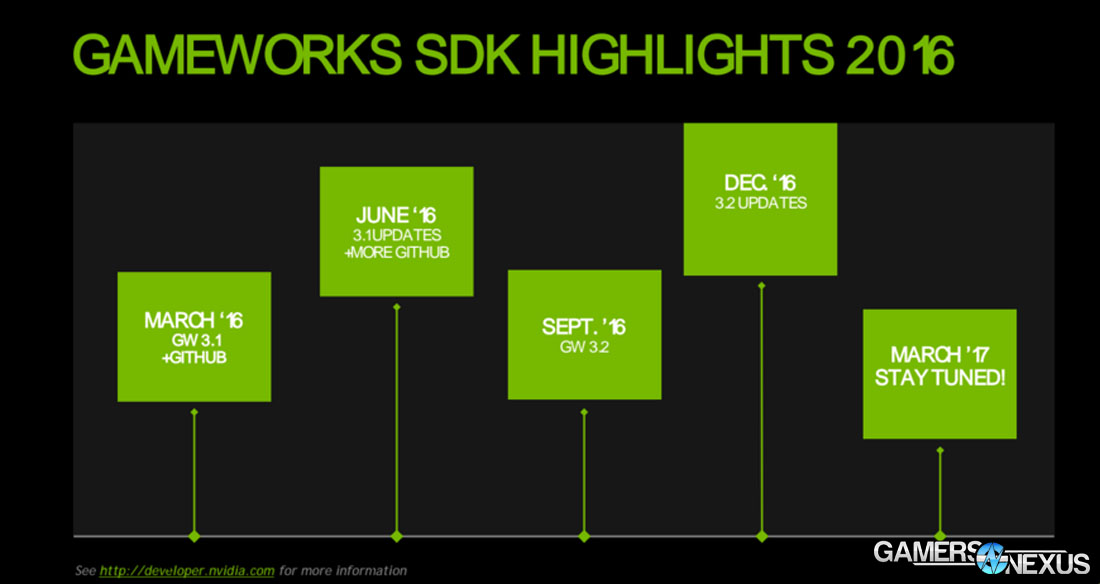

The major updates come down the pipe through nVidia's GameWorks SDK version 3.1 update, which is being pushed to developers and engines in the immediate future. NVidia's GameWorks team is announcing five new technologies at GDC:

Volumetric Lighting algorithm update

Voxel-Accelerated Ambient Occlusion (VXAO)

High-Fidelity Frustum-Traced Shadows (HFTS)

Flow (combustible fluid, fire, smoke, dynamic grid simulator, and rendering in Dx11/12)

GPU Rigid Body tech

This article introduces the new technologies and explains how, at a low level, VXAO (voxel-accelerated ambient occlusion), HFTS (high-fidelity frustum-traced shadows), volumetric lighting, Flow (CFD), and rigid bodies work.

Readers interested in this technology may also find AMD's HDR display demo a worthy look.

Before digging in, our thanks to nVidia's Rev Lebaredian for his patient, engineering-level explanation of these technologies.

Volumetric Lighting Algorithm Update

Volumetric Lighting was featured most prominently in Fallout 4 with the inclusion of crepuscular rays (“godrays”). We previously did some benchmarking on godrays that ran debug filters to draw trace lines, which revealed the path of light being cast from various sources – a good look to understand the basics.

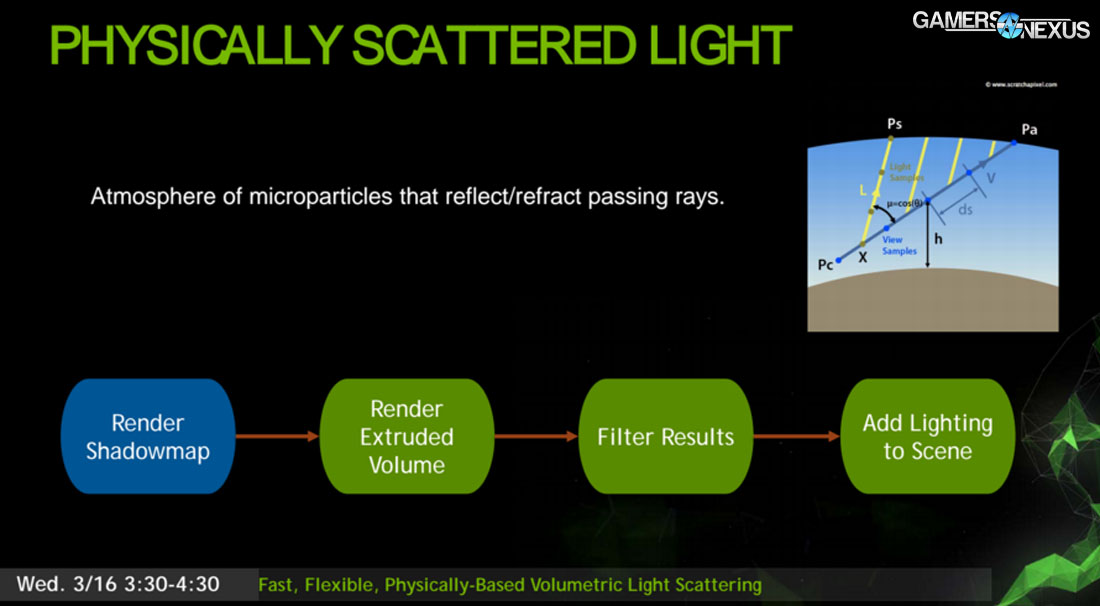

In the newest Volumetric Lighting algorithm update – releasing tomorrow at GDC 2016 – nVidia is demonstrating its move away from directional light with shadow mapping; the company will instead highlight a focus on extruded light volume geometry.

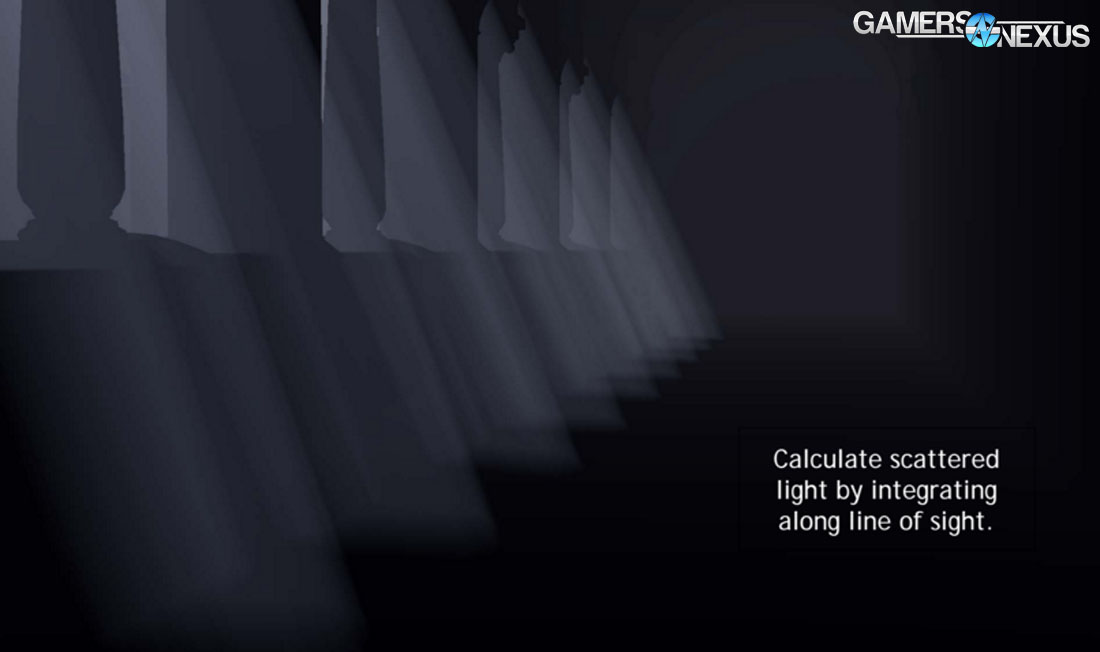

By extruding geometry for the light, the Volumetric Lighting algorithm is able to calculate scattered light (rays reflected and refracted by microparticles in the air) with an understanding of objects. Objects which partially or fully occlude light sources will be integrated in the calculation, ensuring appropriate shading where necessary (like the back side of an occluding pillar – which should be partly dark) and lighting where the rays hit. Rays gradually lose their power as they travel farther from the source or pass through semi-transparent objects, all calculated in real-time on the GPU.
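As a rough illustration of the falloff and occlusion behavior described above, here is a minimal Python sketch. It is a ray-marched, single-scattering stand-in and not nVidia's extruded-volume algorithm; the function name, the `sigma` extinction coefficient, and the `occluded` callback are all assumptions made for this example.

```python
import math

def in_scattered_light(samples, step, sigma, occluded):
    """March along a view ray and sum the light scattered toward the eye.

    samples  -- number of steps taken along the view ray
    step     -- distance between steps, in scene units
    sigma    -- extinction coefficient of the medium (hypothetical value)
    occluded -- function(distance) -> True if that sample cannot see the light
    """
    total = 0.0
    for i in range(samples):
        d = (i + 0.5) * step  # sample at the midpoint of each step
        if occluded(d):
            continue  # sample sits in shadow, so it scatters no light
        transmittance = math.exp(-sigma * d)  # Beer-Lambert falloff with distance
        total += sigma * transmittance * step
    return total

# A view ray through open air versus one that passes behind a pillar.
open_air = in_scattered_light(100, 0.1, 0.2, lambda d: False)
behind_pillar = in_scattered_light(100, 0.1, 0.2, lambda d: 3.0 <= d <= 6.0)
print(open_air > behind_pillar)  # True
```

The shadowed stretch of the ray contributes nothing, which is what produces the dark shaft behind an occluder.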

By adding scattered light to the scene with these crepuscular rays, the scenes rendered possess greater apparent depth and more accurate shading (and lighting) with responsiveness to line-of-sight (LOS) blockers.

Walking between buildings would be a fantastic example and almost begs for a NYC demo. Buildings can partly or fully occlude the sun's cast rays, or might have geometry (think: an arched bridge) which occludes only part of the rays. Volumetric Lighting allows for more accurate tracing of light in these examples.

Voxel-Accelerated Ambient Occlusion

Featured newly in Rise of the Tomb Raider, VXAO builds upon VXGI geometry data and can be used across all dimensions for voxelizing. Before getting into the voxel-accelerated “version” of ambient occlusion, a brief recap on what AO is:

Ambient lighting scatters light everywhere in the game world in a more-or-less uniform fashion. Equal amounts of light come from everywhere and meet at a single point, which makes for uneven shadows and lighting on certain surfaces. This is especially true for occluded surfaces that may be visible to the player, but not to light sources that are off-screen. NVidia's Rev Lebaredian used the first Toy Story movie as an example, where ambient light was used in addition to directional light, causing odd areas of unnatural brightness – Woody's mouth, for instance, was so well-lit that it appeared he'd had an LED-backlit tongue.

Ambient Occlusion methods introduced means to approximate shadowing of areas with varying levels of light contact, using individual shadow tracing in early CG film. This has historically been too “expensive” for use in real-time game rendering, but has made its way into gaming as GPUs and graphics tech have improved. If you've ever seen SSAO, HDAO, or HBAO, then you've seen iterations of modern, real-time AO. Screen-space ambient occlusion was one of the first “hacks” for AO, which assumed the entire world was limited to the Z-buffer, or the camera's perspective. The Z-buffer contains the distance of each pixel from the point-of-view of the camera, so screen-space ambient occlusion only traces shadows and calculates for the data currently on screen.
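To make the Z-buffer dependence concrete, here is a toy screen-space AO pass in Python. It is only a sketch under simplifying assumptions: the depth buffer is a small 2D list, the occlusion test is a plain neighbor depth comparison, and the `radius` and `bias` parameters are hypothetical. Shipping SSAO, HDAO, and HBAO implementations are considerably more involved.

```python
def screen_space_ao(depth, radius=1, bias=0.05):
    """Estimate occlusion per pixel using only an on-screen depth buffer.

    depth -- 2D list of camera-space depths (smaller means closer to the camera)
    Returns a 2D list of occlusion factors in [0, 1].
    """
    h, w = len(depth), len(depth[0])
    ao = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            occluders = 0
            samples = 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    if dx == 0 and dy == 0:
                        continue
                    sx, sy = x + dx, y + dy
                    if not (0 <= sx < w and 0 <= sy < h):
                        continue  # off-screen: no depth data exists to test against
                    samples += 1
                    # A neighbor noticeably closer to the camera counts as an occluder.
                    if depth[sy][sx] < depth[y][x] - bias:
                        occluders += 1
            ao[y][x] = occluders / samples if samples else 0.0
    return ao

# A flat wall at depth 1.0 with one closer block in the middle.
buffer = [[1.0, 1.0, 1.0],
          [1.0, 0.5, 1.0],
          [1.0, 1.0, 1.0]]
result = screen_space_ao(buffer)
```

Note the off-screen branch: anything outside the buffer is simply skipped, so geometry the camera cannot see never darkens a pixel.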

The downside to this approach is inherent to its Z-buffer limitation, which was the only “affordable” means through which the AO could be calculated: Objects which are unseen to the user (maybe a tower in the distance, just off-camera) will not be represented in SSAO calculations, as those objects are not contained in the Z-buffer. Here's an example:

Look underneath the tank. In the example using modern AO methods, the underside of the tank, some of the tread, and some of the barrels are brightly colored where it'd seem there would more appropriately be shadows. This is because the AO calculation doesn't know that the tank has more geometry; that geometry isn't being drawn (why should it be? It's unseen), but because the Z-buffer doesn't contain the geometric data, there are no traced shadows or light interactions occurring on-screen which would correspond to the tank's unseen triangles. That may be fine for the computer, but for human players who intuitively understand that there's a tank body, rear, and right flank, a disconnect occurs that makes the shadowing of the scene look “off” or “cheap.”

AMD and nVidia have both spoken to this before, each introducing respective AO technologies that more intelligently calculate scenes (HDAO for AMD, HBAO+ for nVidia). The new technology is voxel-accelerated AO.

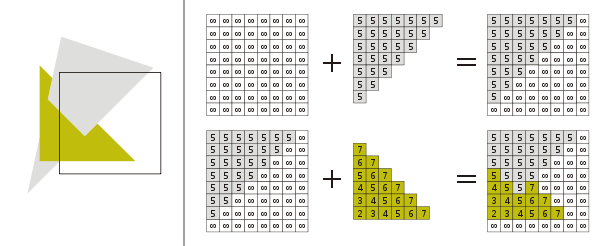

VXAO takes advantage of Maxwell (and up) hardware, similar to VXGI that we discussed previously. Voxel-accelerated ambient occlusion converts the scene's geometry to voxels – a reduction in complexity from the existence of raw triangles – and then performs cone-tracing for its shadowing computation.

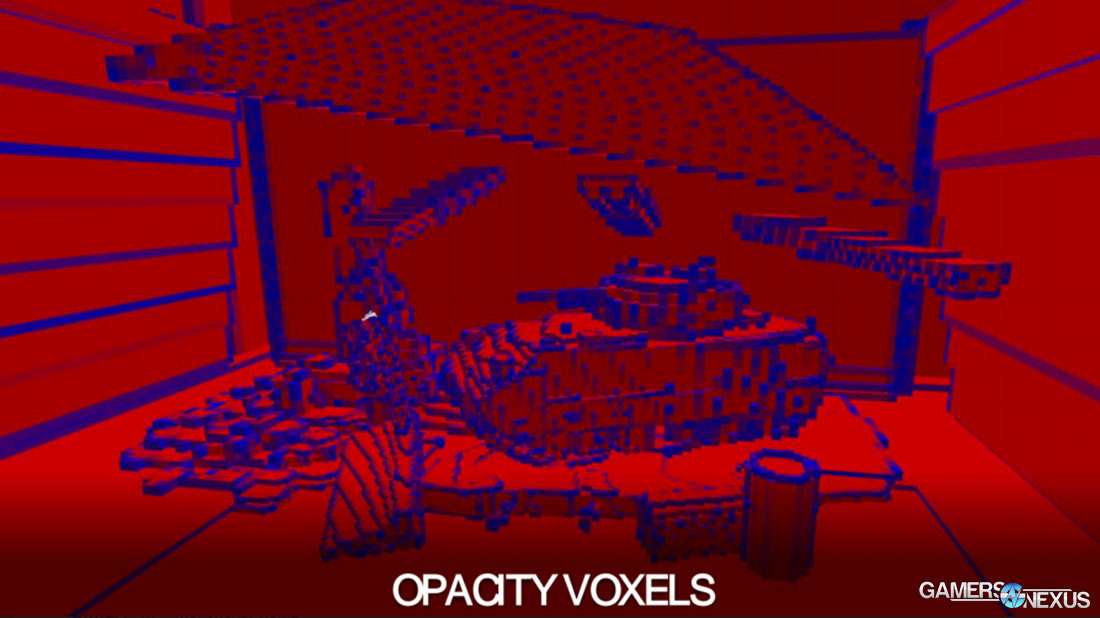

With screen-space AO, the pixel shader uses the Z-buffer as input and performs per-point calculation to approximate shadowing, assuming the Z-buffer is all the geometry that exists. VXAO instead takes the scene's geometric data and converts it into voxels (suggested reading: our vertices versus voxels article). Here's another sample image:

Each voxel is red or blue. Blue voxels are partially occluded – geometry at least partly fills that voxel – while red voxels are completely covered by the volume of geometry; they're opaque. The conversion to voxels generates a 3D representation of the scene, which the engine and GPU can now inject queries into. Cone-tracing is performed to draw lines from each point to calculate how much occlusion exists from respective points, traced into the hemisphere around that point.
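The hemisphere query described above can be sketched in Python as follows. This is a simplified stand-in, not the GameWorks implementation: it marches thin rays through a single-resolution grid, whereas real cone tracing widens its footprint and samples coarser voxel levels with distance. The `occupancy` dictionary layout, the step size, and the five sample directions are assumptions chosen for illustration.

```python
import math

def voxel_ao(occupancy, origin, directions, max_dist=8.0, step=1.0):
    """Average occlusion seen from `origin` along the given directions.

    occupancy  -- dict mapping integer (x, y, z) voxel coords to opacity in [0, 1];
                  1.0 is a fully covered voxel, values in between are partial
    directions -- unit vectors spanning the hemisphere around the surface normal
    """
    total = 0.0
    for dx, dy, dz in directions:
        visibility = 1.0
        t = step
        while t <= max_dist and visibility > 0.01:
            cell = (math.floor(origin[0] + dx * t),
                    math.floor(origin[1] + dy * t),
                    math.floor(origin[2] + dz * t))
            # Each voxel along the way blocks a share of the remaining light.
            visibility *= 1.0 - occupancy.get(cell, 0.0)
            t += step
        total += 1.0 - visibility
    return total / len(directions)

# Five directions around a +Z normal: straight up plus four tilted at 45 degrees.
s = math.sqrt(0.5)
hemisphere = [(0.0, 0.0, 1.0), (s, 0.0, s), (-s, 0.0, s), (0.0, s, s), (0.0, -s, s)]

point = (0.5, 0.5, 0.5)
one_block_above = {(0, 0, 2): 1.0}
ao = voxel_ao(one_block_above, point, hemisphere)  # only the upward direction is blocked
```

Because the grid covers the scene rather than the screen, the query still finds occluders that the camera cannot see.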

This is significantly more affordable on GPU resources than performing the same calculations and tracing from the original scene's triangles, and is able to rely on Maxwell (and up) architecture for specialized processing.

Maxwell includes a fast geometry shader (called “Fast GS”). Fast GS bypasses the traditional requirement to rasterize a given triangle three times for voxelization into XYZ, and instead sends the data through the geometry pipeline and into multiple render targets.

Above is the in-game results comparison between SSAO and VXAO.

Frustum-Traced Hard Shadows with Soft Shadows & HFTS

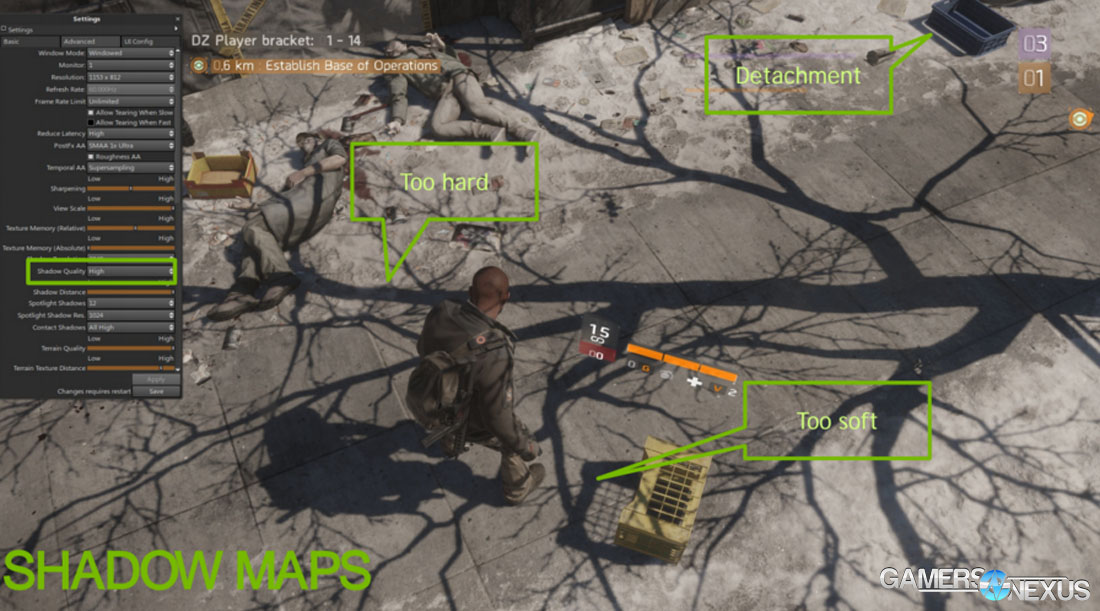

The above image is a scene taken from The Division, which features new HFTS technology that's enabled by GameWorks.

Look closely at the bin (top-right area) in the above image. Notice that there is a gap between the bin and the shadow cast upon the ground – an unnatural occurrence. Notice, too, that the tree shadow does not diffuse or grow softer with increased distance from its source, as would happen in the real world. The shadow is fairly consistent and retains its hard lines throughout its entirety.

Video game shadows are extrapolated from shadow maps, often made with a mix of tools and by-hand tuning. In the case of the bin, detachment is occurring because of a shadow mapping hack that approximates representation of geometry on the scene. Each shadow-map pixel stores a z-value (depth) from the light source's point-of-view, and the limited precision of that value can sometimes cause a surface to incorrectly shadow itself. To deal with this, developers push the z-value away from the source of light so that an object doesn't shadow itself – but the result is detachment, above, where the shadow gets pushed too far from some objects.
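The trade-off between self-shadowing and detachment comes down to one comparison, sketched here in Python. The depth values and the 0.05 bias are made-up numbers for illustration; real engines usually combine a constant bias with a slope-scaled term.

```python
def in_shadow(receiver_depth, stored_depth, bias):
    """Classic shadow-map test, with all depths measured from the light.

    A point is shadowed when the shadow map recorded something closer to
    the light than the point itself, after the point is nudged toward
    the light by `bias`.
    """
    return receiver_depth - bias > stored_depth

# Case 1: a point on a slanted floor. The texel stored the floor at 10.00, but
# this point inside the same texel is really at 10.02.
acne = in_shadow(10.02, 10.00, bias=0.0)          # True: the floor shadows itself
fixed = in_shadow(10.02, 10.00, bias=0.05)        # False: the bias removes the artifact

# Case 2: ground at 10.00 right where the bin touches it. The bin's base was
# stored at 9.97, so this point should be shadowed.
contact = in_shadow(10.00, 9.97, bias=0.0)        # True: correct contact shadow
detached = in_shadow(10.00, 9.97, bias=0.05)      # False: the shadow detaches from the bin
```

The same bias that cures the first case creates the gap in the second, which is the artifact visible at the base of the bin.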

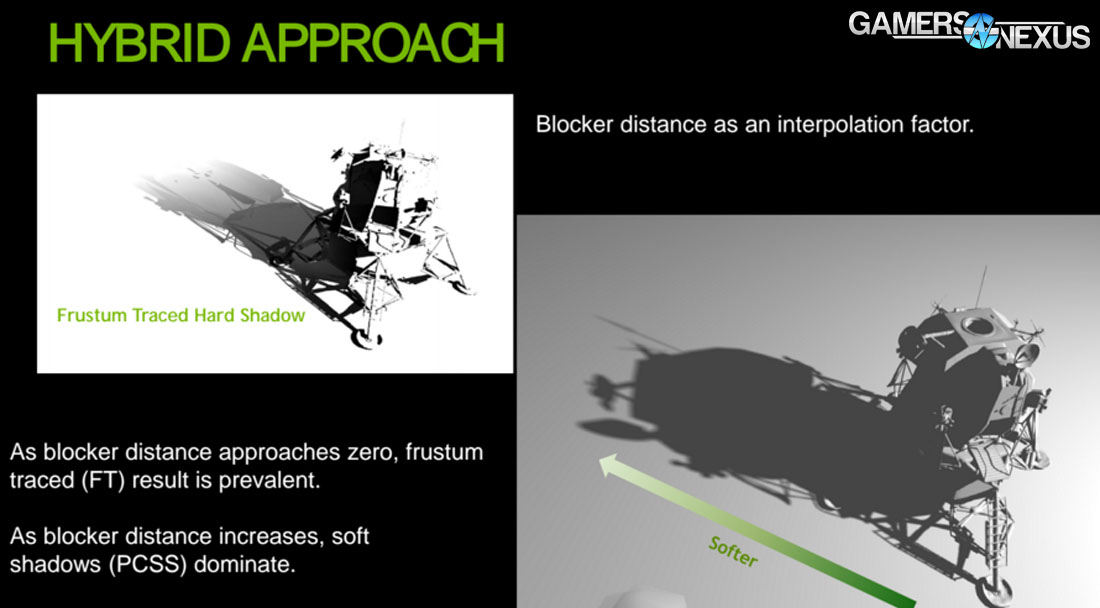

Frustum tracing extrudes volume from the perspective of the light source, along the frustum, and calculates where the shadow should land with greater precision. A “frustum” is the shape of geometry seen when looking from the perspective of a camera or light source; extruding from the viewpoint through that image plane creates a truncated pyramid shape, called the “frustum.”

This new form of shadow generation – which happens in real-time – will create harder shadow lines where finely detailed geometry exists (reducing the possibility of 'marching ants,' or the aliasing effect), softer shadow lines where objects distance themselves from the source, and eliminate detachment of shadows from objects. The cost is greater than traditional shadow mapping, but not so great that it's unusable.

NVidia's approach to all of this is to blend the two techniques: PCSS (percentage-closer soft shadows) is used when farther from the occluder, and frustum-traced hard shadows are used when closer.
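A hedged Python sketch of that blend follows. The penumbra estimate is the standard similar-triangles formula used by PCSS; the linear blend and its `fade_distance` parameter are assumptions for illustration, since the exact weighting HFTS uses is not described here.

```python
def penumbra_width(d_receiver, d_blocker, light_size):
    """PCSS penumbra estimate from similar triangles.

    d_receiver and d_blocker are distances from the light; the penumbra
    grows as the receiver moves away from the blocker.
    """
    return (d_receiver - d_blocker) * light_size / d_blocker

def blend_shadow(hard, soft, d_receiver, d_blocker, fade_distance=1.0):
    """Mix a hard (frustum-traced) and a soft (PCSS) visibility term.

    fade_distance is a hypothetical tuning knob: at zero separation the
    result is fully hard, and past fade_distance it is fully soft.
    """
    t = (d_receiver - d_blocker) / fade_distance
    t = min(max(t, 0.0), 1.0)  # clamp the blend weight to [0, 1]
    return (1.0 - t) * hard + t * soft

at_contact = blend_shadow(hard=1.0, soft=0.4, d_receiver=5.0, d_blocker=5.0)   # 1.0
far_away = blend_shadow(hard=1.0, soft=0.4, d_receiver=8.0, d_blocker=5.0)     # 0.4
```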

Combustible Fluid, Fire, Smoke, & Volume Rendering

Just Cause 3 has some of the most impressive explosions we've seen – but they're still not properly dynamic. Flying a plane through a plume of smoke and fire doesn't cause tendrils of smoke to cling to the plane's wings, nor flames to displace from the propeller. To perform such a fluid calculation is incredibly intensive on the GPU and expensive on development resources – so it makes sense to look toward SDKs for such a tool-set.

NVidia's Flow is the appropriate one for this instance. Flow is pushing for Unreal Engine integration in 2Q16 and has just released as a beta. The tech has a custom-tailored algorithm for combustion and advanced fluid simulation within game (fluid includes fire, smoke, air, and liquid).

Presently, developers divide bounding boxes into voxels for spot-calculation of fluid effects (let's take smoke, for an example). That box performs calculation per-voxel and intra-voxel, and exceeding the confines of the box means that either the fluid is clipped – we've all seen this, where smoke might clip into the side of a building and stop suddenly, for instance – or the box size changes. Making the box larger means more computations, and it also expands the memory cost and strains real-time resource budgets. The alternative is to expand the voxels, but that decreases resolution and fidelity – so clipping is avoided, but there's a sudden and sharp change in effect quality.

These boxes present other problems when an effect might streak across diagonally – like a plane's contrail – which then wastes significant voxel space and resources.

The new “Flow” technology only allocates voxels that are relevant to the area of impact, eliminating the requirement of a bounding box. Flow z-allocates voxels as the fluid changes, and allows a fixed resolution while solving authoring problems – like defining boxes and limitations.
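To show why a diagonal effect is so wasteful in a bounding box, the Python sketch below compares a dense box against block-wise sparse allocation. It is not Flow's data structure: the function names and the block size of 4 are assumptions chosen only to make the memory difference visible.

```python
def dense_cell_count(cells):
    """Voxels in the axis-aligned bounding box that encloses every occupied cell."""
    count = 1
    for axis in range(3):
        values = [c[axis] for c in cells]
        count *= max(values) - min(values) + 1
    return count

def sparse_blocks(cells, block_size=4):
    """Set of block coordinates that contain at least one occupied cell."""
    return {tuple(v // block_size for v in c) for c in cells}

# A contrail streaking diagonally through a 64 x 64 x 64 region.
contrail = [(i, i, i) for i in range(64)]

dense = dense_cell_count(contrail)            # 64 * 64 * 64 = 262144 voxels
blocks = sparse_blocks(contrail)              # 16 blocks touched
sparse = len(blocks) * 4 ** 3                 # 16 * 64 = 1024 voxels
```

In this toy case the sparse layout stores 256 times fewer voxels at the same resolution, and new blocks can be added as the fluid moves rather than resizing a box.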

See the below video where we talk with Crytek about some CryEngine tech that relates to some of this coverage:

The real-world result is smoke, fire, and fluid dynamics effects that interact with the player and environment. This is where graphics are headed – maybe not today (at least, not in games), but soon. Flying or walking through smoke clouds with smoke particles clinging to the character will be possible with real-time CFD. Flow won't be the only solution, but it's the strongest start we've seen.

GPU Rigid Body Technology

NVidia has tried rigid body tech a few times now, but this time, the team thinks it's finally here. The hope is to move rigid body physics to the GPU and keep the same fidelity as the CPU algorithm (PhysX). More important, if it all works, is switching between the CPU and GPU in the same run based upon load level. If the workload is small and suitable for the CPU – a low number of physical bodies, for instance – it'll be moved to the CPU; if there is a large number of bodies, it goes to the GPU.
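The load-based switch could look something like the Python sketch below. Everything here is hypothetical: the body-count thresholds are invented, and the gap between them (hysteresis, so a scene hovering near the limit doesn't flip back and forth every frame) is our own design assumption rather than anything nVidia described.

```python
def choose_backend(body_count, current, to_gpu=2000, to_cpu=1500):
    """Pick where the next simulation step runs, based on load.

    body_count -- number of active rigid bodies this frame
    current    -- backend used for the previous step, "cpu" or "gpu"
    to_gpu     -- hypothetical count at which a CPU run moves to the GPU
    to_cpu     -- hypothetical count at which a GPU run moves back to the CPU
    """
    if current == "cpu" and body_count >= to_gpu:
        return "gpu"
    if current == "gpu" and body_count <= to_cpu:
        return "cpu"
    return current  # inside the hysteresis band: stay where we are

backend = "cpu"
for bodies in (100, 2500, 1800, 1000):
    backend = choose_backend(bodies, backend)
# backend visits "cpu", "gpu", "gpu", "cpu" across the four frames
```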

We'll talk more about this one in the future, as the tech continues to expand.

Be sure to check out additional GDC coverage on the YouTube channel and website. Consider supporting us on Patreon if you like this deep-dive content.

- Steve “Lelldorianx” Burke.