RAM Performance Benchmark: Single-Channel vs. Dual-Channel - Does It Matter?


Memory has a tendency to get largely overlooked when building a new system. Capacity and frequency steal the show, but beyond that, it's largely treated as a check-the-reviews component. Still, a few guidelines exist, such as not mixing and matching kits and purchasing strictly in pairs where dual-channel is applicable. These rules make sense, especially to those of us who've been building systems for a decade or more: Mixing kits was a surefire way to encounter stability or compatibility issues in the past (and is still questionable - I don't recommend it), and as for dual-channel, no one wanted to cut their speeds in half.

When we visited MSI in California in 2013 (when we also showed how RAM is made), they showed us several high-end laptops that all featured a single stick of memory. I questioned this choice since, surely, it made more sense to use 2x4GB rather than 1x8GB from a performance standpoint. The MSI reps noted that "in [their] testing, there was almost no difference between dual-channel performance and normal performance." I tested this upon returning home (results published in that MSI article) and found that, from a very quick test, they looked to be right. I never got to definitively prove where / if dual-channel would be sorely missed, though I did hypothesize that it'd be in video encoding and rendering.

In this benchmark, we'll look at dual-channel vs. single-channel platform performance for Adobe Premiere, gaming, video encoding, transcoding, number crunching, and daily use. The aim is to debunk or confirm a few myths about computer memory. I've seen a lot of forums touting (without supporting data) that dual-channel is somehow completely useless, and to the same tune, we've seen similar counter-arguments that buying anything less than 2 sticks of RAM is foolish. Both have merits.

Video - RAM: Testing Dual-Channel vs. Single-Channel Performance

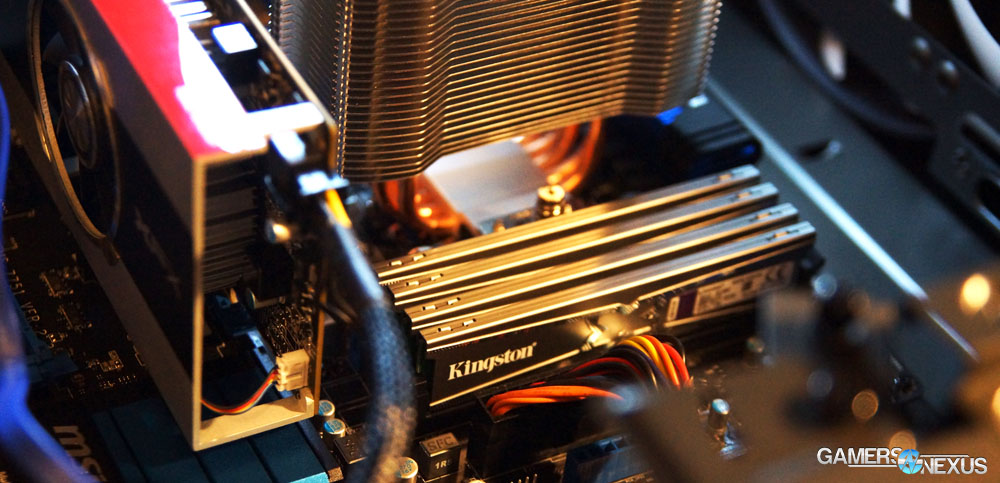

The above is the video component, wherein we show off our After Effects RAM preview FPS when dual-channel is used, along with most of the other tests. The RAM featured is Kingston's HyperX 10th Anniversary RAM, which is a special version of their normal HyperX memory. More on this below.

Addressing Terminology: There Is No Such Thing as "Dual-Channel RAM"

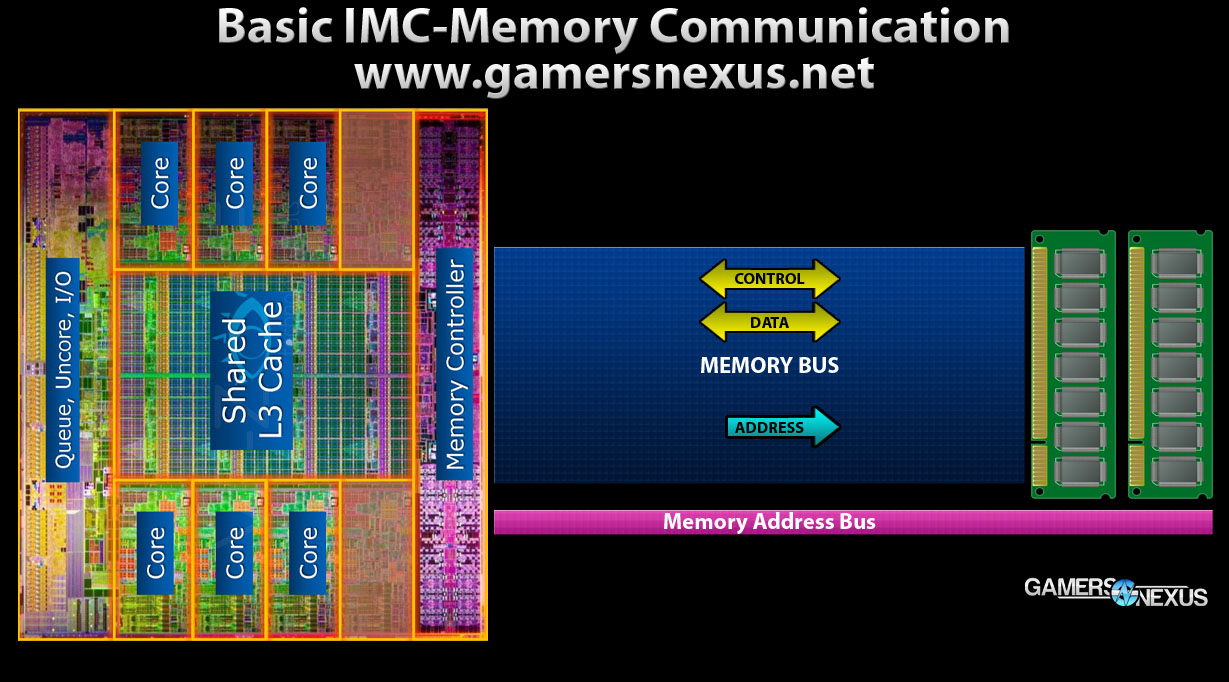

First of all, there's no such thing as "dual-channel memory." I want to get that cleared up early. Memory channeling exists at the platform level, so a dual-channel chipset or IMC (Integrated Memory Controller, as in modern CPUs) may exist, but the memory itself does not have a special bit or chip that controls this. It is up to the motherboard and supporting platform to offer multiple channels.

Things get a little different once you enter quad-channel territory from a density perspective, but we won't get into that here. The short of it is that higher channel configurations (like with IB-E) give you the opportunity to go to higher densities, like 64GB, which has a pretty large impact on performance potential. Again, that's out of the scope of our dual-channel / single-channel article.

What Are We Testing?

We're testing the performance of two 4GB sticks (2x4GB; more on the platform specs & methodology below) in single- and dual-channel configurations. Please note that all tests were conducted with a discrete GPU and will not use the IGP present in

Further, note that memory frequency will have a significant impact upon APUs and IGPs, and channel configuration could as well (not tested), so these test results are strictly targeted at systems that don't rely heavily upon an integrated graphics chip. This is because APUs and IGPs do not have on-card memory, as a video card does, and thus must access system memory for their graphics processing; in this instance, DDR3 RAM is (1) physically farther from the GPU component of the CPU and (2) significantly slower than the GDDR5 memory found on video cards. With this in mind, you'd want every advantage you can get with an IGP.

Again, this test strictly looks at memory performance when the IGP is not utilized.

Dual-Channel Architecture: How Dual-Channel RAM Platforms Work

If you already understand the basic, top-level concepts of multichannel platforms as they pertain to memory, you can skip this part and jump into the methodology section immediately following. For those unsure of exactly what "dual-channel" or "multichannel" means, read on.

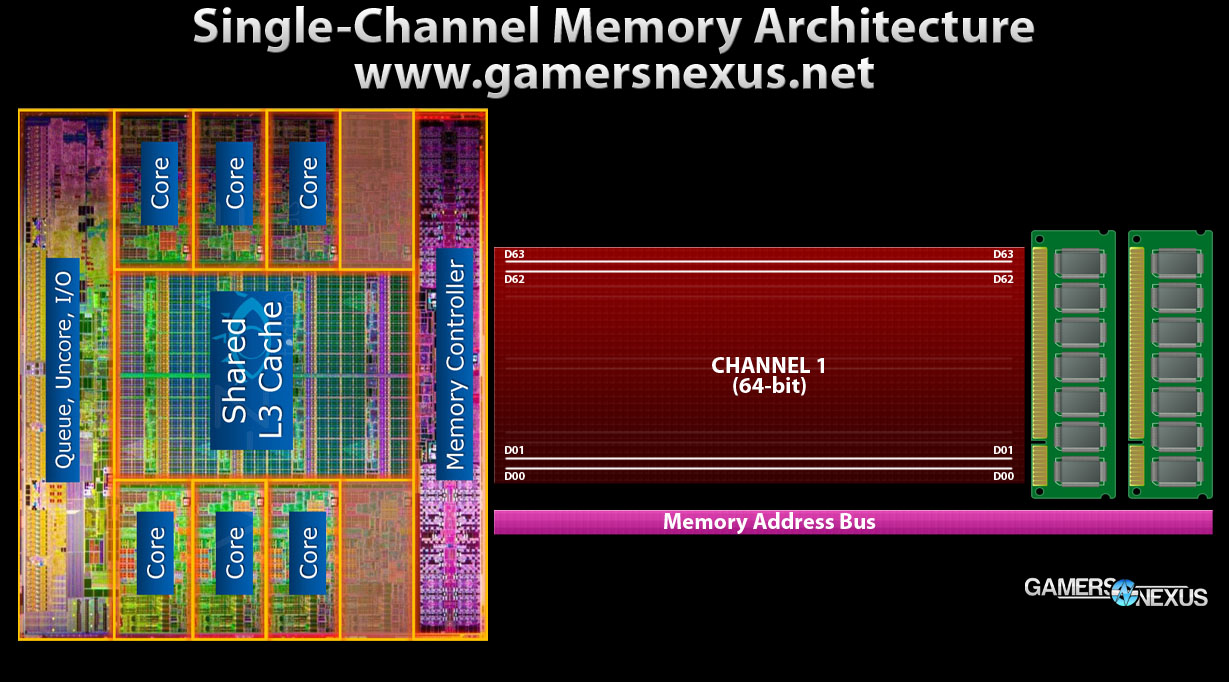

A single stick of RAM will operate on a single 64-bit data channel, meaning it can push data down a single pipe that is 64 bits wide. The channel effectively runs between the memory controller or chipset and the memory socket; in the case of modern architectures, the memory controller is often integrated with the CPU, rather than acting as a standalone board component.

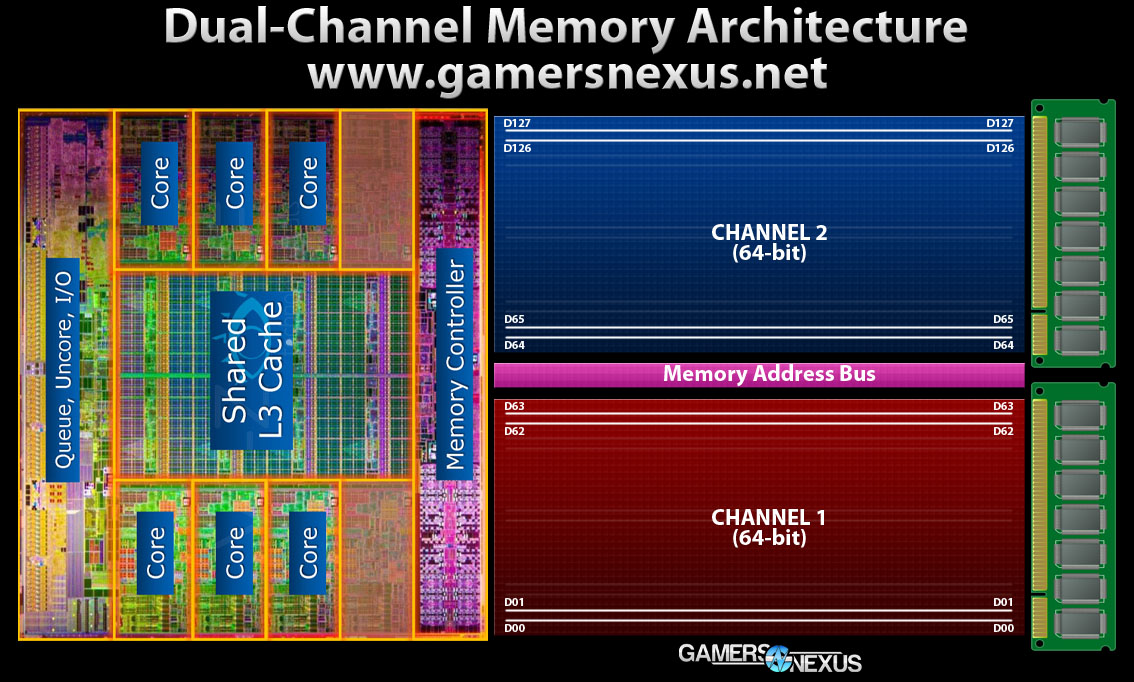

By utilizing multi-channel platforms -- something available on every modern build -- we multiply the effective channel width by the count of channels available. "Effective" is key. In the case of dual-channel configurations, we've now got 2x64-bit channels available to the memory. This means we've doubled the data traces running in the memory bus, and now have an effective 128-bit channel, which in turn doubles maximum theoretical bandwidth. This is why I made it a point to say that dual-channel platforms are what exist, not memory -- in the case of dual-channel, the board will host 128 physical traces to handle data communication between the IMC and RAM. This is compared against 64 for single-channel platforms and 256 for quad-channel platforms.

In this image, we see our physical data traces (wires) running between the memory and the IMC. You'll notice that there's room for 128 in the below image, as opposed to the 64 above. D0-D63 represent the first channel, D64-D127 represent our second channel. Modules can process 64 bits of data at any given time, and so dual-channel platforms will read and write to two modules simultaneously (saturating the 128-bit wide bus).

The memory will sort of ping-pong data down the channels, effectively doubling its potential "speed." This should not be confused with double data rate (DDR) memory, which operates independently of the channel configuration.
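To put numbers on the "doubles maximum theoretical bandwidth" claim, here is a minimal Python sketch. It assumes the usual rule of thumb (peak bandwidth = transfers per second x bytes per transfer x channel count) and treats a "2400MHz" rating as 2400 million transfers per second. The function name is made up for illustration, and real-world throughput will land below these ceilings.

```python
def peak_bandwidth_gbs(mega_transfers_per_second, channels, channel_width_bits=64):
    """Theoretical peak bandwidth in GB/s (1 GB = 10^9 bytes).

    Assumption: every channel is 64 bits wide and moves one full-width
    word per transfer, which is the idealized case described above.
    """
    bytes_per_transfer = (channel_width_bits // 8) * channels
    return mega_transfers_per_second * 1e6 * bytes_per_transfer / 1e9


if __name__ == "__main__":
    for channels, label in ((1, "single-channel"), (2, "dual-channel")):
        print(f"{label}: {peak_bandwidth_gbs(2400, channels):.1f} GB/s")
```

At a 2400 rating this works out to 19.2 GB/s for one channel and 38.4 GB/s for two. That is the theoretical ceiling only; it says nothing about how much of it any given application can actually use.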

In order to use RAM in a dual-channel configuration, the memory must be socketed into matching memory banks and should be identical in spec. Technically, some boards will allow different spec memory in dual-channel configurations, but you'll be throttled to the slowest module and may experience instability. If you're running four sticks with two different models of memory, just stick the matching modules into a bank (bank 0 contains brand A; bank 1 contains brand B).

Up until very recently, you'd have to use bank 0 (slots 1 & 3, assuming we start counting at '1') to properly use dual-channel with only two sticks present. Slots 2 & 4 (bank 1) would then be filled upon upgrade.

RAM Test Methodology Pt. 1 - Synthetic & Real-World Tests Needed

Testing any component competently isn't a trivial feat. I used to do portables test engineering at a large computer manufacturer, where we devised some of the very first USB3.0 test cases and worked with Intel's first consumer-available SSDs (X25). This was somewhere around the Nehalem reign, so the architecture was relatively similar to what we deal with today. Those tests weren't easy to figure out, but with the understanding of how each product works and what software can stress its bottlenecks (without bottlenecking elsewhere - like the CPU, GPU, etc.), it's achievable.

My point is that RAM isn't too dissimilar from Flash testing in terms of test concerns and methodology. For these RAM tests, I specifically wanted to focus on the performance of multichannel configurations vs. normal operating frequency ("single-channel," we'll call it).

From our Kingston HQ test & assembly facility tour. Learn about this machine here.


Applying both synthetic and real-world tests is important; without synthetic tests, we can't adequately isolate memory performance and make extrapolations / predictions for real-world tests. Being able to predict outcomes in the real-world is a keystone to scientific test methodology, so you'll almost always see synthetic benchmarks in our testing. That said, without real-world tests, it's tough to put things into perspective for users. Synthetic tests take some heat on occasion for being "unrealistic," but at the end of the day, a correctly-applied, correctly-built synthetic benchmark is paramount to performance analysis.

RAM frequency and channeling will have the biggest theoretical impact upon, obviously, memory-intensive applications. In this environment, those applications tend to be render, encoding, transcoding, simulation, and computation-heavy tasks (applying a filter in After Effects, for instance). I want to make clear that we are strictly testing multichannel performance between dual-channel and single-channel platforms and will not be testing triple-, quad-, or alotta-channel (that's a technical term) performance in this benchmark; we will also not be testing memory frequency herein, and so it will remain a defined constant in the test. I also want to make clear that the memory capacity remained constant throughout the entire test, as did the sticks tested. I will discuss how this was achieved below.

These tests were conducted with consumers in mind, but I will comment on the impact for developers and simulations briefly -- at least, as far as my professional experience will confidently allow.

Multichannel Performance Hypothesis

Before getting into specific tools and use-case scenarios, let's explore my hypothesis going into the lab.

Up until that MSI meeting I mentioned, it was my firm belief that dual-channel configurations should always be opted for in any system build that supported it. This theoretically doubles your memory's transfer capabilities, after all, so halving potential seemed unnecessary.

After my preliminary tests that indicated dual-channel performance might not be quite as substantial as I'd always thought, my considerations changed. Going into this benchmark, it was my hypothesis that:

- Dual-channel configurations would exhibit no noteworthy difference in gaming use-case scenarios.

- Dual-channel configurations would exhibit no noteworthy difference in system boot and daily I/O use cases.

- Dual-channel configurations would show significant advantage in livestream previews for intensive render applications.

- Dual-channel configurations would show significant advantage in render, encoding, and transcoding applications (for video and incompressible audio).

- Dual-channel configurations would show significant advantage in compiling applications (data archiving).

I validated some of these beliefs, but 'proved myself wrong' on a couple of them. We'll revisit each throughout the article.

Test Concerns: Capacity, Memory Saturation, and Synthetic Benchmarks

Going into the testing, I read several similar benchmarks that were performed by users across the web; we also did some collective team research on professional benchmarks performed in-house by memory manufacturers, who were very helpful in supplying test methodology revisions and their own results (shout to

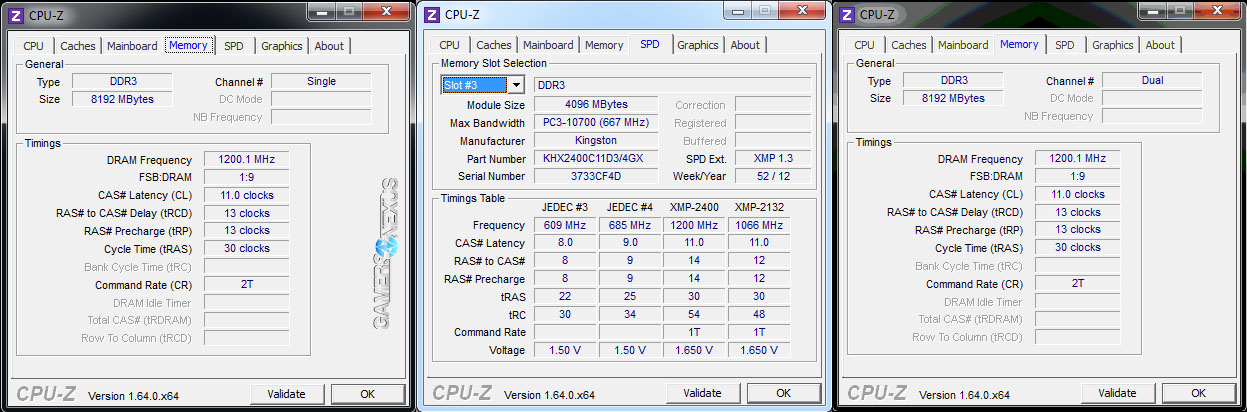

Although capacity (1x4GB vs. 2x4GB) should theoretically not present an issue in most of our tests, it would present a very clear limitation with After Effects and Premiere RAM previews and encoding. I also wanted to eliminate the doubt in anyone's mind. To keep capacity a constant and mitigate concerns of test invalidation, the tests were run in these configurations:

- 2x4GB; bank 0 (Dual).

- 2x4GB; mismatched banks (Single).

We used Kingston's 10th Anniversary HyperX RAM at 2400MHz, which is basically just a special-colored version of their normal HyperX RAM. I will define the RAM modules below.

By using mismatched slots with our motherboard, we're able to force single-channel operation for the second test setup. This means we can still run 8GB of RAM, so capacity is constant, and it also means we're still testing multi-channel performance vs. single-channel without eliminating a full stick.

So that one was solved.

Other concerns arose with memory saturation. We don't have to saturate all 8GB of RAM to test the speeds, but it is beneficial to saturate as much of the memory as possible to ensure it's pushed to the point where it actually benefits from faster read/write performance. Our only test that nearly filled the entire capacity was the After Effects RAM preview. The other ones saturated memory to a point we deemed to be sufficient for testing (often around half capacity, or 3-4GB).

Finally, synthetic benchmarks can sometimes show 30%, even 80% differences between the different configurations... but real-world testing could be 3-5%. We have explained each synthetic test's real-world applications in great depth below, so hopefully that'll help you understand when the results matter most and if they're relevant to you.

Please continue to page 2 for the test methodology, test tools, and test procedures; I defined each synthetic and real-world benchmark very carefully here, which will help in understanding the results.

RAM Test Methodology Pt. 2 - The Tools & Procedures

Where RAM goes to die. From our Kingston HQ tour last year.


I wrote a full-feature test case for this bench that was followed to the 't,' so each test was executed under identical conditions (beyond our one variable that changes). For trade reasons, we aren't releasing the start-to-finish test case spreadsheet, but I'll walk through most of it here. If you'd like to replicate my results, this should provide adequate tools and information to do so (please post your own below!).

Some standard benchmarking practices will go undetailed. Starting with a clean image, for instance, doesn't need much explanation.

We ran the following tools in the order they are listed:

Synthetic:

- Euler 3D.

- MaxxMem.

- WinRAR.

- Handbrake.

- Cinebench.

- Audacity LAME (honorable mention; was not significant enough to use).

Real-World:

- Shogun 2 Benchmark (load time).

- Shogun 2 Benchmark (FPS).

- Adobe Premiere encoding pass.

- Adobe After Effects live RAM preview pass.

Each test was conducted numerous times for parity. The shorter tests were run 5 times (Euler 3D, MaxxMem, Cinebench), the longer tests were run 3 times - because we do have publication deadlines (WinRAR, Handbrake, Premiere, After Effects), and the tests that produced varied results were run until we felt they were producing predictable outcomes (Shogun 2, Cinebench). All of these were averaged into a single subset of numbers for the tables.

Shogun 2 and Cinebench exhibited some performance quirks that threw the numbers off at first, but after a good deal of experimenting, I figured out why. Shogun 2 had just been installed, and its first launch of the bench was significantly slower than all subsequent launches (nearly 16%, in fact). My understanding is that the game unpacked some files upon first launch (maybe DirectX), and so these results were thrown out. The game continued to produce confusing load times, sometimes varying by nearly 11% on identical configurations. This was resolved by rebooting, verifying the game cache, rebooting, and starting the test again. After all of this, the results were almost perfectly consistent over a span of 15 tests and retests (within 1%, easily in margin of error).

Cinebench's performance declined heavily after each subsequent test. Severely. The first OpenGL single-channel pass reported 111FPS, the second 108, the third 106, the fourth 105, and then it flatlined around there. This was concerning. The test did this after numerous reboots and after changing to a dual-channel config. I eventually realized that the performance degradation only existed when the application continued testing without being terminated between tests. By this, I mean that the variance subsided when I started closing the program after each pass, then reopening it. This method was used for another 10-15 passes, all of which produced results within 1% of each other.
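The close-and-reopen approach can be sketched as a small harness that launches the workload in a brand-new process for every pass. This is only an illustration: Cinebench reports its own FPS score, whereas this sketch times wall-clock duration, and the command below is a placeholder one-liner, not a real benchmark invocation. Both function names are hypothetical.

```python
import statistics
import subprocess
import sys
import time


def timed_passes(command, passes):
    """Run `command` in a fresh process for each pass and time every run."""
    durations = []
    for _ in range(passes):
        start = time.perf_counter()
        # The process exits completely before the next pass begins,
        # so no state carries over inside the application.
        subprocess.run(command, check=True)
        durations.append(time.perf_counter() - start)
    return durations


def spread_percent(values):
    """Gap between the best and worst pass, as a percentage of the mean."""
    return (max(values) - min(values)) / statistics.mean(values) * 100.0


if __name__ == "__main__":
    # Placeholder workload standing in for the real benchmark executable.
    placeholder = [sys.executable, "-c", "sum(range(1_000_000))"]
    results = timed_passes(placeholder, passes=5)
    print(f"mean {statistics.mean(results):.3f}s, spread {spread_percent(results):.1f}%")
```

Feeding the four back-to-back scores quoted above (111, 108, 106, 105) into `spread_percent` gives a spread of roughly 5.6%, well outside the 1% band seen once the program was restarted between passes.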

RAM was purged between all tests. No active memory management programs were present outside of the default, clean Windows installation.

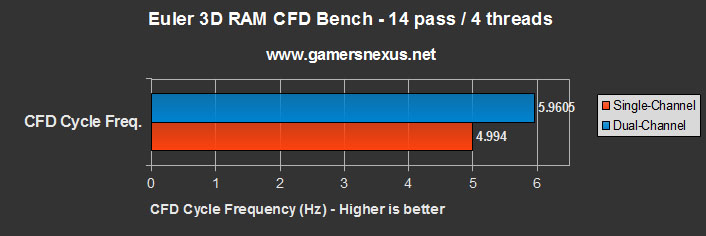

STARS Euler 3D: CFD Simulation Performance

Euler 3D is best used when testing CPUs and memory. The program is freely available through Caselab.

Figure 1: AGARD 445.6 Mach Contours at Mach 0.960. Source.


Euler 3D performs heavy floating-point and multi-threaded tasks that leverage resources in a similar manner to heavy-duty compiling and simulation. This is done using Computational Fluid Dynamics (CFD) and measures output "as a CFD cycle frequency in Hertz." Caselab defines their software's test sequence with great specificity, stating:

"The benchmark testcase is the AGARD 445.6 aeroelastic test wing. The wing uses a NACA 65A004 airfoil section and has a panel aspect ratio of 1.65, a taper ratio of 0.66, and a 45 degree quarter-chord sweep angle. This AGARD wing was tested at the

The benchmark CFD grid contains 1.23 million tetrahedral elements and 223 thousand nodes. The benchmark executable advances the Mach 0.50 AGARD flow solution. Our benchmark score is reported as a CFD cycle frequency in Hertz."

A lot of these words are fairly intimidating, but most of them are explaining that the test case is simulating a specific aircraft component at a specific speed. Simulation like this is a computation-intensive task that tends to demand high-speed components and multithreaded processing. CFD uses a computer model to calculate fluid flow (air, in this case). My understanding is that the test uses a complex matrix to calculate fluid flow at multiple points, then iterates (over time) with change in flow at surrounding points. Assuming this is correct, it means that we have to store values and access them upon each iteration, so RAM speed becomes important.
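As a toy illustration of that "store values, then revisit them every iteration" pattern, here is a tiny one-dimensional relaxation loop in Python. To be clear, this is not Euler 3D's solver or anything resembling real CFD; it is an assumed, heavily simplified stand-in that only shows how each pass reads and rewrites the whole data set based on neighboring points.

```python
def relax(values, iterations):
    """Repeatedly replace each interior point with the mean of its neighbours.

    The two end points act as fixed boundary conditions.
    """
    current = list(values)
    for _ in range(iterations):
        updated = current[:]  # every pass touches the entire array in memory
        for i in range(1, len(current) - 1):
            updated[i] = 0.5 * (current[i - 1] + current[i + 1])
        current = updated
    return current


if __name__ == "__main__":
    grid = [0.0] * 9 + [1.0]  # 0 at the left boundary, 1 at the right
    print([round(v, 3) for v in relax(grid, iterations=500)])
```

With ten points the working set fits in cache and memory speed is irrelevant. Scale the same access pattern up to the benchmark's 1.23 million elements and repeated full sweeps over the data start to lean on memory bandwidth.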

I should note that I'm not an expert in CFD -- not anywhere remotely close -- but after speaking with GN's resident physics engineer (Tim "Space_man" Martin), that was our educated speculation.

Regardless, you don't need to know how it works to know that a higher frequency is better. We ran the test with settings of "14 / 4," where 14 is indicative of the count of passes and 4 is the active thread-count.

A higher frequency rating is better in this test.

Anyone performing simulation or computation-intensive compiling tasks will benefit from these numbers; if you're in these categories, use this benchmark to judge how much multi-channeling matters to you.

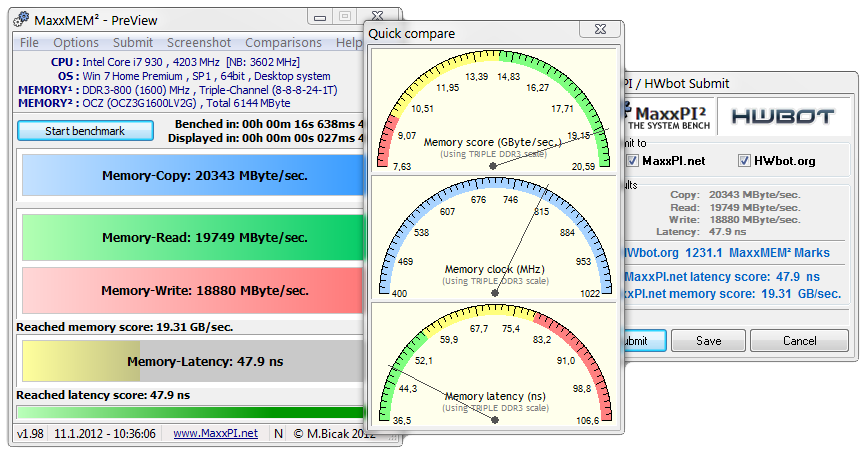

MaxxMem: Memory Copy, Read, Write, Latency, & Bandwidth Performance

MaxxMem is another synthetic test. I won't spend as much time here since it's a bit easier to understand.

NOTE: These aren't our results. Just a program screenshot.


MaxxMem tests memory bandwidth by writing to and reading from memory blocks that are sized in powers of 2, starting at 16MB and scaling up to 512MB. MaxxMem executes numerous passes and averages the result, though we did our own multiple passes to ensure accuracy. MaxxMem attempts to address CPU cache pollution concerns upon reads/writes, which can become a problem with some real-world tests that will skew results if improperly tested. The test uses "an aggressive data prefetching algorithm" to test theoretical bandwidth caps during read/writes.
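The block-size sweep described above can be sketched in a few lines of Python. This is a rough illustration only: it is not MaxxMem's algorithm, it does nothing about cache pollution or prefetching, and interpreter overhead means the numbers it prints should not be compared against MaxxMem's. The function names and the number of passes are assumptions.

```python
import statistics
import time


def block_sizes_mb(start_mb=16, stop_mb=512):
    """Block sizes in MB, doubling from start_mb up to and including stop_mb."""
    sizes = []
    size = start_mb
    while size <= stop_mb:
        sizes.append(size)
        size *= 2
    return sizes


def copy_bandwidth_mbs(size_mb, passes=3):
    """Average copy rate in MB/s over several passes for one block size."""
    source = bytearray(size_mb * 1024 * 1024)
    rates = []
    for _ in range(passes):
        start = time.perf_counter()
        copied = bytes(source)  # one full read of the source, one full write
        elapsed = time.perf_counter() - start
        rates.append(size_mb / max(elapsed, 1e-9))
    return statistics.mean(rates)


if __name__ == "__main__":
    for size in block_sizes_mb():
        print(f"{size:>4} MB block: {copy_bandwidth_mbs(size):,.0f} MB/s")
```

Note that the largest block needs roughly 1GB of free memory for the source and the copy combined, so trim `stop_mb` on smaller systems.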

WinRAR Compression: Large File Compression Speed Test

WinRAR is a pretty standard test for memory and CPUs. Fast memory enables rapid swapping during I/O to ensure the disk isn't hit too frequently once the process initializes. This is very much a 'real-world' test, but I've listed it as 'synthetic' since I'd imagine most users don't regularly perform large file compression.

Our tests were conducted with heavy compression settings and with 22GB of files, output as a WinRAR archive.

Server architects and anyone running web servers, database servers, or other machines that perform regular log file archival, indexing, or similar tasks are directly represented by this test.

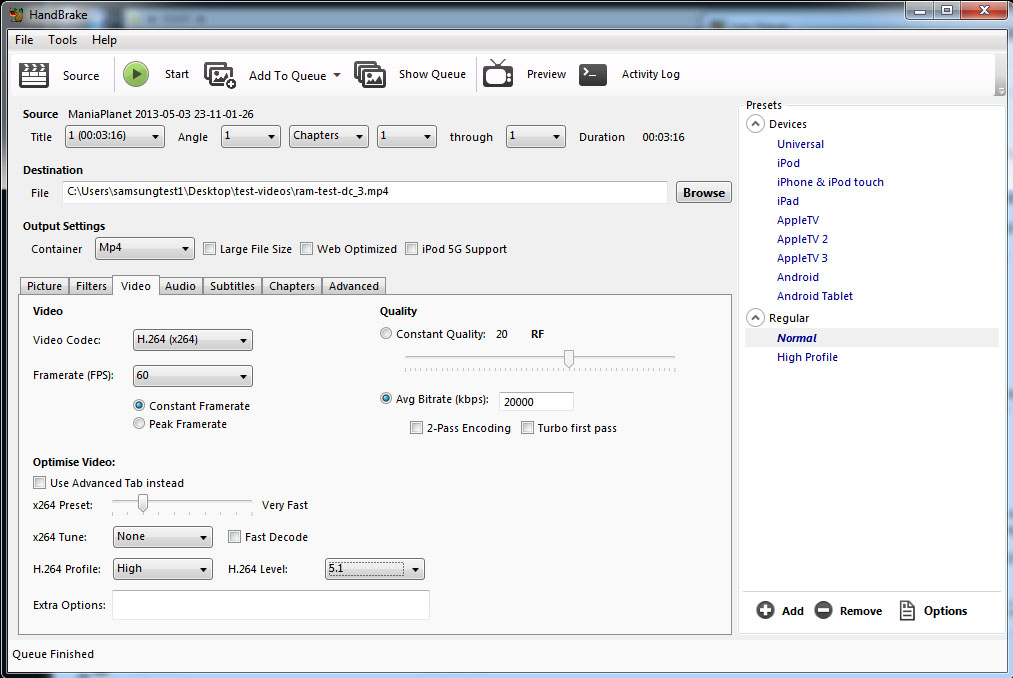

Handbrake, Audacity, & Cinebench

These three just get quick mentions. Handbrake is a multithreaded transcoding program that has one video format / codec as input and another as output. We put a 9GB AVI recorded with FRAPS into Handbrake and spat out an h.264 (5.1 high profile @ 1080) MP4 at a continuous 60FPS with a 20Mbps datarate. In theory, this is CPU- and memory-intensive and will see the benefits of faster memory.

Audacity gets a small, honorable mention. I thought that using a 4096Kbps high-quality WAV file (recorded with our field reporting equipment) and asking LAME to convert it to MP3 would be pretty abusive, but it wasn't. The output times were almost identical -- within 0.5% of each other. I do not feel comfortable publishing the results in an official fashion, but both single- and dual-channel performance hovered around 18.5 seconds for processing time. I feel like audio compression or conversion could be pretty intensive on memory, but I am not enough of an audio expert to know without more extensive research.

Cinebench is a pretty fun synthetic test to use. We relied upon the OpenGL benchmark, since that was the only one that would have any amount of impact from RAM differences. It runs a video and logs the FPS - pretty straight-forward stuff. This is more dependent upon the GPU and CPU than anything, but had some RAM variability.

Impact of Memory Speed on Adobe After Effects Live Preview & Premiere Encoding

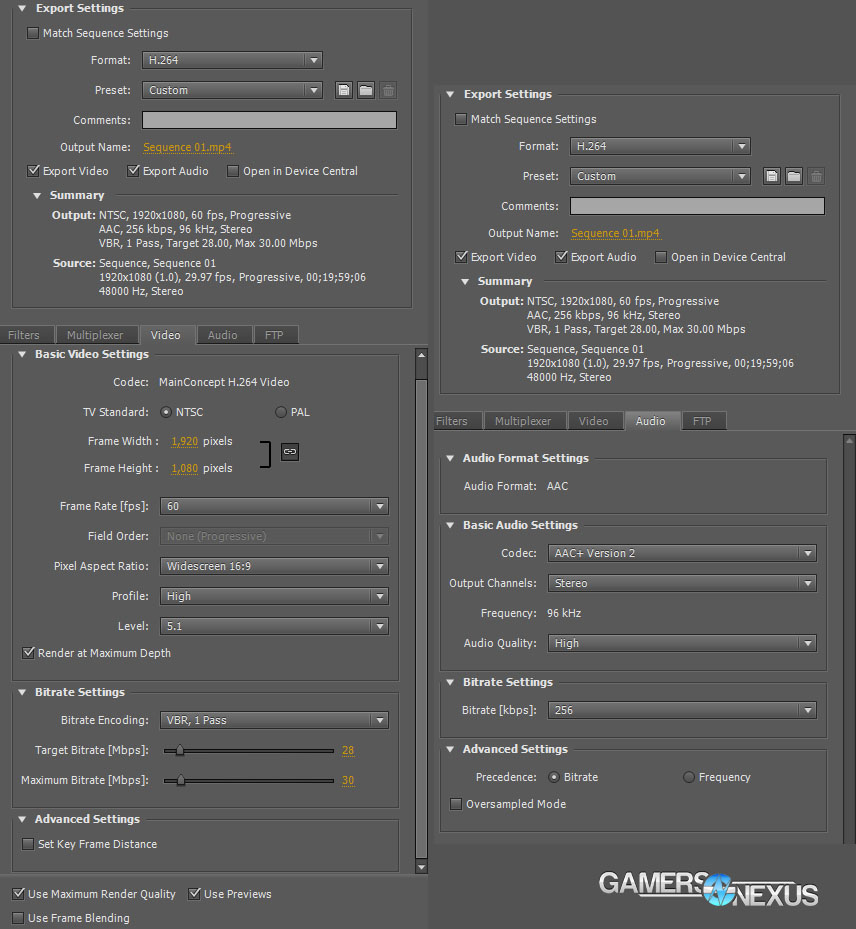

I'm responsible for maintaining our entire YouTube channel here at GamersNexus (and do a good deal of hobbyist mountain bike video editing in my free time), so the video editing tests were of most interest to me. Premiere is an easy one. The test consisted of the following:

We rendered out a complex 10s clip that featured four 1080@60p videos shot at 24- & 28Mbps. The clips had color correction and other post-processing effects applied and were rendered at maximum depth.

To test the performance of dual-channel vs. single-channel memory configurations with After Effects, we put together another 4x1080@60p set of videos (down-scaled into quadrants) at 20-28Mbps. Two of the videos had color and exposure correction effects applied. We then previewed the video with RAM (RAM Preview) to check for RAM Preview stuttering or low framerates (expected). A video camera was set up to monitor the RAM Preview info panel for live FPS metrics. We used an external video camera so the results would be independent of the screen capture. You can view the test in video form on page 1.

Testing Platform

We have a brand new test bench that we assembled for the 2013-2014 period! Having moved away from our trusty i7-930 and GTX 580, the new bench includes the below components:

| GN Test Bench 2013 | Name | Courtesy Of | Cost |
| --- | --- | --- | --- |
| Video Card | XFX Ghost 7850 | GamersNexus | ~$160 |
| CPU | Intel i5-3570k CPU | GamersNexus | ~$220 |
| Memory | 16GB Kingston HyperX Genesis 10th Anniv. @ 2400MHz | Kingston Tech. | ~$117 |
| Motherboard | MSI Z77A-GD65 OC Board | GamersNexus | ~$160 |
| Power Supply | NZXT HALE90 V2 | NZXT | Pending |
| SSD | Kingston 240GB HyperX 3K SSD | Kingston Tech. | ~$205 |
| Optical Drive | ASUS Optical Drive | GamersNexus | ~$20 |
| Case | NZXT Phantom 820 | NZXT | ~$220 |
| CPU Cooler | Thermaltake Frio Advanced Cooler | Thermaltake | ~$60 |

For this test, the CPU was configured at 4.2GHz (instead of our usual 4.4GHz) with a vCore of 1.265V. The RAM was set to 2400MHz and run in bank 0 or mismatched banks.

Please continue to the final page to view the dual-channel vs. single channel memory platform benchmark results.

Test Results: Dual-Channel vs. Single-Channel RAM Platforms - Synthetic

Let's start with synthetic multi-channel platform tests and then move into real-world stuff. All of these tests were defined, explained, and detailed in their use-case scenarios and methodologies on the previous page. Please read that page before asking questions or making claims about the below tests. It is very well thought-out and probably addresses your question directly.

Euler 3D

We saw a fairly substantial performance difference between single- and dual-channel configurations with Euler 3D. The dual-channel configuration performed approximately 17.7% better than the single-channel configuration. This advantage will carry over in simulation applications, especially when dealing with CFD or similarly heavy-duty computations, simulations, and compilers. Engineers and scientists will theoretically benefit here (see: Signal Integrity, parametric analysis, crosstalk analysis, electromagnetics simulation, etc.).

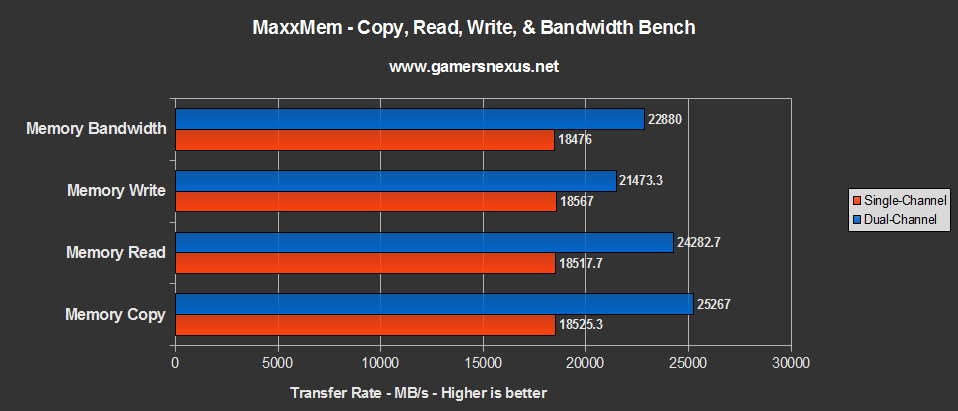

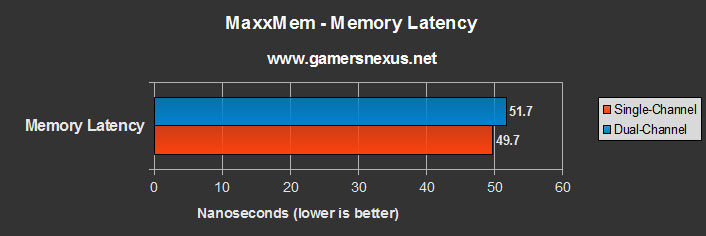

MaxxMem

MaxxMem measures memory copy, memory read, memory write, memory latency, and memory bandwidth performance. The MemCP, MemRD, and MemWR stats are measured in MB/s (megabytes, not megabits); latency is measured in nanoseconds (ns); bandwidth is measured in GB/s, though I've converted it to MB/s for simplified charts.

We saw substantial performance differences between single- and dual-channel configurations in this testing. Memory copy, read, and write tests were heavily-advantaged with the dual-channel configuration, performing 30.79%, 26.94%, and 14.52% better in each, respectively. The total memory bandwidth also rested 21.3% higher than single-channel -- again, pretty substantial. Latency with dual-channel was consistently higher, perhaps due to additional overhead.

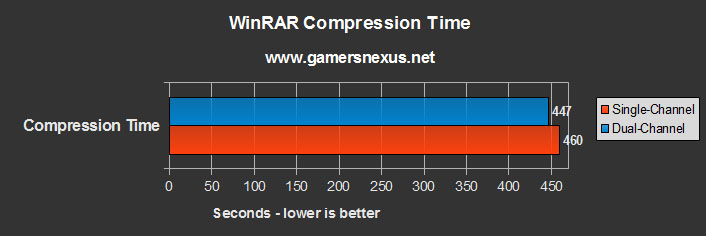

WinRAR File Compression

With our WinRAR archive benchmark, we saw single-channel take 460s (7m 40s) to compress the 9GB data archive; meanwhile, the dual-channel platform performed ~2.87% faster at 447s (7m 27s). This difference could potentially be fairly substantial in specific real-world environments (think: enterprise) where heavy, constant file archival is being performed. Web & db servers are an excellent real-world example where you'd want that 2.87% speed boost.

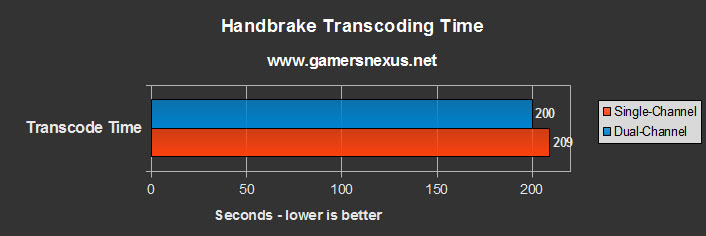

Handbrake Video Transcoding

I defined our Handbrake settings in the previous page, so check that if you're curious about what's actually being tested. We saw a consistent 4.4% advantage in the favor of dual-channel platforms while transcoding files with Handbrake. The single-channel platform took 209s (3m 29s) vs. the dual-channel platform's 200s (3m 20s). Extrapolating this to a larger, more complex transcoding task, the impact could be relatively noteworthy. I don't think most users do that level of heavy-duty passes on their video ripping / transcoding, though.

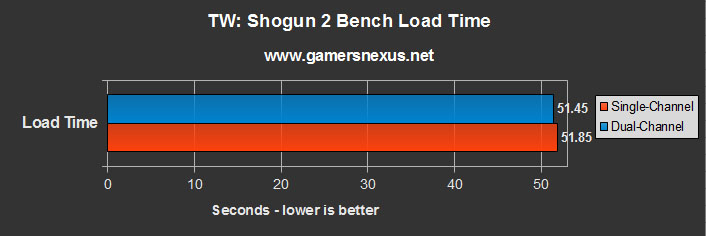

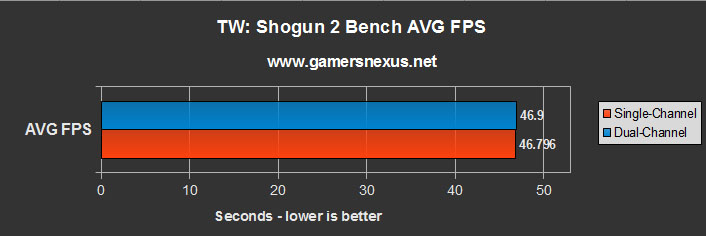

Shogun 2 Benchmark Load Time & FPS

I really didn't expect a lot here, but that's sort of what I set out to illustrate. These tests are totally uninteresting. The load time was about 0.78% faster with dual-channel platforms, but this is well within margin of error. For point of clarity, the results averaged were:

Single-Channel: 51.82, 51.78, 51.98, 51.83.

Dual-Channel: 51.76, 51.45, 50.41, 52.17.

Like I said, easily within normal system fluctuations. I also tested Skyrim's load time with several high-fidelity mods loaded, which should have theoretically hammered RAM and I/O for file retrieval, but saw effectively zero advantage between dual- and single-channel performance.
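The "within margin of error" call can be checked directly from the four runs listed above. The sketch below assumes the values are load times in seconds and assumes the delta is a symmetric percent difference (gap divided by the mean of the two averages). The formula isn't stated in the text, but that one reproduces the ~0.78% figure.

```python
from statistics import mean

# Runs as published above (assumed to be load times in seconds).
single_channel = [51.82, 51.78, 51.98, 51.83]
dual_channel = [51.76, 51.45, 50.41, 52.17]

single_avg = mean(single_channel)
dual_avg = mean(dual_channel)

# Gap between the two averages, in absolute and percentage terms.
gap = single_avg - dual_avg
delta_percent = gap / mean([single_avg, dual_avg]) * 100

# Run-to-run spread inside a single configuration.
dual_spread = max(dual_channel) - min(dual_channel)

if __name__ == "__main__":
    print(f"single avg {single_avg:.2f}, dual avg {dual_avg:.2f}")
    print(f"gap {gap:.2f} ({delta_percent:.2f}%), dual run-to-run spread {dual_spread:.2f}")
```

The dual-channel runs alone vary by about 1.76 between best and worst, while the gap between the two averages is only about 0.4. A difference smaller than the noise inside one configuration is not something to draw conclusions from.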

The FPS results are even less interesting. A 0.21% delta between the channel performance is within margin of error, once again, and can be effectively thought of as 0 noticeable difference.

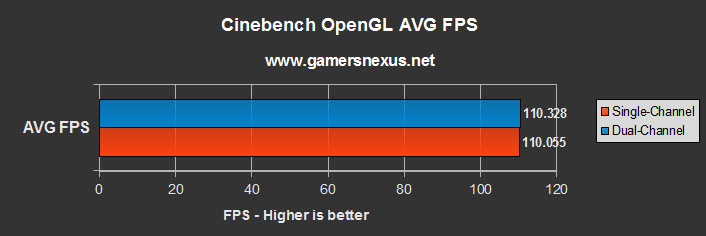

Cinebench OpenGL Performance

Cinebench, like Shogun, wasn't really added to show a delta as much as it was added to illustrate a point: That multi-channel memory platforms have very little impact on specific tasks, like gaming and some types of live rendering. The delta between single- and dual-channel configurations was 0.25% in favor of single-channel.

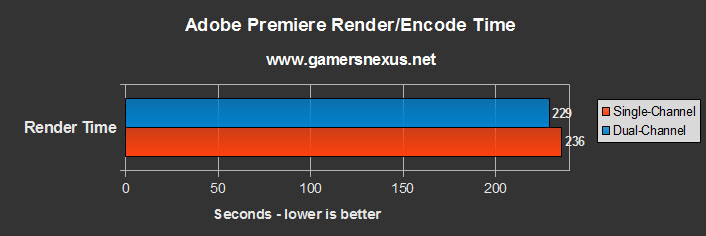

Adobe Premiere Encoding Pass

Here's where it gets a bit more interesting. Our single-channel encoding pass results averaged out to around 236s (3m 56s); dual-channel averaged out at 229s (3m 49s), for a delta of about 3.01% in favor of dual-channel memory configs. Not massive, but for people who dedicate tremendous time doing rendering, it could be a big difference. Still, that's only a 2-minute gain per hour of rendering downtime. I often have systems rendering for 20 hours per day during conventions, so that could be upwards of 40 minutes saved, which starts to get significant.
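The per-hour and per-day figures follow from the two averages with simple arithmetic. The sketch below assumes the time saved scales linearly with render length, which is the same extrapolation made above; the function name is invented for illustration.

```python
def minutes_saved(single_seconds, dual_seconds, hours_of_rendering):
    """Minutes saved by dual-channel over a given span of single-channel render time.

    Assumption: the ratio measured on the short test clip holds for long renders.
    """
    saved_fraction = (single_seconds - dual_seconds) / single_seconds
    return saved_fraction * hours_of_rendering * 60


if __name__ == "__main__":
    print(f"per hour:     {minutes_saved(236, 229, 1):.1f} minutes")
    print(f"per 20 hours: {minutes_saved(236, 229, 20):.1f} minutes")
```

That comes to roughly 1.8 minutes per hour and about 36 minutes over a 20-hour day, in line with the rounded "2-minute" and "upwards of 40 minutes" figures.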

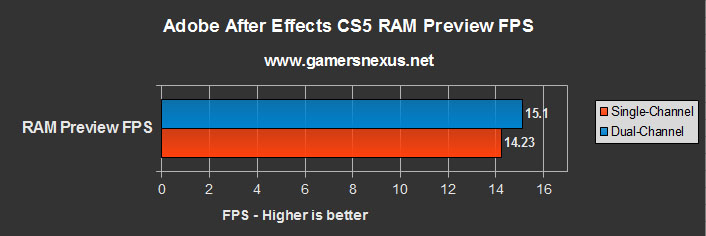

Adobe After Effects Live RAM Preview Framerate Performance

We saw nearly a 6% difference (5.94%) between RAM previews with single- and dual-channel RAM in Adobe After Effects, favoring dual-channel configurations. This starts to become somewhat noticeable. The average FPS of live playback with the single-channel platform was 14.227; the average FPS of live playback with the dual-channel platform was 15.098. Larger differences might be spotted under some specific test conditions, but it ultimately depends on what you're doing in AE. It certainly wasn't real-time (60FPS), but a single frame per second can feel like a big difference when you're staring at this stuff for days on end.
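For anyone replicating these results, the published deltas can be recomputed from the published averages. The formula below is an assumption: the article doesn't spell out how its percentages were derived, but a symmetric percent difference (gap divided by the mean of the two values) matches every figure in this section.

```python
def percent_difference(a, b):
    """Symmetric percent difference: the gap relative to the mean of both values."""
    return abs(a - b) / ((a + b) / 2) * 100


# (single-channel, dual-channel, delta as published in the results above)
published = {
    "WinRAR (s)": (460, 447, 2.87),
    "Handbrake (s)": (209, 200, 4.4),
    "Premiere (s)": (236, 229, 3.01),
    "After Effects (FPS)": (14.227, 15.098, 5.94),
}

if __name__ == "__main__":
    for name, (single, dual, stated) in published.items():
        computed = percent_difference(single, dual)
        print(f"{name:<20} computed {computed:.2f}%  published {stated}%")
```

Using the single-channel result as the baseline instead would shift each figure slightly (After Effects would read about 6.1%, for instance), so it's worth using the same formula when comparing your own numbers against these.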

The Verdict: What Do These Results Mean; Is Dual-Channel "Worth It?"

That was a helluvalot of testing and methodology discussion, but I hope it was for a good cause - I wanted to ensure there were no questions about how we performed these tests. More importantly, I wanted to ensure that others can replicate and add to my results. If you're running this yourself for some specific game or program that I didn't test, please feel free to list your specs and software tested below!

Despite all that I thought I knew leading up to our MSI meeting last July, dual-channel just isn't necessary for the vast majority of the consumer market. Anyone doing serious simulation (CFD, parametric analysis) will heavily benefit from dual-channel configurations (~17.7% advantage). Users who push a lot of copy tasks through memory will also theoretically see benefits, depending on what software is controlling the tasking. Video editors and professionals will see noteworthy advantages in stream (RAM) previews and will see marginal advantages in render time. It is probably worth having in this instance -- at the very least, I'd always go dual-channel for editing / encoding if only for future advancements.

Gamers, mainstream users, and office users shouldn't care. Actually, at the end of the day, the same rule applies to everyone, simulation pro or not: It's density and frequency that matter, not channeling. Quad- and better channels theoretically have a more profound impact, but this is in-step with the increased density of kits that are targeted for quad-channel platforms. If you want to push speed, density and frequency should be at the top of your list. Generally, when you're spending that kind of money, you're going with a multi-channel kit of two or more anyway, but the point still stands.

I'd love to test the real-world impact of dual-vs.-single-channel memory configs on a server platform, but that starts exiting my realm of expertise and would require extensive research to feel confident in. If any of you are knowledgeable in the virtualization or server spaces, please let us know below if you think we'd see a bigger impact in those worlds.

As for whether it's "worth it" to get a kit of two, the answer is generally going to be yes -- but primarily because it's rare not to find a good deal with two sticks. If you're on a budget or an ultra-budget and are trying to spare every $5 or $10 you can, then perhaps grab a single stick of RAM. It feels so wrong saying that, but we have to trust the results of this test, and the results say that it simply doesn't matter for those types of users. Anyone building a ~$500 or cheaper system shouldn't spend the time of day being concerned about 2x4GB vs. 1x8GB as long as the price works out in their favor. Price is the biggest factor here, and with recent fluctuations, you're just going to have to check the market when you're buying.

This was a big undertaking. We normally don't go through such depth to detail test methodology. Please provide some feedback below if you'd like to see similar depth in the future. If you think it's too much and would rather we get to the results faster, let us know about that, too.

- Steve "Lelldorianx" Burke.