NVidia’s Titan Xp 2017 model video card was announced without any pre-briefing for us, making it the second recent Titan X model card that took us by surprise on launch day. The Titan Xp, as it turns out, isn’t necessarily targeted at gaming – though it does still bear the GeForce GTX mark. NVidia’s Titan Xp follows the previous Titan X (which we called “Titan XP” to reduce confusion with the Maxwell-based Titan X before it), and knocks the Titan X 2016 out of its $1200 price bracket.

With the Titan Xp 2017 now firmly socketed into the $1200 category, there’s a $450-$500 gap between it and the GTX 1080 Ti at $700 MSRP ($750 common price). Even with a gap that large, though, diminishing returns in gaming or consumer workloads are to be expected. Today, we’re benchmarking and reviewing the nVidia Titan Xp for gaming specifically, with additional thermal, power, and noise tests included. This card may be better deployed for neural net and deep learning applications, but that won’t stop enthusiasts from buying it simply to have “the best.” For them, we’d like to have some benchmarks online.

NVidia Titan Xp 2017 Specs vs. GTX 1080 Ti

| | Tesla P100 | Titan Xp 2017 | GTX 1080 Ti | GTX 1080 | GTX 1070 |
|---|---|---|---|---|---|
| GPU | GP100 Cut-Down Pascal | GP102-450 | GP102-350-K1 | GP104-400 Pascal | GP104-200 Pascal |
| Transistor Count | 15.3B | 12B | 12B | 7.2B | 7.2B |
| Fab Process | 16nm FinFET | 16nm FinFET | 16nm FinFET | 16nm FinFET | 16nm FinFET |
| CUDA Cores | 3584 | 3840 | 3584 | 2560 | 1920 |
| GPCs | 6 | 6 | 6 | 4 | 3 |
| SMs | 56 | 30 | 28 | 20 | 15 |
| TPCs | 28 | 30 | 28 | 20 | 15 |
| TMUs | 224 | 240 | 224 | 160 | 120 |
| ROPs | 96 (?) | 96 | 88 | 64 | 64 |
| Core Clock | 1328MHz | 1481MHz | 1481MHz | 1607MHz | 1506MHz |
| Boost Clock | 1480MHz | 1582MHz | 1582MHz | 1733MHz | 1683MHz |
| FP32 TFLOPs | 10.6TFLOPs | 12.1TFLOPs (1/32 FP64) | ~11.3TFLOPs | 9TFLOPs | 6.5TFLOPs |
| Memory Type | HBM2 | GDDR5X | GDDR5X | GDDR5X | GDDR5 |
| Memory Capacity | 16GB | 12GB | 11GB | 8GB | 8GB |
| Memory Clock | ? | 11.4Gbps | 11Gbps | 10Gbps | 4006MHz (~8Gbps effective) |
| Memory Interface | 4096-bit | 384-bit | 352-bit | 256-bit | 256-bit |
| Memory Bandwidth | ? | ~547.2GB/s | ~484GB/s | 320.32GB/s | 256GB/s |
| Total Power Budget ("TDP") | 300W | 250W | 250W | 180W | 150W |
| Power Connectors | ? | 1x 8-pin, 1x 6-pin | 1x 8-pin, 1x 6-pin | 1x 8-pin | 1x 8-pin |
| Release Date | 4Q16-1Q17 | 04/06/17 | 3/10/17 | 5/27/2016 | 6/10/2016 |
| Release Price | - | $1,200 | $700 | $700 (Reference), $600 (MSRP), now $500 | $450 (Reference), $380 (MSRP) |

Above is the specs table for the Titan Xp and the GTX 1080 Ti (with the Tesla P100, GTX 1080, and GTX 1070 included for reference), helping compare the differences between nVidia’s two FP32-focused flagships.
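The FP32 and bandwidth rows can be cross-checked against the rest of the table. The sketch below is a back-of-envelope calculation, not nVidia’s own methodology: it assumes the common convention of two FP32 operations (one fused multiply-add) per CUDA core per clock at the rated boost clock, and treats peak bandwidth as per-pin data rate times bus width. It uses 11.4Gbps for the Titan Xp’s GDDR5X, the per-pin rate that yields ~547.2GB/s on a 384-bit bus.

```python
# Back-of-envelope peak FP32 throughput and memory bandwidth from spec-table values.
# Assumption: 2 FP32 ops (one fused multiply-add) per CUDA core per clock at boost.

def fp32_tflops(cuda_cores: int, boost_mhz: float) -> float:
    """Peak FP32 TFLOPs = cores x 2 ops per clock x clock in Hz, scaled to tera."""
    return cuda_cores * 2 * boost_mhz * 1e6 / 1e12


def bandwidth_gbs(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak bandwidth in GB/s = per-pin data rate (Gbps) x bus width / 8 bits per byte."""
    return data_rate_gbps * bus_width_bits / 8


# name: (CUDA cores, boost clock MHz, memory data rate in Gbps per pin, bus width in bits)
CARDS = {
    "Titan Xp 2017": (3840, 1582, 11.4, 384),
    "GTX 1080 Ti": (3584, 1582, 11.0, 352),
    "GTX 1080": (2560, 1733, 10.0, 256),
}

for name, (cores, boost, rate, bus) in CARDS.items():
    print(f"{name}: {fp32_tflops(cores, boost):.1f} TFLOPs, "
          f"{bandwidth_gbs(rate, bus):.1f} GB/s")
```

This puts the Titan Xp at about 12.1 TFLOPs and 547.2GB/s, and the 1080 Ti at about 11.3 TFLOPs and 484GB/s. Real-world clocks under GPU Boost vary, so these are ceilings, not sustained figures.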

Clarifying Branding: GeForce GTX on Titan Xp Card

The initial renders of nVidia’s Titan Xp led us to believe that the iconic “GeForce GTX” green text wouldn’t be present on the card, a belief further reinforced by the lack of “GeForce GTX” in the actual name of the product. Turns out, it’s still marked with the LED-backlit green text. If you’re curious about whether a card is actually a Titan Xp card, the easiest way to tell would be to look at the outputs: TiXp (2017) does not have DVI, while Titan X (Pascal, 2016) does have DVI out. Further, the Titan Xp 2017 model uses a GP102-450 GPU, whereas Titan X (2016) uses a GP102-400 GPU.

A reader of ours, Grant, was kind enough to loan us his Titan Xp for review and inevitable conversion into a Hybrid mod (Part 1: Tear-Down is already live). Grant will be using the Titan Xp for neural net and machine learning work, two areas where we admittedly have near-zero experience; we’re focused on gaming, clearly. We took the opportunity to ask Grant why someone in his field might prefer the TiXp to a cheaper 1080 Ti, or perhaps to SLI 1080 Ti cards. Grant said:

“Data sizes vary and the GPU limits are based on data size and the applied algorithm. A simple linear regression can be done easily on most GPUs, but when it comes to convolutional neural networks, the amount of math is huge. I have a 4 gig data set that cannot run its CNN on the 1080 Ti, but it can do it on the Titan X.

Also, for us, CUDA cores matter a lot. And the good machine learning algorithms, even Google's tensorflow, need CUDAs and nVidia-specific drivers to use GPU.

SLI does not do anything for us. Multiple GPUs can be used to split-up data sets and run them in parallel, but it's tricky as hell with neural networks. With multiple cards, it's better for us to run one algorithm on one card and another algo on the next card.

Even Google created their own version of a GPU for deep learning that can be farmed much better than any nVidia option.”

According to this user, at least, the extra 1GB of VRAM on the TiXp is beneficial to the workload at hand, to the point that a 1080 Ti wouldn’t even execute the task. This may explain part of why the 1080 Ti has such an odd 11GB memory pool: yes, it follows directly from the GPU’s narrower 352-bit bus, but there may be a reason behind that cut. NVidia might have wanted to keep machine learning-class users on Titan-level hardware, rather than splitting supply of the 1080 Ti between audiences.
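The odd capacity falls straight out of the bus width. As a sketch, assuming each GDDR5X package exposes a 32-bit interface and that these cards use 1GB (8Gb) packages, the package count and total capacity follow from the bus width alone:

```python
# Each GDDR5X package exposes a 32-bit interface, so the bus width fixes the number
# of packages on the board; capacity then follows from the per-package density.
BITS_PER_PACKAGE = 32  # GDDR5X device interface width
GB_PER_PACKAGE = 1     # assumption: 8Gb (1GB) packages, as on these cards


def memory_layout(bus_width_bits: int) -> tuple[int, int]:
    """Return (package count, total capacity in GB) for a given bus width."""
    if bus_width_bits % BITS_PER_PACKAGE:
        raise ValueError("bus width must be a multiple of 32 bits")
    packages = bus_width_bits // BITS_PER_PACKAGE
    return packages, packages * GB_PER_PACKAGE


for name, bus in [("Titan Xp", 384), ("GTX 1080 Ti", 352)]:
    packages, capacity = memory_layout(bus)
    print(f"{name}: {bus}-bit bus -> {packages} x 1GB = {capacity}GB")
```

Disabling one 32-bit memory controller channel therefore removes exactly one package, which is how the 1080 Ti lands at 11GB rather than 12GB.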

Whatever the reason for the Titan Xp, we’re testing it for gaming, because people will still buy it for gaming. The extra 1GB VRAM is irrelevant in our use case, but we’ve still got potential performance gains from other differences.

NVidia Titan Xp Tear-Down

Our tear-down for the Titan Xp is already live, seen here:

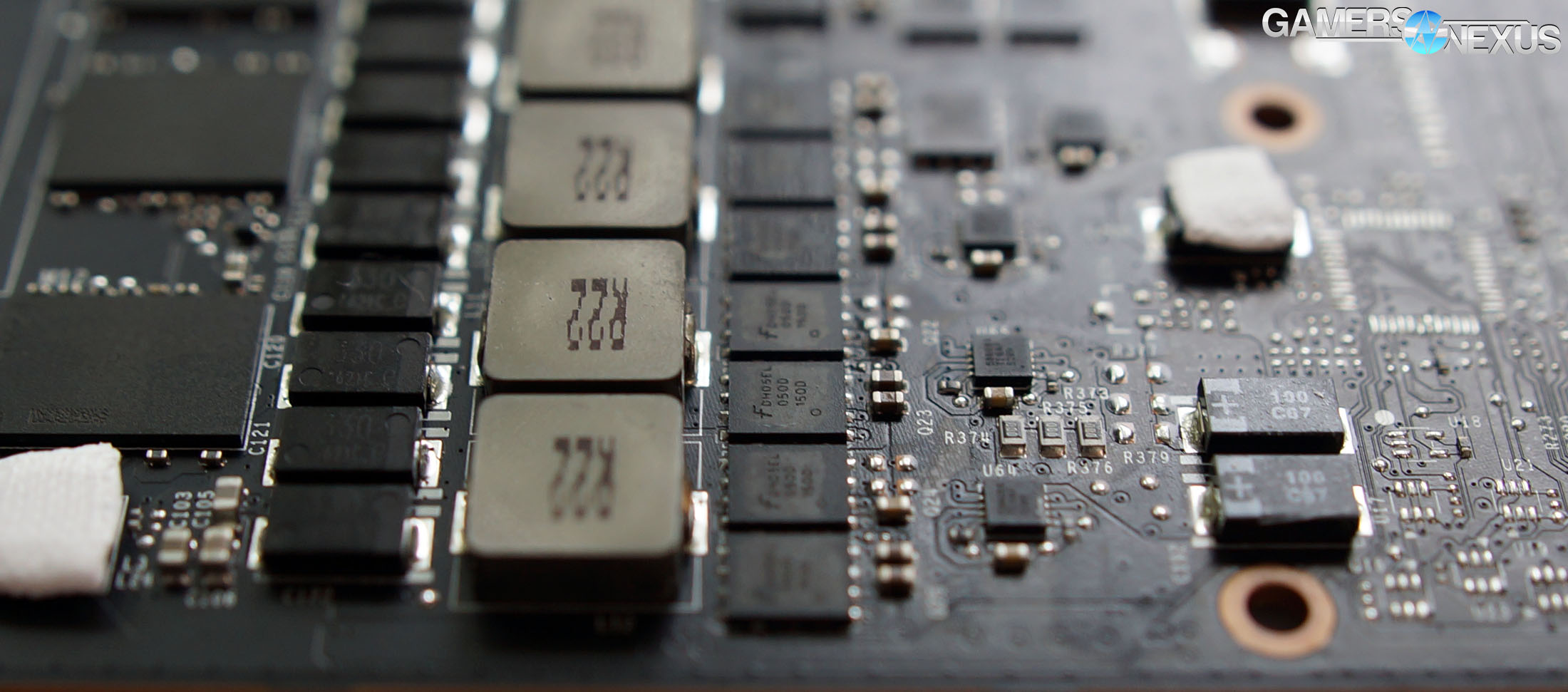

The card is basically a GTX 1080 Ti FE card. We’d recommend checking out our PCB & VRM component-level analysis of the GTX 1080 Ti FE card for more information on the Titan Xp, seeing as they’re mostly the same board.

The cooler leverages the same nVidia blower-style layout found on FE cards. There’s an aluminum heatsink with a nickel-plated copper coldplate and vapor chamber for direct GPU contact, with the aluminum baseplate handling the bulk of the heat transfer needs for the power components. The blower fan takes care of both the VRM and GPU (and VRAM), and we measured several power and VRAM components during testing to better understand the thermal behavior of the product.

Overclock Stepping Table

Here’s our overclock progression table, including pass/fail for each step. The last numbers marked as “pass” will be used during benchmarking:

| Peak Clock (MHz) | AVG Clock (MHz) | Core Offset (MHz) | MEM CLK (MHz) | MEM Offset (MHz) | Power Target (%) | Voltage (V) | Fan (RPM) | Temp (C) | Pass/Fail |
|---|---|---|---|---|---|---|---|---|---|
| 1810 | 1759 | 0 | 1425.6 | 0 | 100 | 0.93 | 2950 | 74 | P |
| 1835 | 1785 | 0 | 1425.6 | 0 | 120 | 0.98 | 2950 | 80 | P |
| 1873 | 1840 | 100 | 1425.6 | 0 | 120 | 0.98 | 2950 | 85 | P |
| 1924 | 1898 | 150 | 1425.6 | 0 | 120 | 0.98 | 3400 | 81 | P |
| 1962 | 1940 | 200 | 1425.6 | 0 | 120 | 0.98 | 3400 | 80 | P |
| - | - | 250 | 1425.6 | 0 | 120 | 0.98 | 3400 | 80 | F |
| 2076 | 1974 | 225 | 1425.6 | 0 | 120 | 0.98 | 3400 | 70 | F |
| 2114 | 1974 | 225 | 1425.6 | 0 | 120 | 1.08 | 3400 | 80 | F |
| 1974 | 1949 | 200 | 1475 | 200 | 120 | 0.98 | 3400 | 80 | P |
| 1974 | 1949 | 200 | 1539 | 450 | 120 | 0.98 | 3400 | 80 | P |
| 1974 | 1949 | 200 | 1553 | 500 | 120 | 0.98 | 3400 | 80 | P |
| 1974 | 1936 | 200 | 1575 | 600 | 120 | 0.98 | 3400 | 80 | F |
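To make the selection rule concrete, the sketch below transcribes the table, picks the last row marked pass, and estimates the memory bandwidth that step implies. It assumes the MEM CLK column is the GDDR5X clock as reported by monitoring software, where the effective per-pin data rate is 8× the reported figure (1425.6MHz × 8 ≈ 11.4Gbps, consistent with the Titan Xp’s ~547GB/s on a 384-bit bus). The helper names are hypothetical.

```python
# Pick the last passing step from the overclock table and estimate its memory bandwidth.
# Assumption: MEM CLK is the GDDR5X clock as reported by monitoring tools, with an
# effective per-pin data rate of 8x that value.
from typing import NamedTuple


class Step(NamedTuple):
    core_offset: int   # MHz
    mem_clk: float     # MHz, as reported
    mem_offset: int    # MHz, as entered in the OC tool
    passed: bool


STEPS = [
    Step(0, 1425.6, 0, True), Step(0, 1425.6, 0, True),
    Step(100, 1425.6, 0, True), Step(150, 1425.6, 0, True),
    Step(200, 1425.6, 0, True), Step(250, 1425.6, 0, False),
    Step(225, 1425.6, 0, False), Step(225, 1425.6, 0, False),
    Step(200, 1475, 200, True), Step(200, 1539, 450, True),
    Step(200, 1553, 500, True), Step(200, 1575, 600, False),
]


def last_passing(table: list[Step]) -> Step:
    """Return the final row marked pass; later failed rows don't override it."""
    return [s for s in table if s.passed][-1]


def bandwidth_gbs(mem_clk_mhz: float, bus_width_bits: int = 384) -> float:
    data_rate_gbps = mem_clk_mhz * 8 / 1000  # effective per-pin rate
    return data_rate_gbps * bus_width_bits / 8


best = last_passing(STEPS)
stock, oc = bandwidth_gbs(1425.6), bandwidth_gbs(best.mem_clk)
print(f"Benchmark OC: +{best.core_offset} core, +{best.mem_offset} memory")
print(f"Bandwidth: {stock:.1f} -> {oc:.1f} GB/s (+{(oc / stock - 1) * 100:.1f}%)")
```

Under that assumption, the +500 memory offset works out to roughly 596GB/s, about 9% more bandwidth than stock.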

All testing for this review was conducted prior to tear-down (other than where thermocouples were required, obviously). The Hybrid mod results will be posted separately.

Let’s get into the testing.


GPU Testing Methodology

For our benchmarks today, we’re using a fully rebuilt GPU test bench for 2017. This is our first full set of GPUs for the year, giving us an opportunity to move to an i7-7700K platform that’s clocked higher than our old GPU test bed. For all the excitement that comes with a new GPU test bench and a clean slate to work with, we also lose some information: our old GPU results are not comparable to these, as the numbers, testing methodology, game settings, and game selection are all new. DOOM, for instance, now has a new test methodology behind it. We’ve moved to Ultra graphics settings with 0xAA and async enabled, also dropping OpenGL entirely in favor of Vulkan + more Dx12 tests.

We’ve also automated a significant portion of our testing at this point, reducing manual workload in favor of greater focus on analytics.

Driver version 378.78 (press-ready drivers for 1080 Ti, provided by nVidia) was used for all nVidia devices except the Titan Xp, which used version 381. Version 17.10.1030-B8 (press drivers) was used for AMD.

Separate benches are used for game performance and thermal performance.

Thermal Test Bench

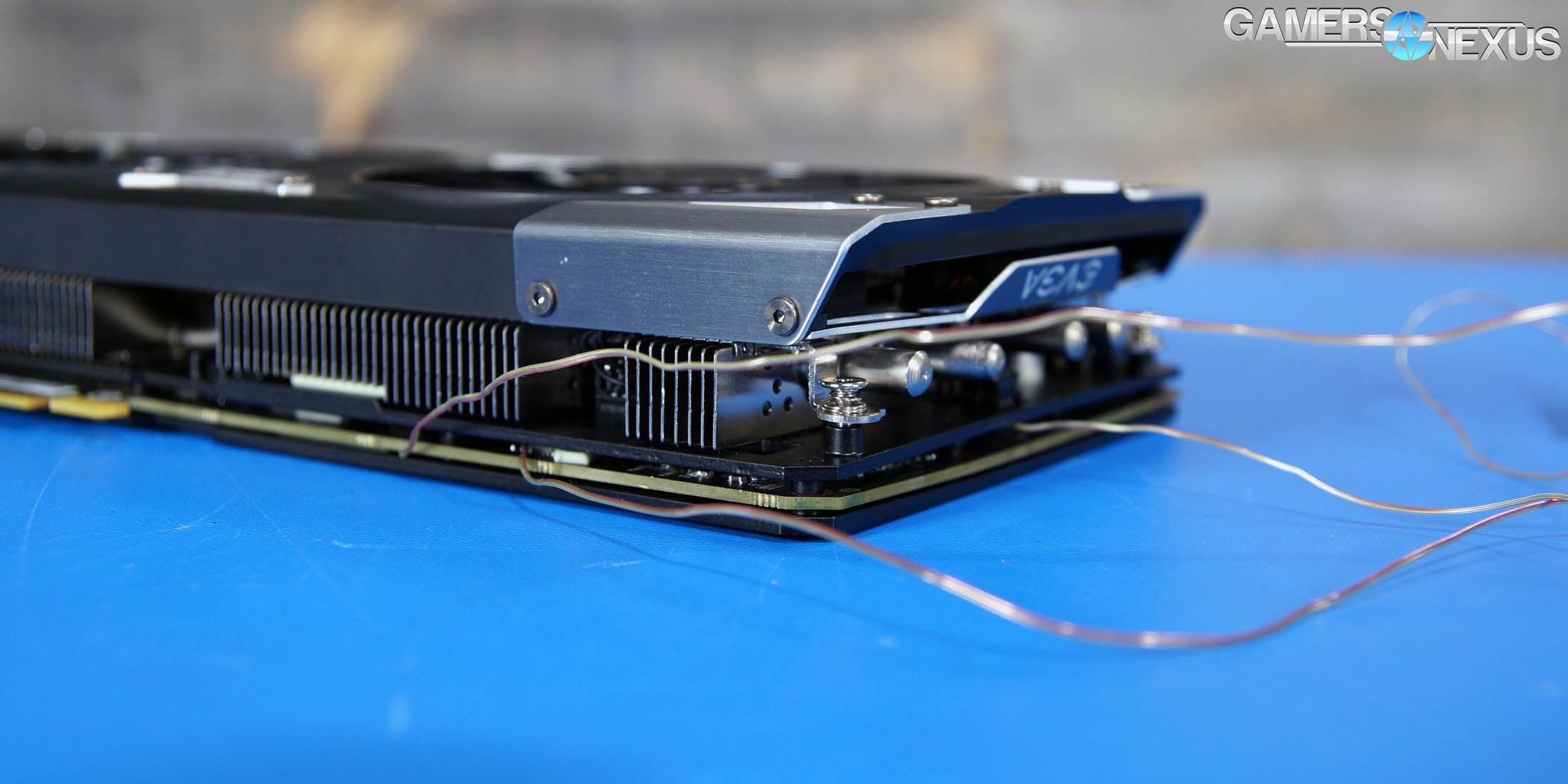

Our thermal test methodology is largely parallel to our EVGA VRM final torture test that we published late last year. We use logging software to monitor the NTCs on EVGA’s ICX card, with our own calibrated thermocouples mounted to power components for non-ICX monitoring. Our thermocouples use an adhesive pad that is 1/100th of an inch thick and does not interfere in any meaningful way with thermal transfer. The pad is a combination of polyimide and polymethylphenylsiloxane, and the thermocouple is a K-type hooked up to a logging meter. Calibration offsets are applied as necessary, with the exact same thermocouples used in the same spots for each test.

Torture testing used Kombustor's 'Furry Donut' testing, 3DMark, and a few games (to determine auto fan speeds under 'real' usage conditions, used later for noise level testing).

Our tests apply self-adhesive, 1/100th-inch thick (read: laser thin, does not cause "air gaps") K-type thermocouples directly to the rear side of the PCB and to hotspot MOSFETs #2 and #7 when counting from the bottom of the PCB. The thermocouples used are flat and self-adhesive (from Omega), as recommended by thermal engineers in the industry -- including Bobby Kinstle of Corsair, whom we previously interviewed.

K-type thermocouples have a known tolerance of approximately ±2.2C. We calibrated our thermocouples with an "ice bath," then a boiling water bath, which gave us the information required to understand and adjust results appropriately.

Because we have concerns about thermal conductivity and the impact of the thermocouple pad in its placement area, we selected the pads discussed above so that the test equipment does not interfere with cooler performance. Electrical conductivity is also a concern, as you don't want bare wire to cause an electrical short on the PCB. Fortunately, these thermocouples are not electrically conductive along the wire or placement pad, with the 30 AWG (~0.0100"⌀) wire using a PTFE coating. The thermocouples are 914mm long and connect into our dual logging thermocouple readers, which take second-by-second temperature measurements. We also log ambient temperature and apply an ambient modifier where necessary so that test passes are compared fairly.
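The exact ambient modifier isn’t specified here, so the following is one plausible approach rather than the method used for these tests: re-base each per-second reading against the ambient logged in the same second, then add back a fixed reference ambient so passes recorded in warmer or cooler rooms line up. `REFERENCE_AMBIENT_C` and the sample log are hypothetical.

```python
# One possible ambient modifier: convert each reading to a rise over the ambient logged
# in the same second, then re-base to a fixed reference ambient (hypothetical value).
from statistics import mean

REFERENCE_AMBIENT_C = 21.0


def normalize(component_c: list[float], ambient_c: list[float],
              reference_c: float = REFERENCE_AMBIENT_C) -> list[float]:
    """Re-base paired per-second readings to a fixed reference ambient."""
    if len(component_c) != len(ambient_c):
        raise ValueError("component and ambient logs must be the same length")
    return [t - a + reference_c for t, a in zip(component_c, ambient_c)]


# Hypothetical log: FET readings while room ambient drifts upward during the pass.
fet = [68.0, 69.1, 70.2]
amb = [21.5, 22.0, 22.5]
print(f"mean normalized FET temp: {mean(normalize(fet, amb)):.1f}C")
```

Pairing readings second by second, rather than subtracting one average ambient, keeps ambient drift during a long torture test from being mistaken for component heating.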

The response time of our thermocouples is 0.15s, with an accompanying resolution of 0.1C. The laminates are fiberglass-reinforced polymer layers, with junction insulation composed of polyimide and fiberglass. The thermocouples are rated for just under 200C, which is enough for any VRM testing (and if we go over that, something will probably blow, anyway).

To avoid EMI, we mostly guess-and-check placement of the thermocouples. EMI comes from the PCB’s power planes and from inductors. We were able to avoid electromagnetic interference by routing the thermocouple wiring to the right, toward the less populated half of the board, and then down. The cables exit the board near the PCI-e slot and avoid crossing inductors. This resulted in no observable or measurable EMI in the temperature readings.

The primary test platform is detailed below:

| GN Test Bench 2015 | Name | Courtesy Of | Cost |
|---|---|---|---|
| Video Card | This is what we're testing | - | - |
| CPU | Intel i7-5930K CPU 3.8GHz | iBUYPOWER | $580 |
| Memory | Corsair Dominator 32GB 3200MHz | Corsair | $210 |
| Motherboard | EVGA X99 Classified | GamersNexus | $365 |
| Power Supply | NZXT 1200W HALE90 V2 | NZXT | $300 |
| SSD | OCZ ARC100 Crucial 1TB | Kingston Tech. | $130 |
| Case | Top Deck Tech Station | GamersNexus | $250 |
| CPU Cooler | Asetek 570LC | Asetek | - |

Note also that we swap test benches for the GPU thermal testing, using instead our "red" bench with three case fans -- only one is connected (directed at CPU area) -- and an elevated standoff for the 120mm fat radiator cooler from Asetek (for the CPU) with Gentle Typhoon fan at max RPM. This is elevated out of airflow pathways for the GPU, and is irrelevant to testing -- but we're detailing it for our own notes in the future.

Game Bench

| GN Test Bench 2017 | Name | Courtesy Of | Cost |
|---|---|---|---|
| Video Card | This is what we're testing | - | - |
| CPU | Intel i7-7700K 4.5GHz locked | GamersNexus | $330 |
| Memory | GSkill Trident Z 3200MHz C14 | GSkill | - |
| Motherboard | Gigabyte Aorus Gaming 7 Z270X | Gigabyte | $240 |
| Power Supply | NZXT 1200W HALE90 V2 | NZXT | $300 |
| SSD | Plextor M7V Crucial 1TB | GamersNexus | - |
| Case | Top Deck Tech Station | GamersNexus | $250 |
| CPU Cooler | Asetek 570LC | Asetek | - |

BIOS settings include C-states completely disabled, with the CPU locked to 4.5GHz at 1.32V vCore. Memory is at XMP1.

We communicated with both AMD and nVidia about the new titles on the bench, and gave each company the opportunity to ‘vote’ for a title they’d like to see us add. We figure this will help even out some of the game biases that exist. AMD doesn’t make a big showing today, but will soon. We are testing:

- Ghost Recon: Wildlands (built-in bench, Very High; recommended by nVidia)

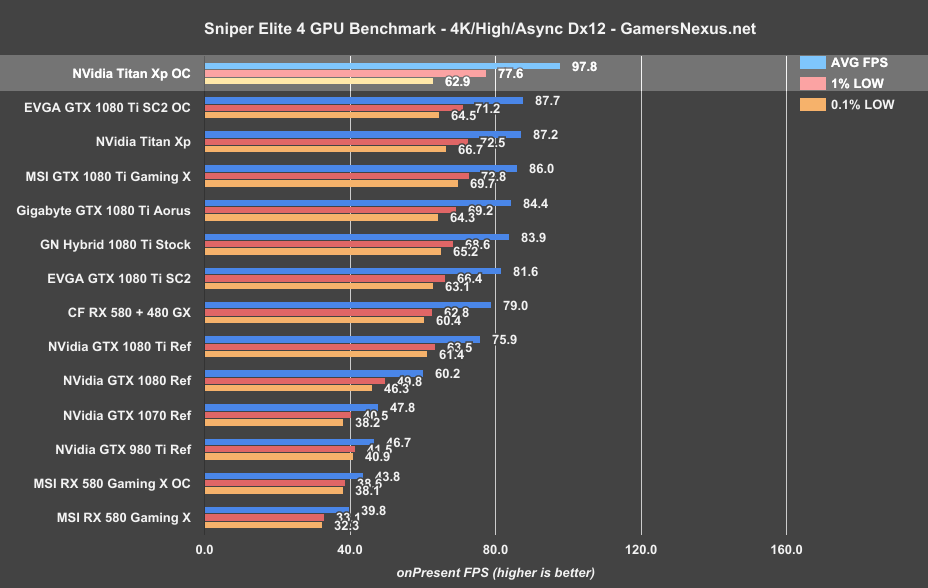

- Sniper Elite 4 (High, Async, Dx12; recommended by AMD)

- For Honor (Extreme, manual bench as built-in is unrealistically abusive)

- Ashes of the Singularity (GPU-focused, High, Dx12)

- DOOM (Vulkan, Ultra, 0xAA, Async)

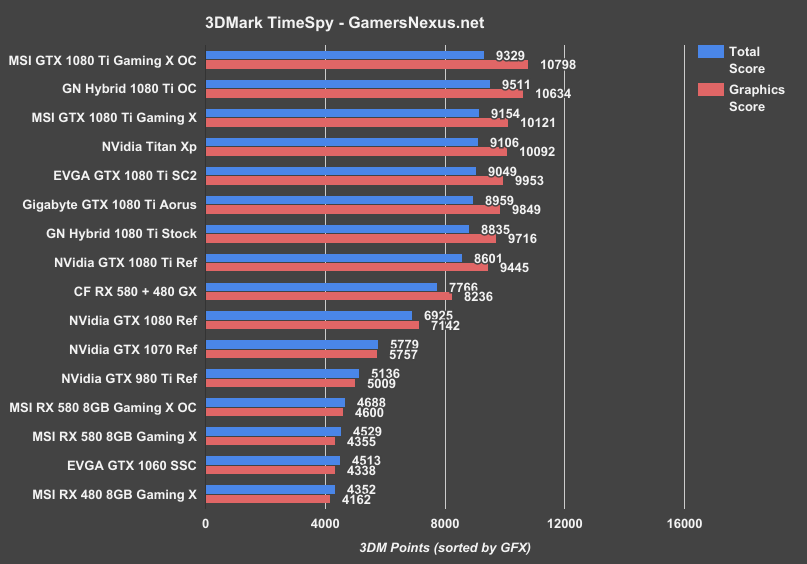

Synthetics:

- 3DMark FireStrike

- 3DMark FireStrike Extreme

- 3DMark FireStrike Ultra

- 3DMark TimeSpy

For measurement tools, we’re using PresentMon for Dx12/Vulkan titles and FRAPS for Dx11 titles. OnPresent is the preferred output for us, which is then fed through our own script to calculate 1% low and 0.1% low metrics (defined here).
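For readers unfamiliar with the lows, here’s a sketch of one common way to compute them from per-frame intervals, such as PresentMon’s `MsBetweenPresents` column: average the slowest 1% (or 0.1%) of frametimes and convert that to FPS. This illustrates the metric and is not our own script; some tools instead report the 99th-percentile frametime, which gives similar but not identical numbers.

```python
# Frame-pacing metrics from per-frame intervals in milliseconds.
# One common definition of "N% low": mean of the slowest N% of frametimes, as FPS.
import math


def avg_fps(frametimes_ms: list[float]) -> float:
    """Average FPS over the run: frames rendered divided by total elapsed time."""
    return len(frametimes_ms) / (sum(frametimes_ms) / 1000)


def low_fps(frametimes_ms: list[float], percent: float) -> float:
    """Average of the slowest `percent`% of frames, expressed as FPS."""
    worst_first = sorted(frametimes_ms, reverse=True)
    count = max(1, math.ceil(len(worst_first) * percent / 100))
    return 1000 / (sum(worst_first[:count]) / count)


# Synthetic run: mostly 16ms frames with a few 25ms hitches and one 50ms spike.
frames = [16.0] * 990 + [25.0] * 9 + [50.0]
print(f"AVG {avg_fps(frames):.1f}FPS, 1% low {low_fps(frames, 1):.1f}FPS, "
      f"0.1% low {low_fps(frames, 0.1):.1f}FPS")
```

Averaging frametimes before converting to FPS (rather than averaging per-frame FPS values) keeps long frames weighted by the time they actually occupy, which is why a single 50ms spike can drag the 0.1% low to 20FPS while barely moving the average.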

Power is measured at the wall. One case fan is connected, both SSDs, and the system is otherwise left in the "Game Bench" configuration.


NVidia Titan Xp Power Draw vs. 1080 Ti SC2

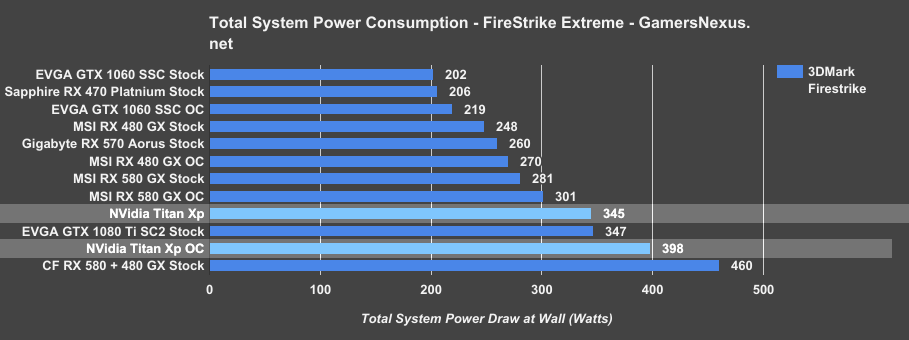

Looking at total system power measured at the wall, the Titan Xp system draws about the same power as the 1080 Ti SC2 card when both are stock – each system around 345W. Overclocking the Titan Xp gets it to about 400W total system power draw, for an increase of about 15% over the stock card. The only configuration that draws more power than this is the CrossFire RX 580 + 480 grouping, at 460W, or about 16% more power draw.

Looking at For Honor next, the Titan Xp stock configuration draws about 355W, about 10W less than the 1080 Ti SC2 AIB card with its boosted power budget. Overclocking the Titan Xp pushes its power draw to 375W total system consumption, for an increase of about 6% in this particular workload.

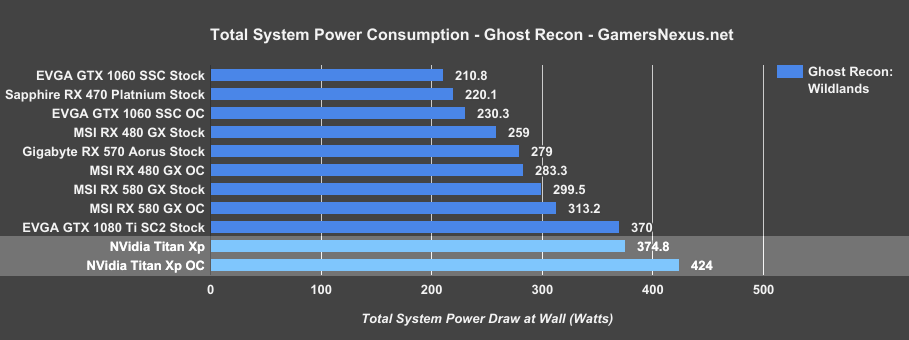

Ghost Recon: Wildlands posts total system power draw at around 370-375W for the stock SC2 and stock Titan Xp, with the overclocked Titan Xp jumping to 424W. That’s an increase of about 13% power consumption from the overclock.

Idle power consumption for the full system is about 75W for the Titan Xp system and about 73W for the EVGA 1080 Ti SC2 system. Given our looser tolerances for power testing compared to other testing, this is effectively identical.
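The overclocking deltas above are simple percentage changes over the stock reading. A quick check using the approximate wall readings quoted in the text, taking the midpoint of the 370-375W stock range for Wildlands (the first chart’s workload is not named, so it is labeled generically):

```python
# Percentage change between two total-system power readings taken at the wall.
def pct_change(before_w: float, after_w: float) -> float:
    return (after_w - before_w) / before_w * 100


# workload: (stock Titan Xp system W, overclocked Titan Xp system W), approximate
READINGS = {
    "first chart (workload not named)": (345, 400),
    "For Honor": (355, 375),
    "Ghost Recon: Wildlands": (372.5, 424),  # midpoint of the 370-375W stock range
}

for workload, (stock, oc) in READINGS.items():
    print(f"{workload}: {stock}W -> {oc}W = +{pct_change(stock, oc):.1f}%")
```

This gives roughly +15.9%, +5.6%, and +13.8%, in line with the rounded figures above.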

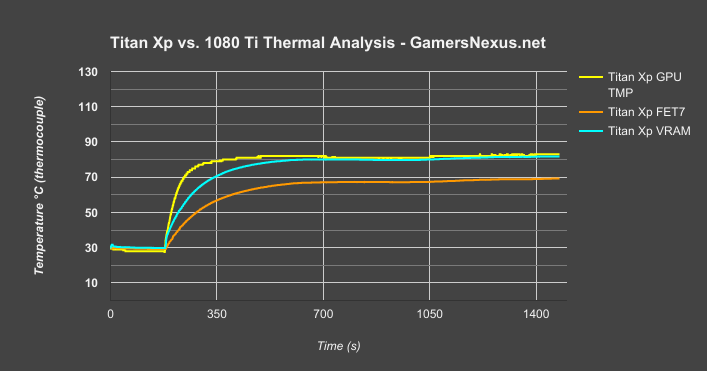

NVidia Titan Xp Temperature vs. Frequency & Fan Speed

Let’s get into the part we’re most interested in with this class of card: thermals. Traditionally, the nVidia Founders Edition coolers have been the most limiting aspect of the card. This can typically be compensated for with higher fan RPMs at the cost of noise, but our hybrid mods resolve both issues in one go.

The Titan Xp doesn’t do too poorly here, though. We’re still hitting a thermal wall around 84C, with the fan leveling off at about 55% speed under load. Clock and fan speeds are shockingly steady with this card, but we’re still losing at least 100MHz off the clock as soon as thermals start rising. Our range is about 120MHz, with the important point being that the clock remains steady after it’s dropped that 100MHz. That’s good and bad: it’s not ideal to drop clocks for thermals, but it’s good that the card maintains a fixed speed rather than attempting to boost back up and being forced down again. That keeps frametimes more consistent, at the least. Our Hybrid mod will talk about this more and attempt to keep the higher clock speed.

Keep in mind that this is a power virus workload, so the clock does not behave the same way it would during a gaming workload – clocks will be lower here, but we’re just looking for stability, not max speed.

Moving now to a thermal analysis chart, we’re looking at component temperatures using thermocouples mounted to MOSFETs and VRAM components. We can compare against the 1080 Ti FE momentarily.

The Titan Xp FET #7 holds its temperature at around 68-70C, with its VRAM temperature around 80C – this is pretty common for the VRAM temperature, though we haven’t expanded that testing to the 1080 Ti FE just yet. GPU temperatures for the Titan Xp are in the 84C range, as you’d expect, which is roughly equal to the temperature of the 1080 Ti FE ($700) card.

The temperatures are all roughly the same once we’re under load, which makes sense, seeing as the Titan Xp is using a PCB that’s basically a 1080 Ti FE PCB. The FE PCB, for what it’s worth, was one that we praised fairly highly in our component-level VRM analysis.

Fans ramp with exactly the same profile on the TiXp and the 1080 Ti, if you were curious, and temperatures and clocks follow the same curves – though the 1080 Ti runs a higher clock. The Ti’s GPU temperature is 1-2C higher at the beginning of the test, but the cards converge over time.

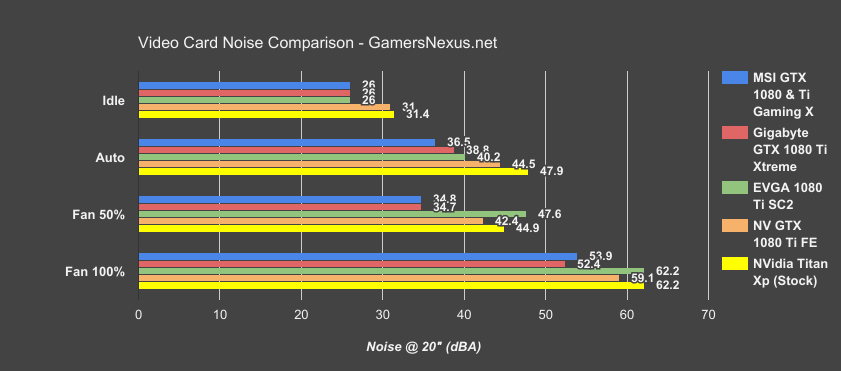

NVidia Titan Xp Noise Levels

For noise testing, we’re measuring at 20” away and doing so with a passively cooled system. The only component making noise is the GPU.

Fan speed at idle is the same for the Titan Xp as for all the other Founders Edition cards of this generation. The fan runs at around 23% at idle, while AIB partner cards sit at the noise floor thanks to passive operation under idle conditions. The Titan Xp maintains an output of about 31dBA at idle, which should be covered up by the case fans in most systems.

Auto speeds land the Titan Xp at 47.9dBA when operating in the 55% fan speed range, compared to AIB partner 1080 Ti cards in the 30s and 40s. This demonstrates the value of those AIB cards, but none will exist for the Titan Xp.

At 50% fan speed, we’re looking at a noise output of around 44.9dBA, with MSI and Gigabyte around the same speeds and EVGA a bit higher.
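Because decibels are logarithmic, small dBA differences hide larger changes in sound energy. A quick sketch, assuming the standard relation that a difference of d dB is a sound-intensity ratio of 10^(d/10), plus the common rule of thumb (not a precise psychoacoustic model) that +10dB sounds roughly twice as loud:

```python
# Convert a difference between two dBA readings into ratios.
def intensity_ratio(db_a: float, db_b: float) -> float:
    """Sound-intensity (power) ratio of reading a over reading b."""
    return 10 ** ((db_a - db_b) / 10)


def perceived_loudness_ratio(db_a: float, db_b: float) -> float:
    """Rule of thumb: every +10dB is perceived as roughly twice as loud."""
    return 2 ** ((db_a - db_b) / 10)


# Auto fan speed (47.9dBA) vs. 50% fan speed (44.9dBA) on the Titan Xp.
print(f"intensity x{intensity_ratio(47.9, 44.9):.2f}, "
      f"perceived x{perceived_loudness_ratio(47.9, 44.9):.2f}")
```

By that measure, the 3dB step from 44.9dBA at 50% fan speed to 47.9dBA at auto speeds roughly doubles the sound intensity, though it should only sound about 23% louder.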

You’d ideally never run a 100% fan speed on any of these GPUs, but we’ve included the numbers to demonstrate the maximum noise possible.


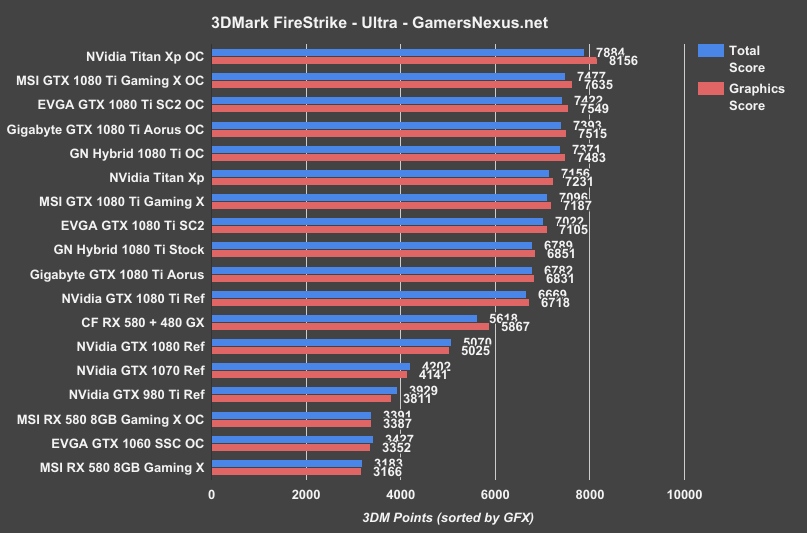

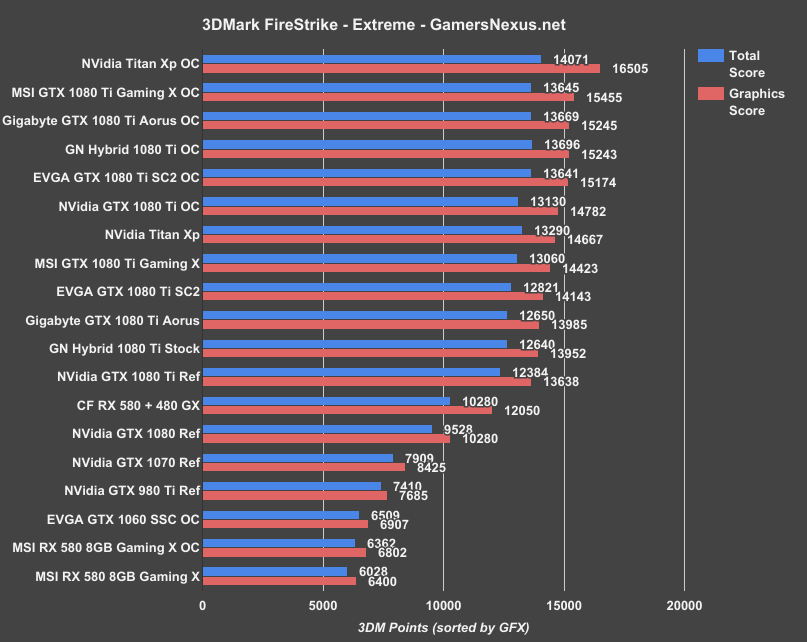

3DMark & Synthetic Benchmarking

We're using 3DMark for synthetic benchmarking here, though we won't begin data analysis until the game benchmarks. These charts are just for fans of 3DMark (like benchmarkers and overclockers) who like having the synthetic metrics on hand.

We're using 3DMark FireStrike (Normal, Extreme, and Ultra) alongside TimeSpy for testing. Data analysis of games follows in the next section.


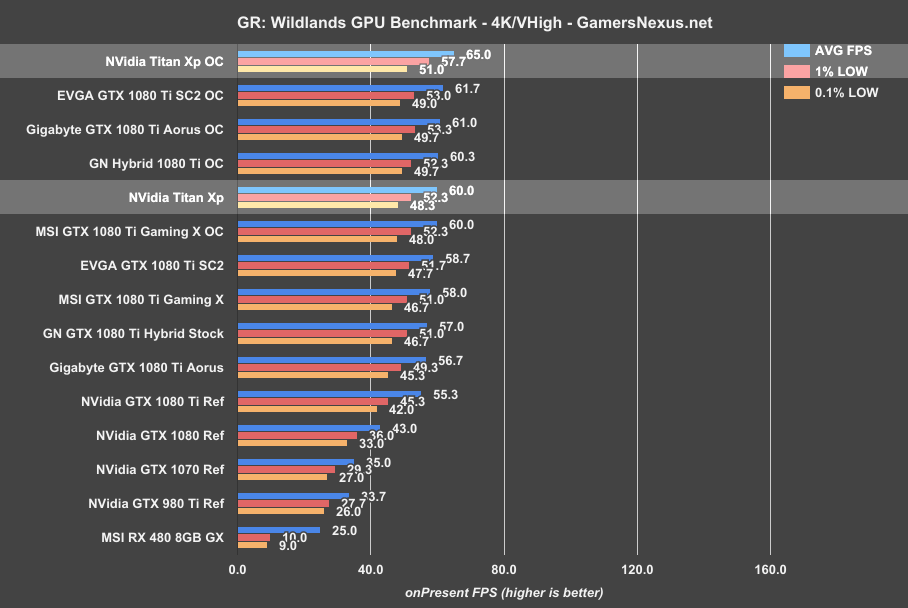

Ghost Recon: Wildlands Benchmark – Titan Xp 2017 vs. 1080 Ti

Moving on to FPS benchmarks, we don’t expect to see extraordinary gains over the 1080 Ti cards, but we’ll see if that $500 difference makes any impact in our gaming tests.

Starting with Ghost Recon: Wildlands at 4K with Very High settings, the nVidia Titan Xp stock card performs at around 60FPS AVG, with 1% and 0.1% lows at 52 and 48FPS. This puts the card tied with the overclocked 1080 Ti Gaming X ($750) and our own overclocked Hybrid 1080 Ti FE mod, leading stock 1080 Ti cards by a couple percent. Versus the 1080 Ti FE reference card, we’ve got about an 8% gain, from 55.3FPS to 60FPS AVG. Over the AIB partner 1080 Ti SC2 that we just reviewed, configured stock, we see a gain of about 2.2% AVG, with frametimes effectively equal. That means you’re spending over $200 per percentage point gained in performance.

Overclocking doesn’t change much. The TiXp takes the lead at 65FPS AVG, followed next by the 1080 Ti SC2 (review here) at 62FPS AVG. The percentage difference here is 5% -- so we’re only paying $100 per percentage point once overclocking is permitted.
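The cost-per-percentage-point figure is the price premium divided by the percentage FPS gain. A sketch using the overclocked 4K numbers above (62 to 65FPS AVG) and an assumed $500 premium; the actual premium over a given 1080 Ti ranges from about $450 to $500 depending on the card:

```python
# Dollars paid per percentage point of average-FPS gain.
def pct_gain(fps_base: float, fps_new: float) -> float:
    return (fps_new - fps_base) / fps_base * 100


def cost_per_point(price_delta_usd: float, fps_base: float, fps_new: float) -> float:
    return price_delta_usd / pct_gain(fps_base, fps_new)


# Overclocked 1080 Ti SC2 (62FPS AVG) vs. overclocked Titan Xp (65FPS AVG) at 4K,
# with an assumed $500 price premium.
print(f"gain {pct_gain(62, 65):.1f}%, ${cost_per_point(500, 62, 65):.0f} per point")
```

That works out to about 4.8% and roughly $103 per point, which the text rounds to 5% and $100.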

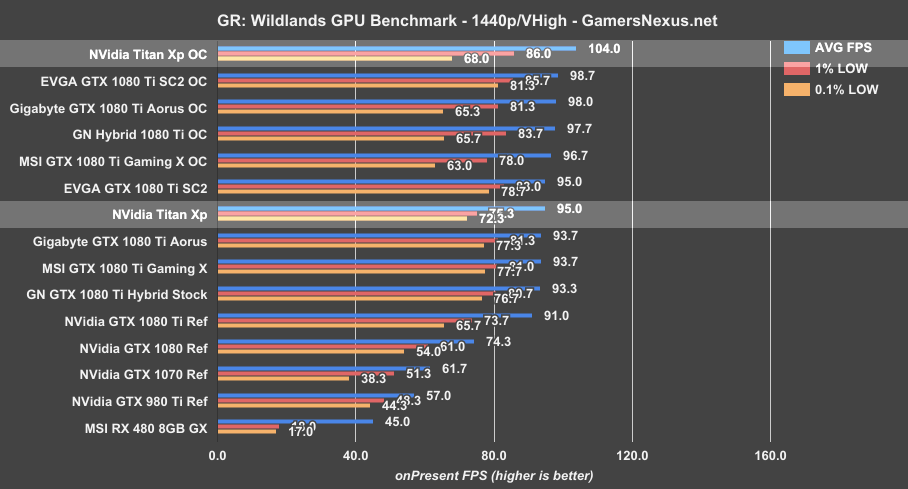

At 1440p, the Titan Xp performs identically in averages to the 1080 Ti SC2 card and close to the Gigabyte Xtreme Aorus; both of those 1080 Ti cards are currently priced $720 to $750. The Titan Xp falls slightly behind the 1080 Ti cards in frametimes, though not in any meaningful way.

Overclocking boosts the TiXp to 104FPS AVG, though we run into thermal and power limits that threaten clock stability and, therefore, frametime performance. We’re at 104FPS AVG versus 99FPS AVG on the closest 1080 Ti OC card. That’s about a 5% lead again, consistent with the 4K results.

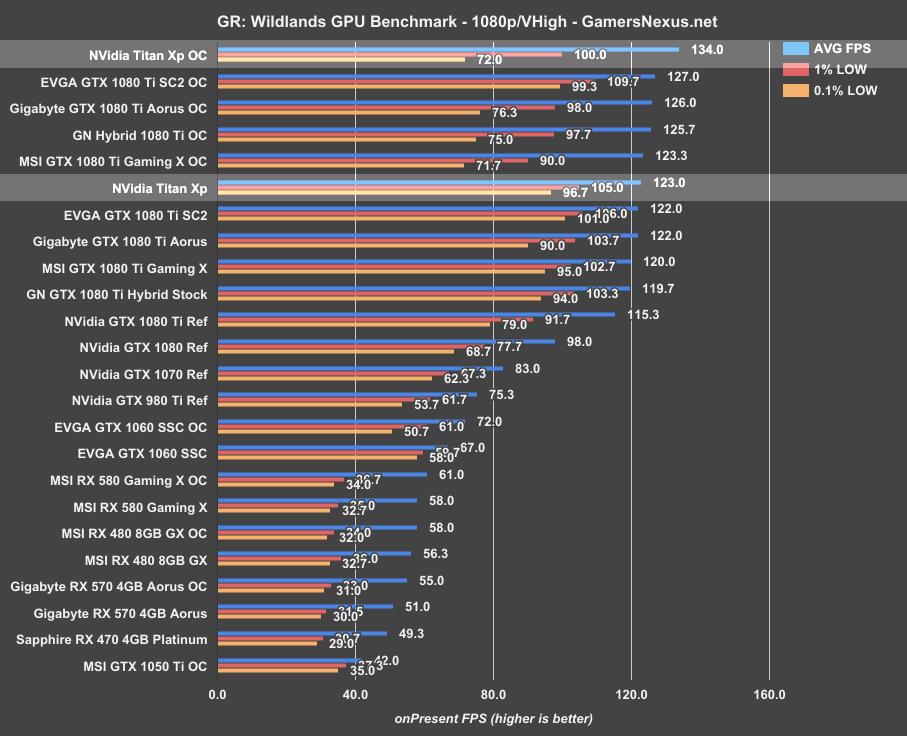

We see the same pattern at 1080p, with the Titan Xp overclock faltering in frametime consistency in exchange for a 5% lead over EVGA’s SC2 in averages.

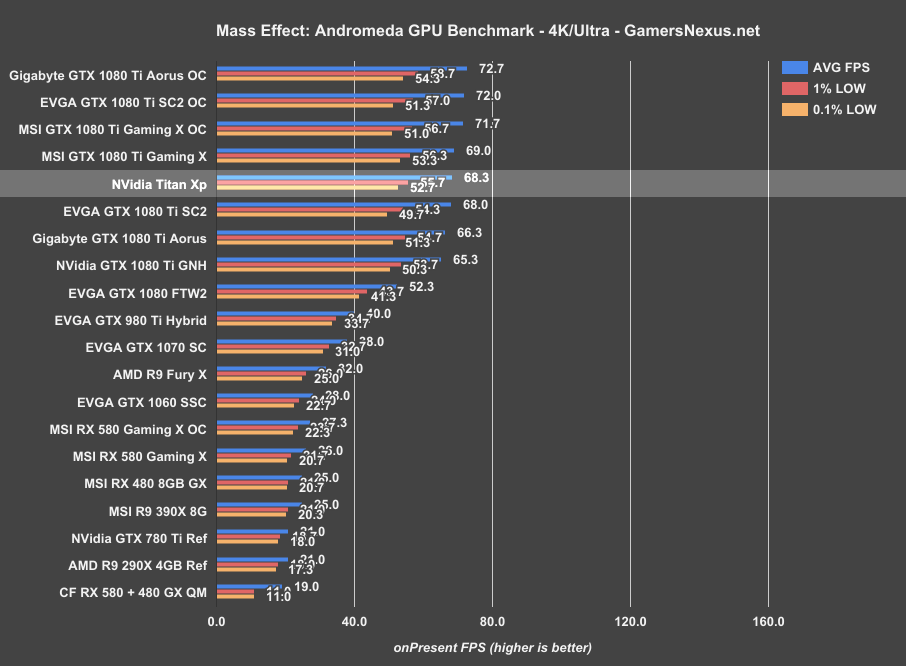

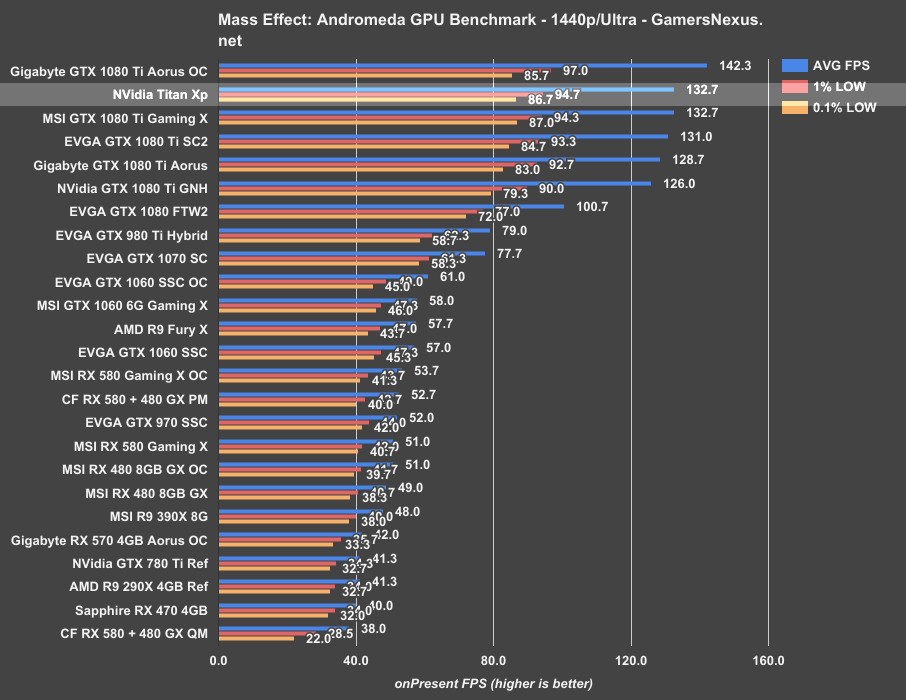

Mass Effect: Andromeda – Titan Xp vs. 1080 Ti Benchmark

Mass Effect: Andromeda at 4K positions the Titan Xp at 68FPS AVG, just behind the 1080 Ti Gaming X and ahead of the 1080 Ti SC2. These three cards are effectively equal in performance, given run-to-run standard deviation.

At 1440p, the Titan Xp ties again with the MSI 1080 Ti Gaming X and slightly leads the SC2, all around 131-133FPS AVG.

DOOM (Vulkan) Benchmark – Titan Xp vs. 1080 Ti

Running DOOM at 4K with Vulkan and Ultra settings, the Titan Xp stock card runs around 94FPS AVG, ahead of the 1080 Ti reference card by about 5%. The Titan Xp is effectively tied with the 1080 Ti Reference once the thermal constraint is removed, as seen in our Hybrid mod, and falls behind the higher clock-rates of all the other cards on the bench. Overclocking the Titan Xp boosts it to 108FPS in this clock-sensitive game, particularly benefiting from a big memory overclock. We gain about 3% over the 1080 Ti Xtreme Aorus, and that’s rounding up, leading to about a $160 cost per percentage point.

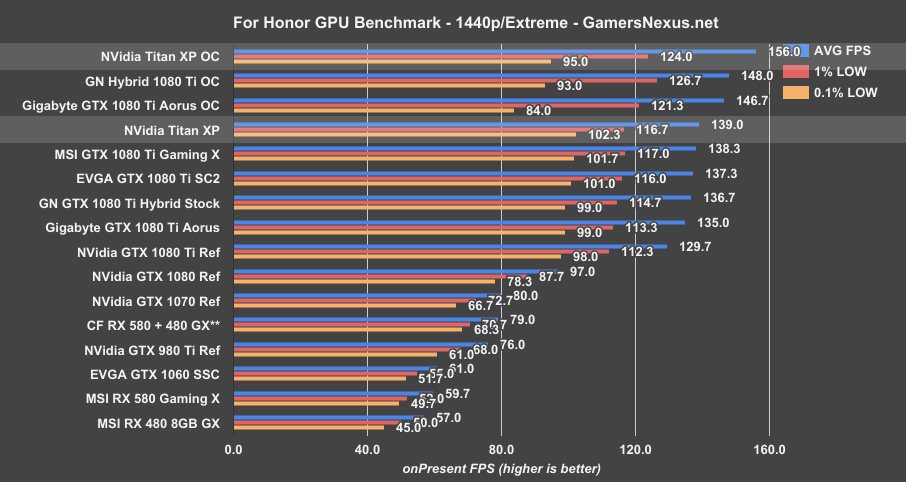

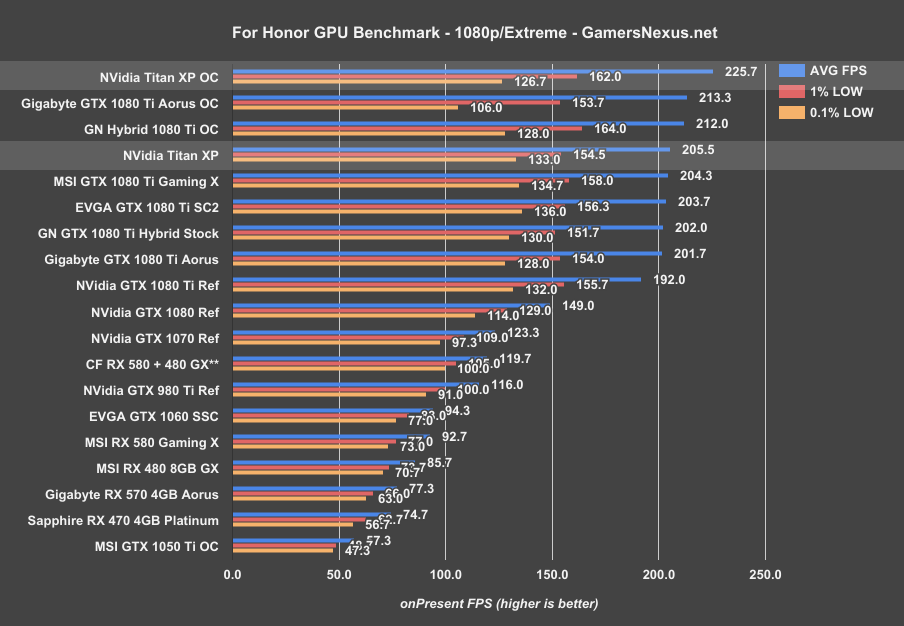

For Honor Benchmark – Titan Xp vs. 1080 Ti

Running For Honor at 4K, the Titan Xp stock performs at around 72FPS AVG, tied with the Gaming X in averages but behind marginally in lows. The 1080 Ti SC2 runs a 71FPS AVG, tailing the Titan Xp by 2.2% again.

Overclocking in this game proves problematic for nearly all of the devices we’ve tested, with stability becoming an issue without backing off the OC in substantial ways. Still, the TiXp manages to cling to the top spot just barely.

Ashes of the Singularity (Dx12) Benchmark – Titan Xp vs. 1080 Ti

Sniper Elite 4 (Dx12) – Titan Xp vs. CrossFire RX 580+480, 1080 Ti

Sniper Elite 4 at 4K resolution with Dx12 and Async Compute allowed our CrossFire RX 580 + RX 480 combo to outperform a reference 1080 Ti thanks to fringe multi-GPU optimization in this game. The Titan Xp runs at 87FPS AVG in this title, just ahead of the Gaming X at 86FPS AVG and just behind the overclocked 1080 Ti SC2 card. We’ve got a lead of about 14.9% over the reference 1080 Ti, or about 10.4% ahead of the CrossFire cards in this particularly well-optimized title.

Overclocking gets us a reasonable lead of 11.5% over the SC2 overclocked card, bringing our cost-per-percentage-point increase down to $40, since that’s apparently a new metric with this card.

Conclusion: NVidia Titan Xp for Gaming or 1080 Ti?

This thing clearly isn’t best used for gaming. Again, that won’t stop people from buying it because it’s “the best,” and nVidia does still advertise its gaming capabilities a few times on the product page.

The thing is, at $200 per percentage point gained in some of these games, it’s a pretty tough argument. You’re talking differences that are nearly obscured by standard deviation test-to-test, even when accounting for excursions from the mean; in fact, in some cases, the AIB partner model 1080 Ti cards clock high enough that performance is effectively identical to the Titan Xp. This becomes even more true when accounting for manufacturing variance chip-to-chip.

Overclocking does keep the TiXp on top of the charts, but it’s often minimal. Sniper Elite is the only game where the TiXp saw any reasonable lead over the 1080 Ti, but even then, it’s not a good bargain.

If buying this for the extra 1GB VRAM for code execution with neural nets and deep learning, we’d advise looking up reviews for that application. This card may well be a performance king in that department, particularly at the price. For our audience, though, we simply cannot recommend the Titan Xp for gaming use cases. A 1080 Ti makes far more sense.

Editor-in-Chief: Steve Burke

Video Producer: Andrew Coleman