Watch Dogs PC GPU Benchmark: GTX 750 Ti, R9 270X, GTX 770, GTX 780 Ti, More


The Watch Dogs launch has been a worrisome one for PC hardware enthusiasts. We've heard tale of shockingly low framerates and poor optimization since Watch Dogs was leaked ahead of shipment, but without official driver support from AMD and limited support from nVidia, it was too early to call performance. Until this morning.

At launch, AMD pushed out its 14.6 beta drivers alongside nVidia's 337.88 beta drivers. Each promised performance gains in excess of 25% for specific GPU / Watch Dogs configurations. As we did with Titanfall, I've run Watch Dogs through our full suite of GPUs and two CPUs (for parity) to determine which video cards are best for different use cases of the game. It's never as clear-cut as "buy this card because it performs best," so we've broken down how different cards perform on various settings.

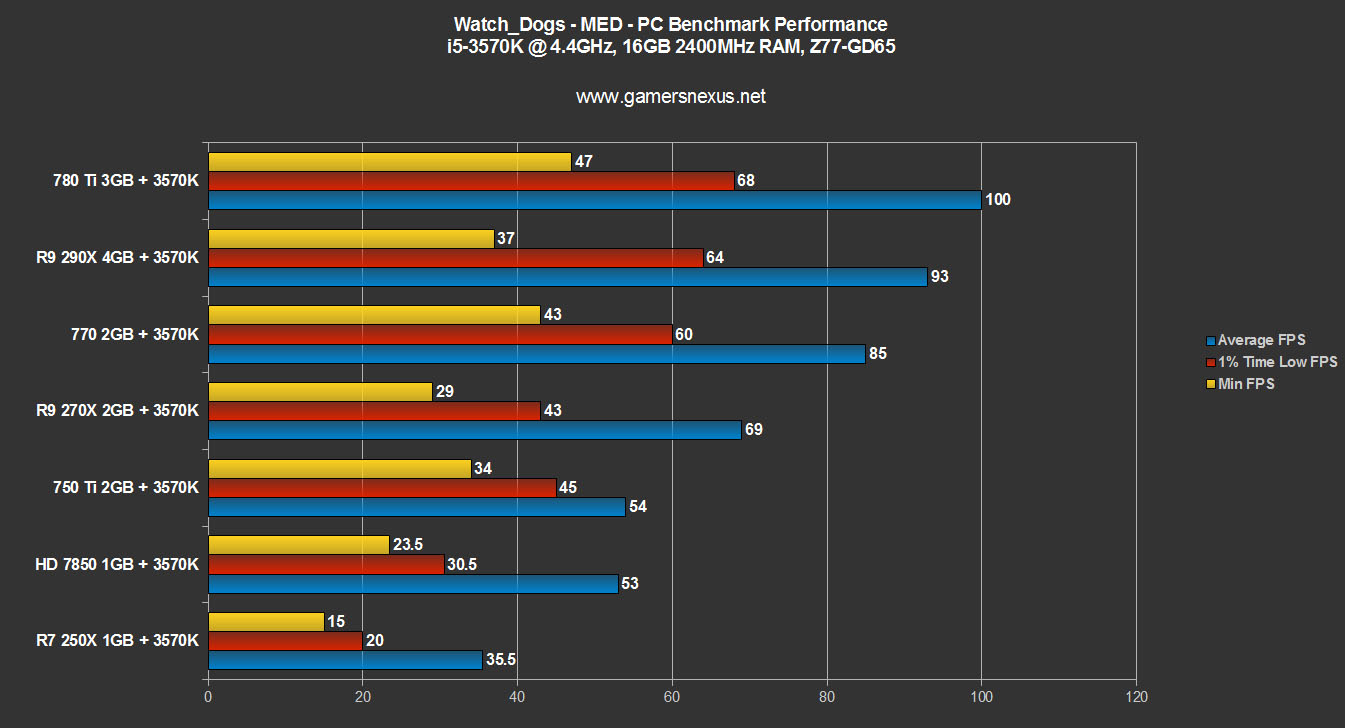

In this Watch Dogs PC video card & CPU benchmark, we look at the FPS of the GTX 780 Ti, 770, 750 Ti, R9 290X, R9 270X, R7 250X, and HD 7850; to determine whether Watch Dogs is bottlenecked on a CPU level, I also tested an i5-3570K CPU vs. a more modern i7-4770K CPU. The benchmark charts below give a good idea of what video cards are required to produce playable framerates at Ultra/Max, High, and Medium settings.

Update: Our very critical review of Watch Dogs is now online here.

Watch Dogs has a number of performance-hitting graphics settings in the options menus. After some playing around, I was able to determine how each item impacts framerate and what settings should be tweaked for optimized FPS. We'll look into some of those below.

As an aside of sorts, I wanted to bring attention to nVidia's inherent edge here. Just like AMD did with Tomb Raider, nVidia worked closely with Ubisoft to develop Watch Dogs for its video hardware. During Tomb Raider's development, GN staff was made aware that nVidia had no early access to the game and would "get it at the same time as [we did]." I'm curious if something similar happened to AMD here, given the GameWorks partnership and GPU bundle.

Watch Dogs Benchmark - Video Overview & Max / ULTRA Graphics Settings

Watch Dogs Graphics Settings Explained

Watch Dogs is technically a GameWorks title, though that doesn't mean you need nVidia hardware to appreciate some of the technology. Ubisoft has assigned the Disrupt engine to Watch Dogs, an in-house solution to aid in multiplayer and AI mapping in an open world. The engine also brings with it some graphics innovations, like real-time dynamic reflections, subsurface scattering to illuminate skin, deferred lighting, all manner of ambient occlusion / AA tech, ray-tracing, and more.

The intense focus on lighting and shadowing brings with it additional load on the GPU, often placing the onus of proper optimization (sans GFE) on the end-user. Because we actually like to understand the settings here, rather than just hitting an 'optimize' button, let's go through each of the major options.

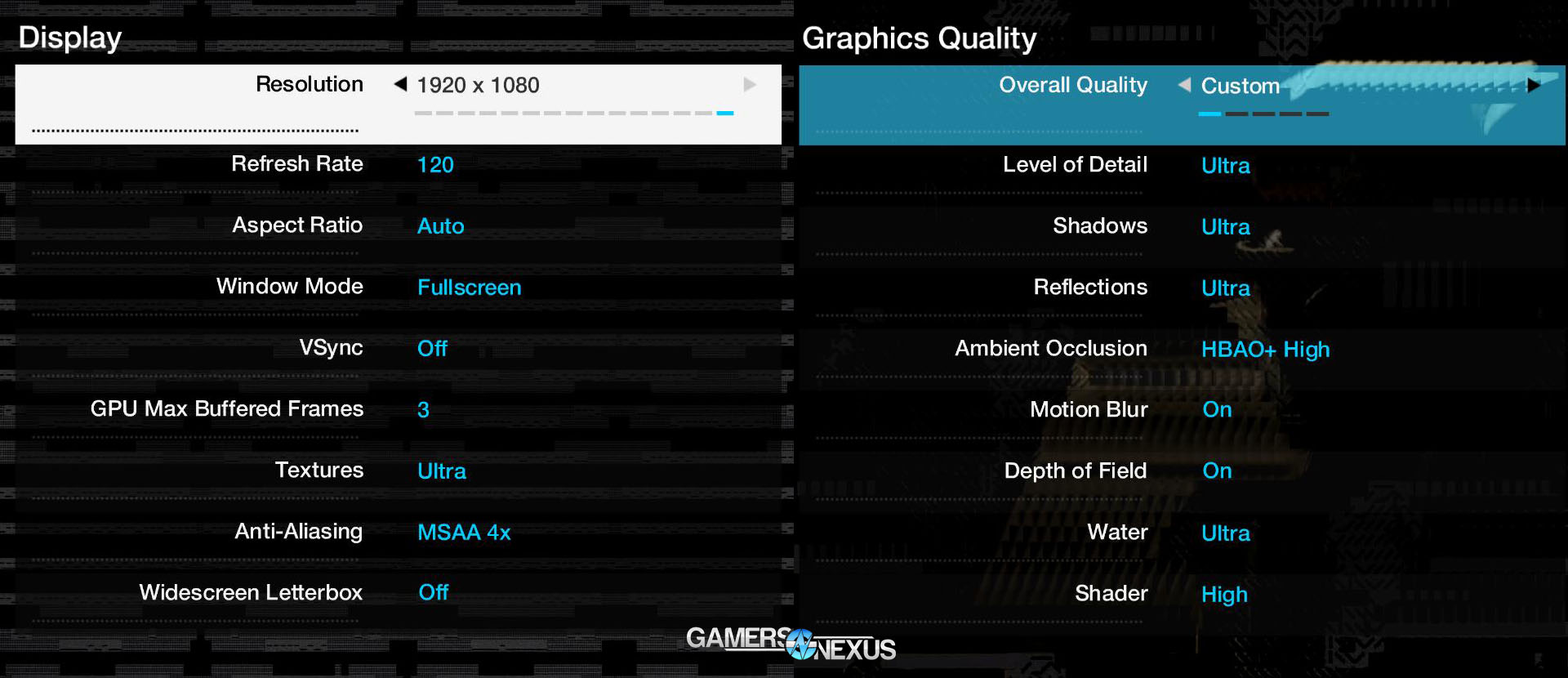

Display Settings -- Max Buffered Frames, Textures, Anti-Aliasing

The 'display' section of the graphics settings presents only a few non-obvious options:

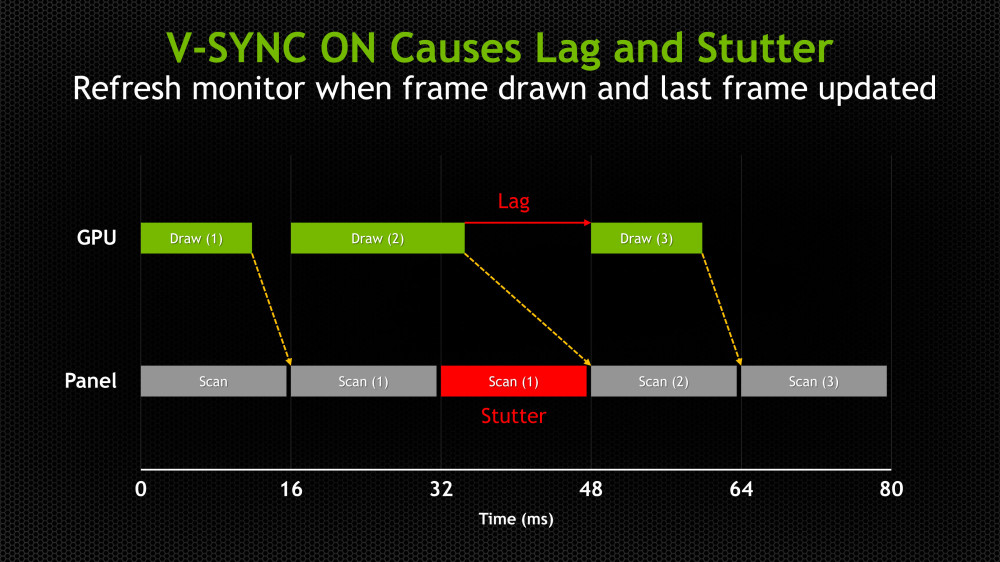

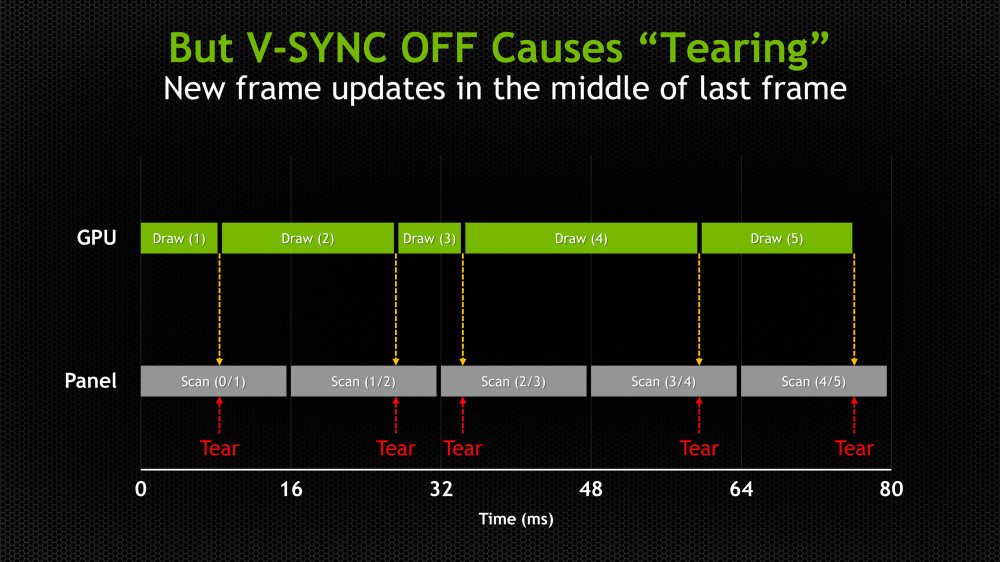

GPU Max Buffered Frames: The number of frames we permit the GPU to pre-render before they are shipped to the display. This will aid in decreasing visible tearing / stuttering, as we've explained previously. This number should generally not go above 3 unless you've got a massive framebuffer. Only increment this number if stuttering becomes a significant issue. Buffered frames will consume additional video memory.

V-Sync: Synchronization of the display's vertical refresh rate with the video rendering device. Enabling v-sync will help to reduce tearing, but will introduce stuttering in its place. We would advise that you disable this entirely. It's easier to compensate mentally for torn frames than for missing frames. The link immediately prior explains v-sync and other similar technologies.
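One way to see where v-sync stutter comes from is a minimal sketch that assumes plain double-buffered v-sync, with no triple buffering or adaptive sync. That mode is an assumption here, since we haven't confirmed which one Watch Dogs uses, and `vsync_fps` is a hypothetical helper rather than anything from the game or a driver. Under that assumption, every frame is held for a whole number of refresh intervals, so the displayed framerate snaps down to a divisor of the refresh rate:

```python
import math

def vsync_fps(render_fps, refresh_hz):
    """Displayed frame rate under strict double-buffered v-sync (assumed mode).

    Each finished frame waits for the next refresh, so it occupies a
    whole number of refresh intervals.
    """
    intervals_per_frame = max(1, math.ceil(refresh_hz / render_fps))
    return refresh_hz / intervals_per_frame

# What a 60 Hz display would show for a few raw GPU frame rates.
for fps in (70, 59, 45, 29):
    print(f"GPU at {fps} FPS on 60 Hz -> {vsync_fps(fps, 60):.0f} FPS displayed")
```

In this model, a GPU that falls just short of 60 FPS on a 60Hz display is shown at 30 FPS, which is the kind of sudden drop that reads as stutter.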

Textures: The resolution of the game's textures. Watch Dogs strongly encourages 3GB of video RAM for 'Ultra' texture resolution and 2GB for 'High' texture resolution.

Anti-Aliasing: There are several types of anti-aliasing in the world of game graphics settings. Within Watch Dogs, we're presented with FXAA, SMAA, Temporal SMAA, TXAA, and MSAA. Each variant of anti-aliasing works to achieve a similar objective: smooth edges to produce a more cinematic scene.

FXAA attempts to do this by applying post-process anti-aliasing, a low-cost, high-performance anti-aliasing method that aids in the presentation of thin objects in game (barbed wire, for instance). FXAA has minimal impact on GPU performance. Skyrim stands as a prime example of another game that makes use of FXAA as a low-cost anti-aliasing solution for better scalability to low-end hardware.

SMAA is another solution that is heavily software-accelerated. Enhanced subpixel morphological anti-aliasing is designed to produce more accurate silhouettes and sharper features on thin objects while remaining minimally intensive on system resources.

You've likely seen MSAA before. Multi-sample anti-aliasing is resolved largely at the hardware level and is one of the most aggressive anti-aliasing techniques in games. MSAA comes in 2x, 4x, and 8x flavors in Watch Dogs. After speaking with nVidia and doing some of our own testing, we've determined that it's often best to opt for MSAA 4x or better, ignoring 2x almost entirely. 4x demands a good deal of GPU horsepower, so for GPUs that struggle to output at 4x MSAA, it is advisable to opt instead for Temporal SMAA. Temporal SMAA will produce a better overall image than 2x MSAA and will do so at significantly less cost to the GPU, yielding superior performance overall. MSAA is VRAM-intensive and will punish video cards that live below 2GB capacity.

TXAA is an nVidia-developed, hardware-accelerated AA solution that takes advantage of Kepler's unique encoding and rendering architecture. TXAA aggressively attacks object edges to smooth them with more efficacy than the other methods, but requires a Kepler or Maxwell GPU to use. TXAA is not as VRAM-intensive as MSAA. TXAA has a tendency to look slightly more 'washed-out' and less defined than MSAA, but depending on the game, this can be a desirable effect. Too much detail begins to look fake -- similar to jacking-up the 'detail' setting when sharpening a photo in Photoshop.
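To put MSAA's VRAM appetite in rough perspective, here is a back-of-the-envelope sketch. It assumes a single 32-bit color target plus a 32-bit depth/stencil target, both stored at the full sample count; those formats and the `msaa_target_mib` helper are illustrative assumptions, not figures from Ubisoft or nVidia. An engine with deferred lighting keeps several such targets, so real usage will be higher.

```python
def msaa_target_mib(width, height, samples, bytes_per_sample=8):
    """Approximate size in MiB of one multisampled color + depth target.

    bytes_per_sample=8 assumes 4 bytes of color and 4 bytes of
    depth/stencil per sample (an assumption for illustration).
    """
    return width * height * samples * bytes_per_sample / (1024 ** 2)

# 1920x1080 is the resolution used on our test display.
for samples in (1, 2, 4, 8):
    size = msaa_target_mib(1920, 1080, samples)
    print(f"{samples}x MSAA: {size:.1f} MiB per color+depth target")
```

The cost scales linearly with the sample count, so multiplying it across every render target an engine keeps around is what squeezes cards with less memory.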

Watch Dogs Graphics Quality Settings -- LOD, AO, DOF

The following options are available at various levels on the "Graphics Quality" screen:

- Level of Detail

- Shadows

- Reflections

- Ambient Occlusion

- Motion Blur

- Depth of Field

- Water

- Shader

I'm not going to delve into each of these individually, but we'll hit a couple of the key items.

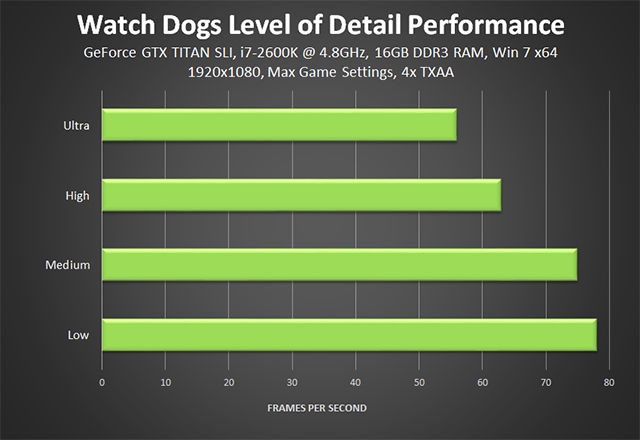

Level of Detail: LOD controls the visual fidelity of everything in the game. Regardless of your settings, there are various levels of detail applied to objects based on player vantage point and distance. A vehicle 100m away requires significantly less detail than the one you're driving, for instance, and thus will be rendered with a lower level of detail (fewer polys, among other changes). LOD in Watch Dogs will cause the most violent performance swings of any setting, perhaps short of some AA options. Lowering LOD stops unnecessary scene elements from being rendered. The overall liveliness / grittiness of the world will drop with each LOD decrement, but performance gains are often necessary and sacrifices must be made. Here's a benchmark that nVidia did strictly modifying LOD while retaining all other settings:

We spoke in more depth about LOD with Chris Roberts during our old Star Citizen technology interview & more recent tech follow-up.
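For readers who want a concrete picture of distance-based LOD, here is a minimal sketch. The distance thresholds, the `select_lod` helper, and the idea of modeling the quality setting as a simple bias are all hypothetical; none of this is taken from the Disrupt engine.

```python
from bisect import bisect_right

# Hypothetical distance cut-offs in meters between LOD tiers.
LOD_DISTANCES_M = [25.0, 75.0, 200.0]

def select_lod(distance_m, lod_bias=0):
    """Return an LOD index: 0 is the most detailed mesh, higher is coarser.

    lod_bias models a lower graphics setting by shifting every object
    toward a coarser tier (an assumption for illustration).
    """
    base = bisect_right(LOD_DISTANCES_M, distance_m)
    coarsest = len(LOD_DISTANCES_M)
    return max(0, min(base + lod_bias, coarsest))

# The car you're driving versus one 100m down the road.
print(select_lod(0.0), select_lod(100.0))
```

With these made-up thresholds, the car being driven gets tier 0 and the car 100m away gets tier 2; raising the bias pushes both toward coarser meshes, which is where the performance comes from.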

Shadows, Reflections: These two items are self-explanatory. I'm listing them here anyway because they're two of the most immediate items to decrease in the event of an underperforming GPU. Reflections will have heavy impact on GPU performance given the game's propensity for dark, rainy environments that lend themselves to reflected light. An example of reflections within Watch Dogs would be car lights reflecting off of a damp road.

Ambient Occlusion: AO settings determine the realism with which surface shadows are cast. Both nVidia and AMD have in-house solutions (HBAO and HDAO, respectively), but only nVidia's is present within Watch Dogs. MHBAO is available as an alternative. In our testing, whenever an nVidia video card is present (Kepler or newer), HBAO+ (High) should be enabled at all times. HBAO+ always outperformed MHBAO by marginal amounts—a frame or two—on nVidia hardware.

Test Methodology

To produce the best real-world results without introducing test inconsistencies, we laid out a set circuit that was executed on each pass of the test. FRAPS was used for frametime, average FPS, 1% Time Low FPS, and minimum FPS (.1%, eliminating outliers); FRAFS was deployed as our metrics interpretation software. Each test iteration underwent three passes of the two-minute course. Results were averaged. The course involved one minute of driving in a fast vehicle (around the block, moving to new cells) and one minute of free movement around and atop the Owl Motel. Understandably, the driving element taxed resources most heavily.
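For anyone wanting to reproduce this kind of analysis from a raw frametime log, the sketch below shows one plausible way to derive the metrics. It is not the FRAFS implementation: the function names are hypothetical, the 1% figure is interpreted as "the FPS you sit at or below for 1% of the run time," and the minimum here is simply the single slowest frame, with no outlier filtering.

```python
from statistics import mean

def low_fps_by_time(frame_times_ms, fraction):
    """FPS at the point where the slowest frames add up to `fraction` of run time."""
    budget_ms = sum(frame_times_ms) * fraction
    spent_ms = 0.0
    for frame_ms in sorted(frame_times_ms, reverse=True):  # slowest first
        spent_ms += frame_ms
        if spent_ms >= budget_ms:
            return 1000.0 / frame_ms
    return 1000.0 / min(frame_times_ms)  # only reached through float rounding

def summarize_pass(frame_times_ms):
    """Reduce one pass of per-frame render times (ms) to the charted metrics."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    return {
        "avg_fps": len(frame_times_ms) / (sum(frame_times_ms) / 1000.0),
        "low_1pct_fps": low_fps_by_time(frame_times_ms, 0.01),
        "min_fps": 1000.0 / max(frame_times_ms),
    }

def average_passes(passes):
    """Average each metric across passes (we averaged three per test)."""
    return {key: mean(p[key] for p in passes) for key in passes[0]}
```

Calling `summarize_pass` on each pass's frame times and feeding the three results to `average_passes` mirrors the three-pass averaging described above.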

We used two test benches for this article. The primary bench is listed immediately below and hosts an overclocked i5-3570K IB CPU at 4.4GHz (42x) @ 1.265 vCore.

| GN Test Bench 2013 | Name | Courtesy Of | Cost |
|---|---|---|---|
| Video Card | (This is what we're testing.) XFX Ghost 7850 | GamersNexus, AMD, NVIDIA, CyberPower | Ranges |
| CPU | Intel i5-3570K CPU; Intel i7-4770K CPU (alternative bench) | GamersNexus; CyberPower | ~$220 |
| Memory | 16GB Kingston HyperX Genesis 10th Anniv. @ 2400MHz | Kingston Tech. | ~$117 |
| Motherboard | MSI Z77A-GD65 OC Board | GamersNexus | ~$160 |
| Power Supply | NZXT HALE90 V2 | NZXT | Pending |
| SSD | Kingston 240GB HyperX 3K SSD | Kingston Tech. | ~$205 |
| Optical Drive | ASUS Optical Drive | GamersNexus | ~$20 |
| Case | Phantom 820 | NZXT | ~$130 |
| CPU Cooler | Thermaltake Frio Advanced | Thermaltake | ~$65 |

The secondary test bench, a Zeus Mini provided for review by CyberPower (review impending), was borrowed temporarily to determine whether a CPU upgrade to the 4770K would uncover a CPU throttle.

Both systems were kept in a constant thermal environment (21C - 22C at all times) while under test. 4x4GB memory modules were kept overclocked at 2400MHz. All case fans were set to 100% speed and automated fan control settings were disabled for purposes of test consistency and thermal stability.

A 120Hz display was connected for purposes of ensuring frame throttles were a non-issue. The native resolution of the display is 1920x1080. V-Sync was completely disabled for this test.

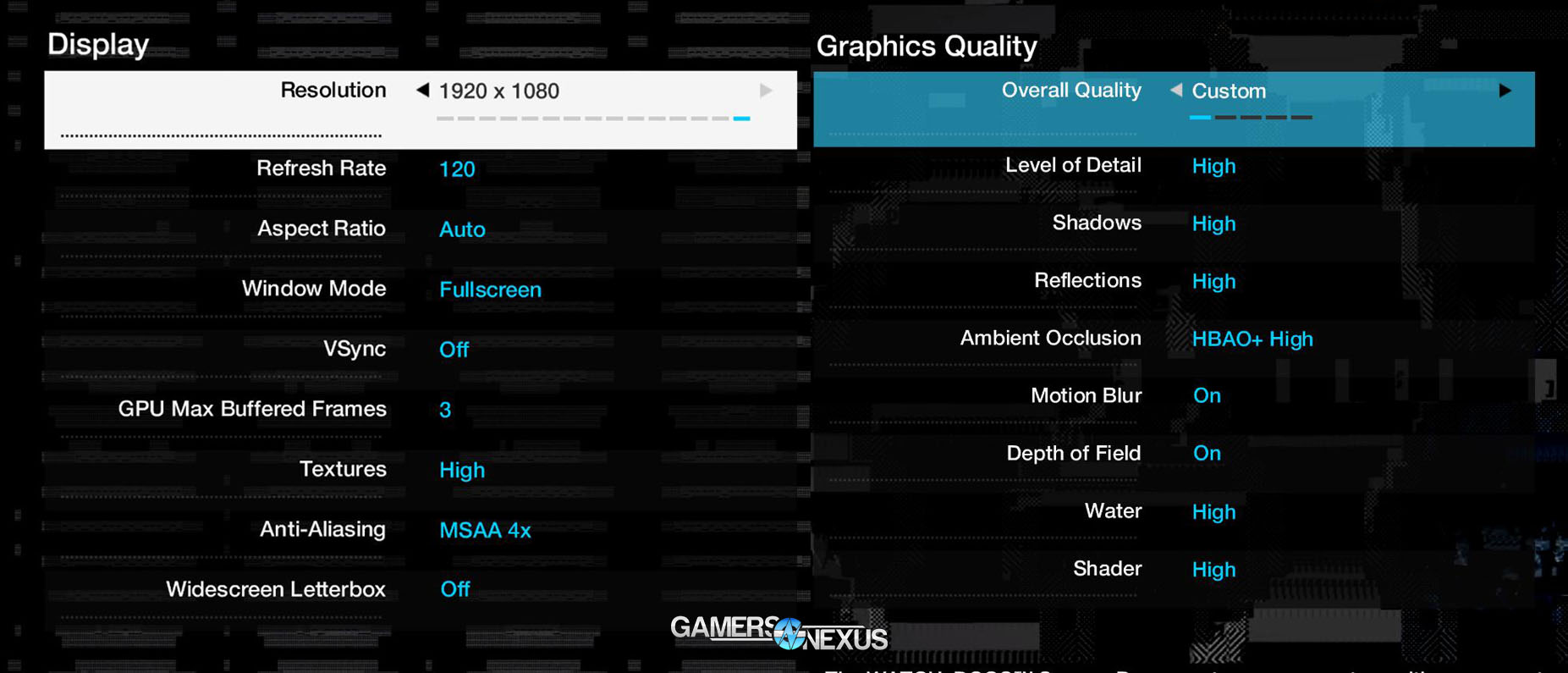

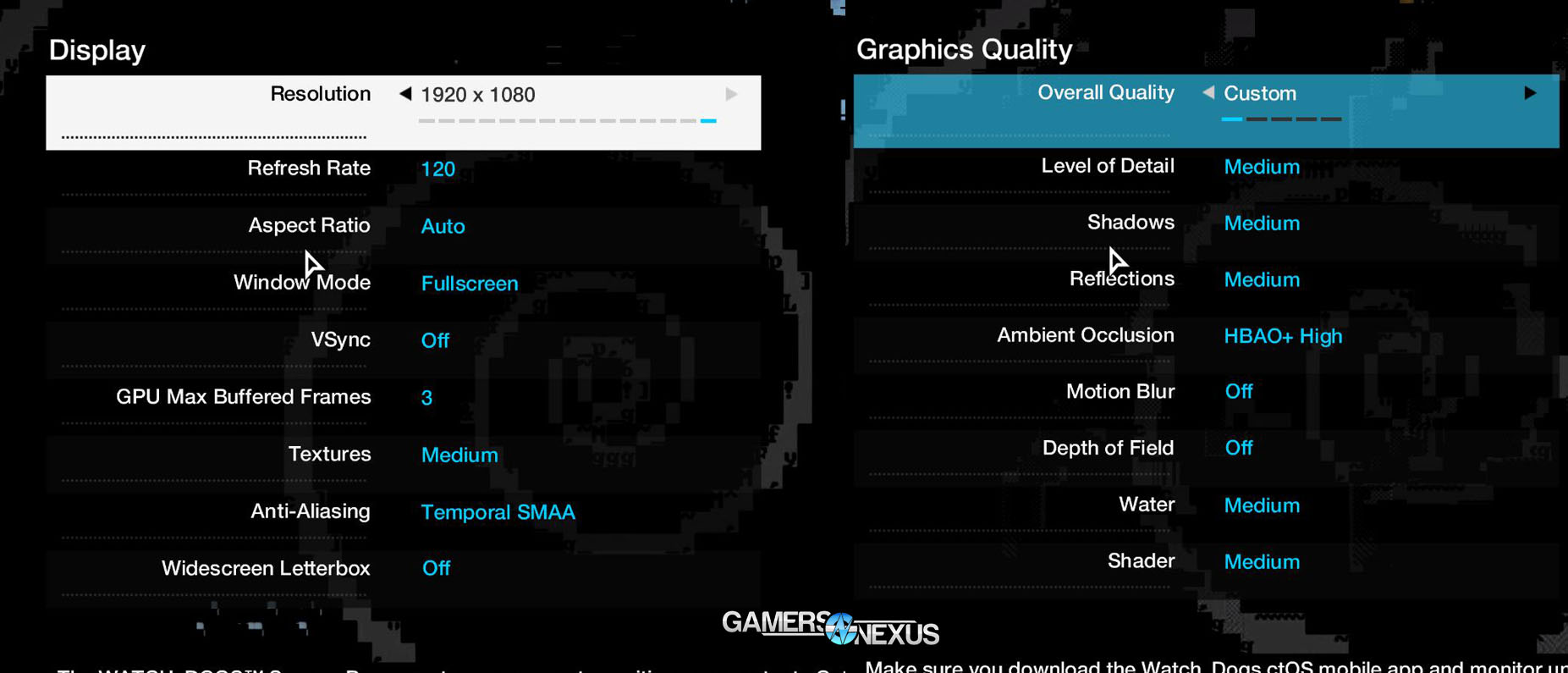

We ran the following settings for all discrete devices under test (DUT):

HBAO+ (High) was enabled for all nVidia devices. MHBAO was utilized for AMD devices. These were tested independently for performance and it was determined that those settings were the most fair to each product.

A few additional tests were performed as one-offs to test various graphics settings for impact.

The video cards tested include:

- Intel HD4000 3rd-Gen IGP (last-gen).

- AMD Radeon R9 290X 4GB.

- AMD Radeon R9 270X 2GB (we're using reference).

- AMD Radeon HD 7850 1GB.

- AMD Radeon R7 250X 1GB (equivalent to HD 7770).

- NVidia GTX 780 Ti 3GB.

- NVidia GTX 770 2GB (we're using reference).

- NVidia GTX 750 Ti Superclocked 2GB.

The HD4000 failed all tests and will not be included on the results charts. All devices under test were provided by AMD and nVidia for review purposes, with the exception of the 7850 & HD4000, which we provided.

Watch Dogs PC Benchmarks: GTX 750 Ti vs. 250X, R9 270X, GTX 770, GTX 780 Ti, R9 290X

Let's jump straight into it:

From our Titanfall benchmark:

- Average FPS: This is the number you care most about. This is the most realistic representation of what you'll experience with this video card in Titanfall.

- Minimum FPS: The lowest FPS ever reported (<0.01% of the total frame count). This is an outlier by nature and is less realistic of a measurement than the next item.

- 1% Time Low FPS: The (low) FPS displayed 1% of the time; a representation of how low your 'lag spikes' will go when limited by video hardware. This will have serious impact on streaming and video capture.

Side note: I saw a 10.5% improvement in Catalyst 14.6 (shown) over Catalyst 14.4.

We were unfortunately unable to test SLI and CrossFire performance at this time due to hardware limitations, but it is something we're looking at permanently adding in the near future (post-Computex).

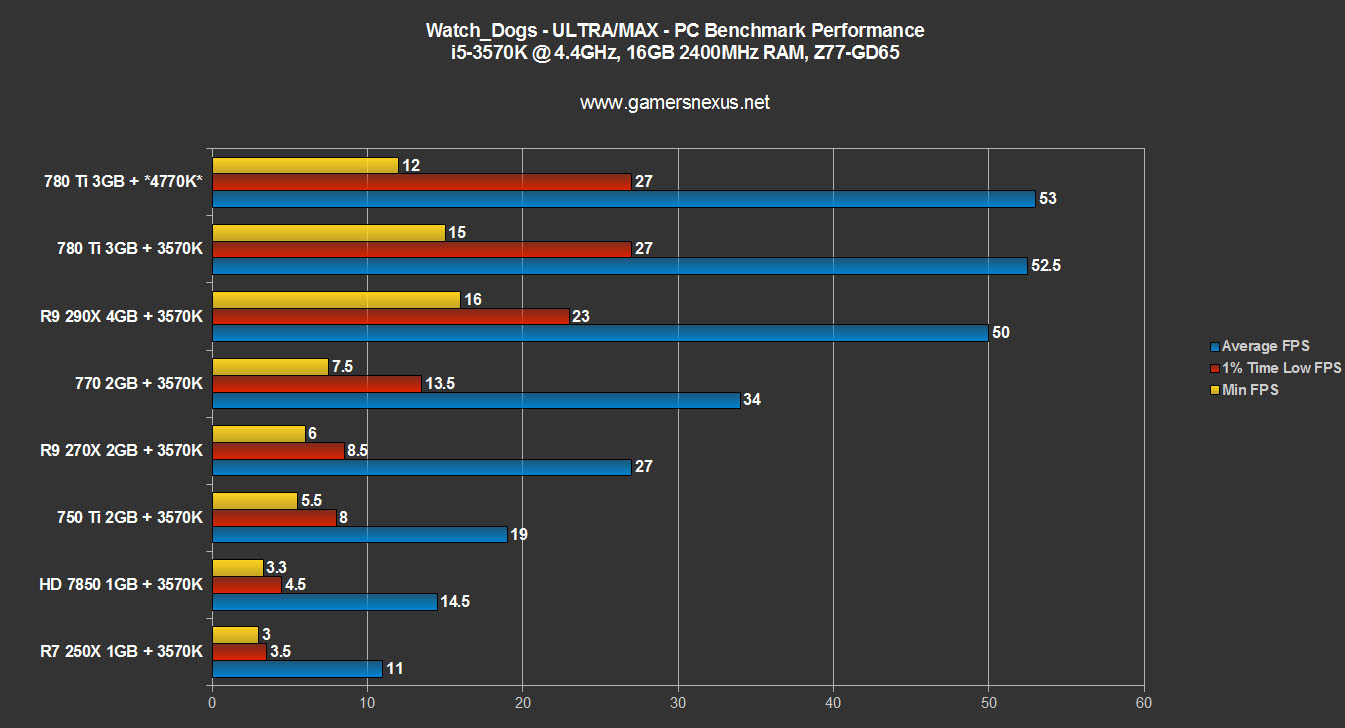

After previewing our initial results with the 3570K and 780 Ti, which struggled to maintain an FPS above 50 on Ultra, I decided to throw the 4770K into the mix with the same card. The result: barely 1 FPS of difference across the board. If the CPU is the throttle, it's a damn big throttle; I don't have X79 systems available for further validation, but we're exiting real-world testing at that point anyway. I tested the 4770K with a few other cards/settings and saw similarly uninteresting results.

I can guarantee that the 780 Ti is most certainly not throttling us here, being that it is a $700+ video card, and the 4770K is a top-of-the-line CPU as far as the mainstream consumer market goes. Performance sort of asymptotes as we approach the high-end cards -- normally we see a more linear progression. If those two components together can't output 60FPS, I am inclined to believe that Watch Dogs has significant underlying optimization and performance limitations that are on the side of the game's developers. Further driver releases may unlock more potential, but there's something strange going on within Watch Dogs that is limiting performance.

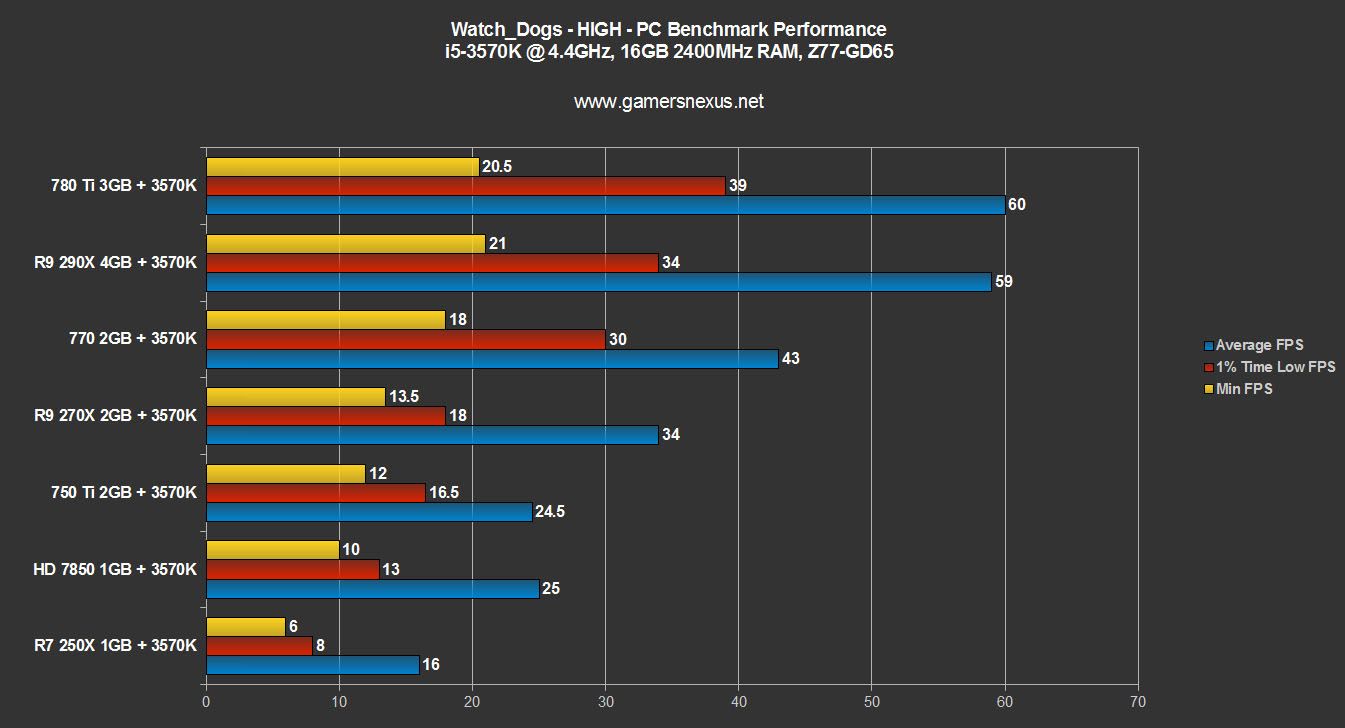

The game just doesn't look good enough to warrant such a restrictive output. Even dropping down to 'high' settings across the board, including texture resolution, we still hover right around 60FPS on the 780 Ti. The 4GB R9 290X looms behind. The rest of the GPUs are positioned as natural order would demand.

Now, the charted tests were conducted using some preset variables and aren't necessarily representative of optimal settings. I would recommend that you read some of the above info on different settings and attempt tweaking those to produce better performance. In testing, I found that opting for Temporal SMAA over MSAA 4x with high settings raised average FPS from 24.5 to 39, an increase of roughly 59%. This shows that a few key tweaks will allow high/ultra hybrid settings while dropping more taxing items to lower tiers.

But still, the limited performance and overall stuttering of the game is questionable. I even attempted to eliminate a storage bottleneck by introducing RAID SSDs, but still, I saw occasional stutters / visual lag when moving around on foot.

Conclusion: What's the best video card for Watch_Dogs?

Watch Dogs has serious optimization concerns. I can't comment on how the game actually plays right now since all I've done is run the same course for an entire night (and that's not exciting), but if you're set on buying it, my recommendation would be to just buy based on budget for this one. There's no decisive winner here. NVidia does tend to outperform AMD in a dollar-for-dollar matchup, but the globally handicapped performance leaves a lot to be desired and is relatively brand agnostic.

I will say that the GTX 750 Ti performed extremely well on medium settings and would do better with some card-specific tweaking. For buyers on a stricter budget, that'd be my go-to right now.

It seems to me that as long as you've got a 4670K/3570K or an equivalent or better CPU, you'll be just fine. No need to spring for a 4770K or other i7 CPU just for Watch Dogs, seeing as there was virtually 0 gain in all of my tests.

Either way, I foresee many optimization patches and driver updates on the horizon.

- Steve "Lelldorianx" Burke.