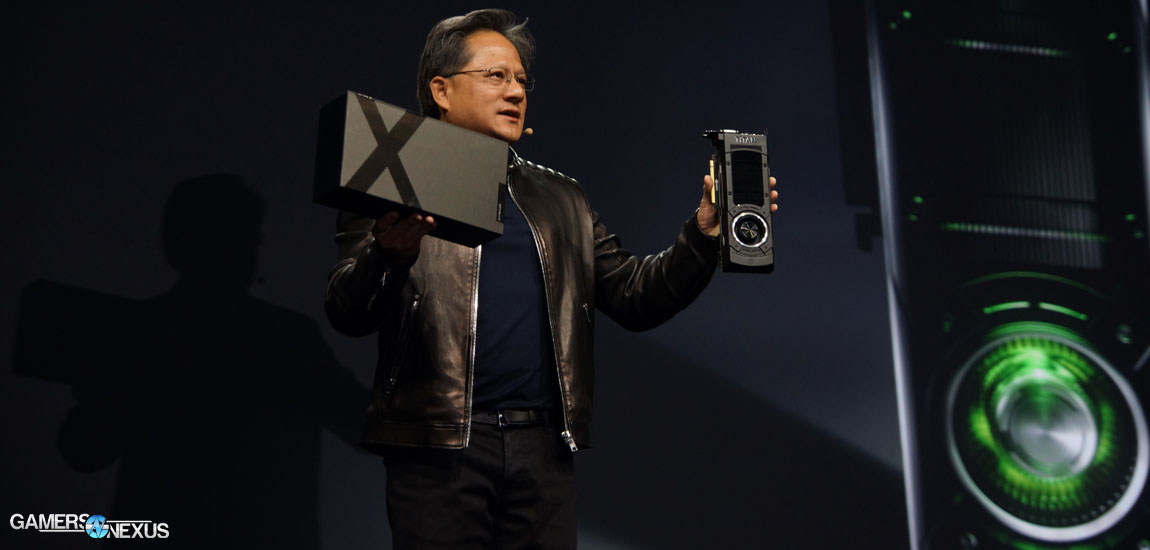

Our full GTX Titan X ($1000) FPS benchmarks are pending publication following nVidia's GPU Technology Conference (GTC), though CEO Jen-Hsun Huang's keynote today marks the official and immediate launch of the GTX Titan X video card. The keynote was live-streamed.

Moments ago, the new GTX Titan X's official specifications were unveiled at GTC 2015, including core count, GPU, and price. We've compared the Titan X's specs to the GTX 980 and 780 Ti below. The Titan X will be available at Newegg at some point, and should be available through NVIDIA's official store momentarily.

NVIDIA GeForce GTX Titan X vs. GTX 980 & 780 Ti Video Card Specs

| | GTX Titan X | GTX 980 | GTX 780 Ti |
|---|---|---|---|
| GPU | GM200 | GM204 | GK110 |
| Fab Process | 28nm | 28nm | 28nm |
| Texture Filter Rate (Bilinear) | 192GT/s | 144.1GT/s | 210GT/s |
| TjMax | 91°C | 95°C | 95°C |
| Transistor Count | 8B | 5.2B | 7.1B |
| ROPs | 96 | 64 | 48 |
| TMUs | 192 | 128 | 240 |
| CUDA Cores | 3072 | 2048 | 2880 |
| Base Clock (GPU) | 1000MHz | 1126MHz | 875MHz |
| Boost Clock (GPU) | 1075MHz | 1216MHz | 928MHz |
| GDDR5 Memory / Memory Interface | 12GB / 384-bit | 4GB / 256-bit | 3GB / 384-bit |
| Memory Bandwidth | 336.5GB/s | 224GB/s | 336GB/s |
| Memory Speed | 7Gbps | 7Gbps (9Gbps effective, per NVIDIA) | 7Gbps |
| Power | 1x 8-pin, 1x 6-pin | 2x 6-pin | 1x 6-pin, 1x 8-pin |
| TDP | 250W | 165W | 250W |
| Output | 3x DisplayPort, 1x HDMI 2.0, 1x Dual-Link DVI | 1x Dual-Link DVI, 1x HDMI 2.0, 3x DisplayPort 1.2 | 1x DVI-D, 1x DVI-I, 1x DisplayPort, 1x HDMI |
| MSRP | $1000 | $550 | $600 |
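The texture filter rate row can be reproduced from the TMU and base clock rows. Below is a small Python sketch, assuming the usual convention that each TMU filters one bilinear texel per clock; the `SPECS` dictionary and `texture_rate_gts` function are our own illustrative names, with values copied from the table.

```python
# Illustrative only: SPECS and texture_rate_gts are hypothetical names.
# Values are copied from the spec table: (TMU count, base clock in MHz).
SPECS = {
    "GTX Titan X": (192, 1000),
    "GTX 980": (128, 1126),
    "GTX 780 Ti": (240, 875),
}


def texture_rate_gts(tmus: int, base_mhz: int) -> float:
    """Bilinear texture filter rate in GT/s, assuming one texel per TMU per clock."""
    return tmus * base_mhz / 1000  # texels/clock x MHz, scaled to gigatexels/s


for card, (tmus, base_mhz) in SPECS.items():
    print(f"{card}: {texture_rate_gts(tmus, base_mhz):.1f} GT/s")
```

All three results line up with the table (192, 144.1, and 210GT/s), which suggests the filter-rate row is quoted at base clock rather than boost clock.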

As with our Titan Z post last year, it's worth highlighting that this is not primarily a gaming card, despite its branding. The card's main role is on the production and media side, though it can also be deployed for gaming. Unlike the Titan Z, however, the Titan X is a single-precision-focused card and drops full double-precision performance. Accordingly, Jen-Hsun Huang indicated that the Titan Z will remain the top-of-the-line card for double-precision performance.

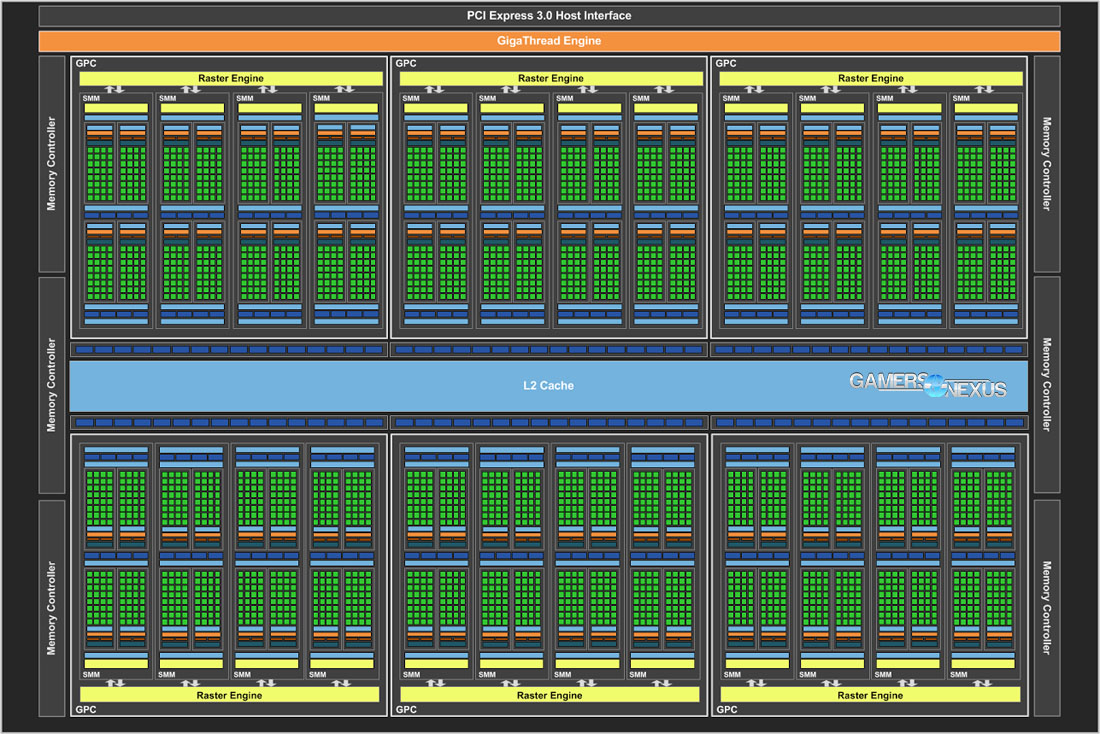

Architecture

The GTX Titan X hosts a GM200 GPU, an 8-billion-transistor Maxwell chip with 3072 CUDA cores. As noted in our GTX 980 review, Maxwell's cores are about 40% more powerful than Kepler's, so core counts are not directly comparable across generations.
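One rough way to see why raw core counts mislead across generations is to express Maxwell cores in "Kepler-equivalent" units. This sketch assumes a flat 1.4x per-core factor (the ~40% figure above) and ignores clock speeds, memory, and everything else, so treat it as back-of-envelope arithmetic rather than a performance model; the factor constant and function name are our own assumptions.

```python
# Hypothetical per-core factor: one Maxwell core ~ 1.4 Kepler cores (the ~40% figure).
MAXWELL_PER_CORE_FACTOR = 1.4


def kepler_equivalent_cores(cores: int, architecture: str) -> float:
    """Express a CUDA core count in rough Kepler-core units (illustrative only)."""
    if architecture == "maxwell":
        return cores * MAXWELL_PER_CORE_FACTOR
    if architecture == "kepler":
        return float(cores)
    raise ValueError(f"unknown architecture: {architecture}")


cards = [
    ("GTX Titan X", 3072, "maxwell"),
    ("GTX 980", 2048, "maxwell"),
    ("GTX 780 Ti", 2880, "kepler"),
]
for name, cores, arch in cards:
    print(f"{name}: {cores} cores ~ {kepler_equivalent_cores(cores, arch):.0f} Kepler-equivalent")
```

Under that assumption, the GTX 980's 2048 cores land at roughly 2867 Kepler-equivalent cores, nearly level with the 780 Ti's 2880 despite about 29% fewer physical cores. Clock speeds, which favor the GTX 980 here, are deliberately left out.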

For clarity, the GTX Titan X is a single-GPU card built on one GM200 chip. All of its memory is attached to that one chip, rather than split between two GPUs as on the dual-GPU Titan Z.

The GM200 uses the same display and video engines as the GM204 found in the GTX 980. Like the GTX 980, the GM200 processes double-precision math at 1/32 of its single-precision rate. The Titan X is listed as offering 7TFLOPS of single-precision performance.
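Peak single-precision throughput is conventionally estimated as CUDA cores x 2 FLOPs per clock (one fused multiply-add) x clock speed. The sketch below applies that convention to the Titan X, along with the 1/32 double-precision ratio. At the 1075MHz boost clock listed in the spec table it gives about 6.6TFLOPS, so NVIDIA's 7TFLOPS figure appears to assume clocks of roughly 1140MHz; that reading is our assumption, not something NVIDIA stated. Names in the code are illustrative.

```python
FLOPS_PER_CORE_PER_CLOCK = 2  # one fused multiply-add counts as two FLOPs
TITAN_X_CORES = 3072
DP_RATIO = 1 / 32  # double-precision rate relative to single precision


def peak_gflops(cores: int, clock_mhz: float) -> float:
    """Theoretical peak single-precision GFLOPS at a given clock."""
    return cores * FLOPS_PER_CORE_PER_CLOCK * clock_mhz / 1000


for label, mhz in [("base", 1000), ("typical boost", 1075)]:
    sp = peak_gflops(TITAN_X_CORES, mhz)
    print(f"{label} ({mhz}MHz): {sp:.1f} GFLOPS FP32, {sp * DP_RATIO:.1f} GFLOPS FP64")

# Clock that would be needed to reach the quoted 7 TFLOPS (7000 GFLOPS)
needed_mhz = 7000 / (TITAN_X_CORES * FLOPS_PER_CORE_PER_CLOCK) * 1000
print(f"7 TFLOPS implies ~{needed_mhz:.0f}MHz")
```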

Titan X operates on a 1000MHz base clock with a "typical boost clock speed" of 1075MHz.

Memory Subsystem

Memory is specified as 12GB of GDDR5 on a 384-bit memory interface, using six 64-bit memory controllers. The memory runs at a 7Gbps effective data rate for 336.5GB/s of memory bandwidth. That is approximately 50% greater than the GTX 980's 224GB/s and about the same as the 780 Ti's 336GB/s. Note that the 780 Ti's different architecture meant the GTX 980, even with a narrower memory interface, still outperformed it in almost all of the gaming use cases we've tested.
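The bus width and bandwidth figures follow from simple arithmetic: six 64-bit controllers make a 384-bit bus, and bandwidth is the bus width in bytes times the per-pin data rate. This Python sketch (variable names are ours) also backs out the data rate implied by the listed 336.5GB/s and checks the percentage comparisons.

```python
# Values from the article; variable names are illustrative.
CONTROLLERS = 6
CONTROLLER_WIDTH_BITS = 64
bus_bits = CONTROLLERS * CONTROLLER_WIDTH_BITS  # 6 x 64 = 384-bit interface
bus_bytes = bus_bits / 8  # bus width in bytes

titan_x_gbs = 336.5  # listed Titan X bandwidth, GB/s
gtx_980_gbs = 224.0
gtx_780ti_gbs = 336.0

# Per-pin data rate (Gbps) implied by the listed bandwidth
implied_gbps = titan_x_gbs / bus_bytes
# Bandwidth at a nominal 7Gbps, for comparison
nominal_gbs = bus_bytes * 7.0

pct_vs_980 = (titan_x_gbs / gtx_980_gbs - 1) * 100
pct_vs_780ti = (titan_x_gbs / gtx_780ti_gbs - 1) * 100

print(f"{bus_bits}-bit bus: {nominal_gbs:.1f}GB/s at 7Gbps, implied rate {implied_gbps:.2f}Gbps")
print(f"+{pct_vs_980:.1f}% vs GTX 980, +{pct_vs_780ti:.1f}% vs GTX 780 Ti")
```

A nominal 7Gbps gives exactly 336GB/s, so the listed 336.5GB/s implies a data rate slightly above 7Gbps (about 7.01Gbps). The gap to the 780 Ti is a fraction of a percent.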

The memory subsystem is of particular note for the Titan X. nVidia has heavily branded the new card for maximum (ultra) graphics settings in 4K games, a memory-intensive task that hammers bits down the pipe.

NVIDIA's GTX Titan X Performance

To showcase the Titan X's performance, NVIDIA demonstrated a real-time Epic Games render of a massive landscape, displaying upwards of 30 million polygons per second. We're told the output was rendered live on a single Titan X. Keynote attendees were also told that "deep learning" training times had dropped from ~43 days (on a 16-core Xeon CPU) to about 2-3 days.
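For scale, the quoted training times work out to roughly a 14x to 21x reduction. A trivial sketch using only the keynote numbers as reported above; the constant and function names are ours.

```python
# Training times as reported from the keynote, in days.
CPU_DAYS = 43  # 16-core Xeon CPU
GPU_DAYS_RANGE = (2, 3)  # "about 2-3 days"


def speedup(before_days: float, after_days: float) -> float:
    """How many times faster the later run completes."""
    return before_days / after_days


low = speedup(CPU_DAYS, max(GPU_DAYS_RANGE))
high = speedup(CPU_DAYS, min(GPU_DAYS_RANGE))
print(f"~{low:.1f}x to ~{high:.1f}x faster")
```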

Additional Architecture Notes

The GTX 980 debuted much of the technology that feeds into the Titan X, including software solutions and game graphics settings. MFAA (Multi-Frame Sampled Anti-Aliasing) and PhysX received additional marketing effort alongside the launch of the Titan X, though readers of our GTX 980 review will already be well-acquainted with the terminology.

Asynchronous Time Warp, also introduced with the 980, was given note in the Titan X unveil presentation as well. This is perhaps a slight competitive jab at AMD, which introduced its own LiquidVR solution at GDC, also aimed at reducing latency in VR.

Cooling & Chassis

The Titan X uses a copper vapor chamber to cool the GM200, paired with a more standard dual-slot aluminum heatsink for dissipation. For its fan, the Titan X keeps the "squirrel-cage" blower found on all higher-end nVidia reference designs in recent years.

Functionally, nVidia has opted out of a backplate on the Titan X, telling the press that this ensures maximum airflow to the card in multi-card configurations. The GTX 980 reference card launched with a backplate that had a removable segment for increased airflow; the Titan X drops the backplate entirely.

nVidia has also changed the look for the Titan X, coloring its aluminum enclosure black instead of the original Titan's silver/alloy finish.

Power & Titan X Overclocking

The Titan X is rated for a 250W TDP and draws power from the PSU through one 8-pin and one 6-pin connector. The GM200 GPU on a reference Titan X is fed by a 6+2-phase VRM, affording stability when overclocking. The maximum power target is 110%, allowing up to 275W for overclocking.

nVidia's Titan X uses polarized capacitors and molded inductors to reduce board noise and coil whine.

nVidia says the Titan X has reached 1.4GHz overclocks on the reference cooler in internal testing. We will validate this with our own benchmarks once home from GTC.
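The power and clock figures in this section translate into headroom as follows. A minimal sketch using only the numbers stated above; the 1.4GHz value is nVidia's internal result, not our measurement, and the variable names are ours.

```python
TDP_W = 250
MAX_POWER_TARGET_PCT = 110  # maximum power target, percent of TDP
BASE_MHZ = 1000
BOOST_MHZ = 1075  # "typical boost clock speed"
REPORTED_OC_MHZ = 1400  # nVidia's internal overclocking result, not ours

# Power ceiling at the maximum power target
power_limit_w = TDP_W * MAX_POWER_TARGET_PCT / 100

# Reported overclock relative to the stock clocks, in percent
oc_over_base_pct = (REPORTED_OC_MHZ - BASE_MHZ) * 100 / BASE_MHZ
oc_over_boost_pct = (REPORTED_OC_MHZ - BOOST_MHZ) * 100 / BOOST_MHZ

print(f"Power ceiling: {power_limit_w:.0f}W")
print(f"1.4GHz is +{oc_over_base_pct:.1f}% over base, +{oc_over_boost_pct:.1f}% over typical boost")
```

In other words, the power target adds only 25W of headroom, while the reported clock is about 30% above the typical boost, which is part of what our own testing will need to reconcile.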

For more GTC panels and Titan X coverage, be sure to subscribe to our YouTube channel (including edits of the keynote) and follow our Twitter and Facebook accounts. This article will be updated with additional graphics after 12PM PST today.

- Steve "Lelldorianx" Burke.