Battlefield 1 marks the arrival of another title with DirectX 12 support, sort of. The game still supports DirectX 11, and thus Windows 7 and 8, but it makes an effort to move DICE and EA toward the new world of low-level APIs. That move comes at a cost, though: our testing of Battlefield 1 uncovered frametime variance issues under DirectX 12 on both nVidia and AMD devices, which can be resolved by reverting to DirectX 11. We'll explore that in this content.

In today's Battlefield 1 benchmark, we're strictly looking at GPU performance under DirectX 12 and DirectX 11, covering the recent RX 400 series, GTX 10 series, GTX 9 series, and RX 300 series GPUs. Video cards tested include the RX 480, RX 470, RX 460, R9 390X, and R9 Fury X from AMD and the GTX 1080, 1070, 1060, 970, and 960 from nVidia, plus a couple of others. We may look at CPU performance separately, but not today.

As always, this BF1 benchmark comes with an extensive testing methodology, fully detailed in the methodology section below. Please check that section for any questions about drivers, test tools, measurement methodology, or GPU choices. Note also that, as with all Origin titles, we were limited to five device changes per game code per day (24 hours). We had three codes, which allowed us up to 15 device tests per 24-hour period.

Battlefield 1 Settings Explained

Vertical Sync: See our V-Sync entry in our specs dictionary. Disabling this removes the FPS lock to the display's refresh rate. If you're experiencing a 60FPS lock, V-Sync is likely why.

Field of View: Defines the viewable area (horizontally) of the screen. Increasing this can distort / stretch graphics, but provides greater peripheral view. We recommend increasing from the 55 default.

Dx12 Enabled: Toggles the new-ish DirectX 12 low-level API. Read our benchmarks for more on this. You will lose overlay support for some software.

Resolution Scale: Scaling percentage multiplied against the resolution. If you want to render at 4K and output to a 1080p display, perhaps as the owner of a GTX 1080 or similar, this is a good way to do it. Leave this at 100% so that render resolution matches display resolution.

UI Scale Factor: Scales the size of the UI. Useful for UltraWides.

GPU Memory Restriction: Disable this for benchmarking. When enabled, the game dynamically lowers quality if its VRAM consumption grows too aggressive for the card's available memory.

Graphics Quality: Preset options for the below settings. Spans Low, Medium, High, Ultra, and Custom.

Texture Quality: Higher quality textures increase the perceived depth and grit in cloth, gun damage, rocks, and anything that's textured, which is almost everything. Texture quality impacts VRAM consumption most heavily, so owners of 2GB cards should be wary.

Texture Filtering: Anisotropic filtering. Has little impact on performance, but reduces blurriness on textures as they recede toward their vanishing point.

Lighting Quality: Light and shadow quality. Impacts crepuscular rays and the fineness of shadow edges.

Effects Quality: We are not sure if this impacts blood decals in addition to effects (smoke/gas). The game's description is exceptionally vague.

Post Process Quality: Affects the filter effects applied after the raster, render, and lighting stages of the pipeline.

Mesh Quality: Impacts the polygon density of meshes on objects, e.g. rocks or tanks. Lower settings will increase 'blockiness,' but improve performance by reducing geometric complexity.

Terrain Quality: The exact impact is presently unclear; it likely affects the smoothness of terrain detail and jaggies.

Undergrowth Quality: Modifies grass and foliage pop-in and visibility.

Antialiasing Post: Temporal anti-aliasing (TAA) and FXAA are the only options.

Ambient Occlusion: Impacts shading between objects where edges meet.
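To illustrate the Resolution Scale setting described above, here is a minimal Python sketch of the arithmetic. It assumes the percentage multiplies each axis (width and height) independently, so 200% on a 1920x1080 display renders 3840x2160; that per-axis interpretation is our assumption for illustration, and the function names are hypothetical. It also shows why supersampling is expensive: the number of rendered pixels grows with the square of the scale factor.

```python
def render_resolution(width: int, height: int, scale_percent: float) -> tuple[int, int]:
    """Internal render resolution for a given resolution-scale percentage.

    Assumption: the scale is applied to each axis, not to the total pixel count.
    """
    factor = scale_percent / 100.0
    return round(width * factor), round(height * factor)


def pixel_load_ratio(scale_percent: float) -> float:
    """Pixels rendered relative to 100% scale (square of the per-axis factor)."""
    factor = scale_percent / 100.0
    return factor * factor


# A 1080p display rendering at 200% scale:
print(render_resolution(1920, 1080, 200))  # (3840, 2160)
print(pixel_load_ratio(200))               # 4.0
```

In other words, a 200% scale asks the GPU for four times the pixels of native 1080p, which is why it only makes sense on high-end cards.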

Battlefield 1 Maximum Settings (4K Ultra on GTX 1080)

The above video shows some of our gameplay while learning Battlefield 1's settings. This includes our benchmark course (a simple walk through Avanti Savoia), our testing in Argonne Forest for a quick look at FPS during 64-player multiplayer matches, and a brief tab through the graphics settings. The next embed contains the video version of this benchmark, though the written version carries a little more information. Both options are here.

Battlefield 1 PC Graphics Card Benchmark [Video]

Test Methodology

We tested using our GPU test bench, detailed in the table below. Our thanks to supporting hardware vendors for supplying some of the test components.

AMD's 16.10.2 drivers contain largely the same driver optimizations as the AMD 16.10.1 hotfix. Here's a note from AMD's driver team on this matter:

“We have been optimizing ahead of launch for BF1 since the beta, so yes, 16.10.1 is already fairly optimized. 16.10.2 will add a couple of quality fixes and may add another +1-2% of performance in DX12 cases, but yes, the direction was that 16.10.1 would be good enough to perform testing for early access since we’ve done a lot of optimizations already.”

Because our testing began a few days ago, we used AMD's 16.10.1 drivers (which contain Battlefield 1 optimizations) for AMD GPUs. For Pascal and Maxwell GPUs, we used nVidia's 375.57 driver package (press drivers), which also contains Battlefield 1 optimizations.

| GN Test Bench 2015 | Name | Courtesy Of | Cost |
|---|---|---|---|
| Video Card | This is what we're testing! | - | - |
| CPU | Intel i7-5930K CPU 3.8GHz | iBUYPOWER | $580 |
| Memory | Corsair Dominator 32GB 3200MHz | Corsair | $210 |
| Motherboard | EVGA X99 Classified | GamersNexus | $365 |
| Power Supply | NZXT 1200W HALE90 V2 | NZXT | $300 |
| SSD | HyperX Savage SSD | Kingston Tech. | $130 |
| Case | Top Deck Tech Station | GamersNexus | $250 |
| CPU Cooler | NZXT Kraken X41 CLC | NZXT | $110 |

Game settings were manually controlled for the DUT (device under test). All games were run at the presets defined in their respective charts, and our test courses are all manually conducted. For the bulk data below, the same easily repeatable test was conducted a minimum of three times per device, per setting, per API. This ensures data integrity and helps eliminate potential outliers. In the event of a performance anomaly, we conduct additional test passes until we understand what's going on. We disable G-Sync in NVIDIA's control panel for testing (and disable FreeSync for AMD, where relevant). Note that these results were all gathered with the newest drivers and the newest game patches, and may not be comparable to previous test results. Also note that results should not necessarily be compared directly between reviewers, as testing methodology may differ. The measurement tool alone can have a major impact.
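The repeat-and-verify approach described above can be sketched in a few lines of Python. This is a minimal illustration, not our in-house tooling: it averages one metric across at least three passes and flags any pass whose value strays from the median of all passes by more than a threshold, which would signal that more passes are needed. The 10% threshold and the function name are hypothetical choices for illustration.

```python
from statistics import mean, median

MIN_PASSES = 3  # minimum passes per device, per setting, per API


def summarize_passes(values: list[float], threshold: float = 0.10) -> dict:
    """Average one metric (e.g. AVG FPS or 0.1% low) across repeated passes.

    A pass is flagged when it deviates from the median of all passes by more
    than `threshold` (a hypothetical 10% by default).
    """
    if len(values) < MIN_PASSES:
        raise ValueError(f"need at least {MIN_PASSES} passes, got {len(values)}")
    mid = median(values)
    flagged = [i for i, v in enumerate(values) if abs(v - mid) / mid > threshold]
    return {"mean": mean(values), "flagged_passes": flagged}


# GTX 1080, Dx11, singleplayer 1440p/Ultra 0.1% lows from the raw-data table in this article:
print(summarize_passes([70, 72, 70]))
```

Using the median as the reference keeps a single bad pass from dragging the baseline toward itself, so the outlier stands out instead of hiding in the mean.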

We execute our tests with PresentMon via command line, a tool built by Microsoft and Intel to hook into the operating system and accurately fetch framerate and frametime data. Our team has built a custom, in-house Python script to extract average FPS, 1% low FPS, and 0.1% low FPS data (effectively 99th/99.9th percentile metrics). Each test pass runs for 30 seconds per repetition, with a minimum of 3 repetitions, giving an easily replicated test course for accurate results between cards, vendors, and settings. Our 1% low and 0.1% low testing methodology is explained in more depth in our separate video and text write-up on the subject.
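For readers who want to reproduce these metrics, here is a minimal Python sketch of one way to turn a list of frametimes (in milliseconds) into average FPS, 1% low, and 0.1% low. It is not our in-house script. It assumes the percentile interpretation mentioned above: the 1% low is the framerate equivalent of the 99th-percentile frametime, and the 0.1% low that of the 99.9th percentile, using a nearest-rank percentile. It also assumes average FPS is total frames divided by total time; other tools compute these values differently.

```python
import math


def percentile(sorted_values: list[float], pct: float) -> float:
    """Nearest-rank percentile of an ascending-sorted list (pct in 0-100)."""
    rank = max(1, math.ceil(pct * len(sorted_values) / 100))
    return sorted_values[rank - 1]


def frame_metrics(frametimes_ms: list[float]) -> dict:
    """Compute AVG FPS, 1% low FPS, and 0.1% low FPS from per-frame times in ms."""
    if not frametimes_ms:
        raise ValueError("no frames captured")
    ordered = sorted(frametimes_ms)  # ascending, so the slowest frames sit at the end
    avg_fps = 1000.0 * len(ordered) / sum(ordered)  # frames divided by elapsed seconds
    return {
        "avg_fps": avg_fps,
        "low_1pct_fps": 1000.0 / percentile(ordered, 99),
        "low_01pct_fps": 1000.0 / percentile(ordered, 99.9),
    }
```

Because the lows are read from the slow tail of the frametime distribution, a handful of long frames can pull them down sharply while the average barely moves.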

Windows 10 64-bit Anniversary Update was used for the OS.

Partner cards were used where available and tested for out-of-box performance. Frequencies listed are advertised clock-rates. We tested both DirectX 11 and DirectX 12.

Please note that we use onPresent to measure framerate and frametimes. Reviewers must decide whether to use onPresent or onDisplay when testing with PresentMon. Neither is necessarily correct or incorrect; it comes down to the type of data the reviewer wants to work with and analyze. We look at frames on Present. Others may use onDisplay, which would produce different results (particularly at the low end). If comparing results, make sure you understand what you're comparing against, and ensure that the same tools were used for analysis. A frame does not necessarily equal a frame between software packages. We trust PresentMon as the immediate future of benchmarking, particularly with its open source infrastructure built and maintained by Intel and Microsoft.
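To illustrate the onPresent/onDisplay choice, here is a minimal Python sketch that reads frame intervals from a PresentMon CSV log. It assumes the column names written by PresentMon 1.x releases: MsBetweenPresents for present-to-present intervals and MsBetweenDisplayChange for display-to-display intervals. Check the header row of your own capture, since column names can change between versions. The file name and process name in the usage comment are hypothetical.

```python
import csv
from typing import Iterable, Optional

PRESENT_COLUMN = "MsBetweenPresents"       # present-to-present intervals (onPresent-style)
DISPLAY_COLUMN = "MsBetweenDisplayChange"  # display-to-display intervals (onDisplay-style)


def read_intervals(lines: Iterable[str], column: str = PRESENT_COLUMN,
                   application: Optional[str] = None) -> list[float]:
    """Return frame intervals in ms from PresentMon CSV text, optionally for one process."""
    intervals = []
    for row in csv.DictReader(lines):
        if application and row.get("Application") != application:
            continue  # ignore other processes captured in the same log
        raw = (row.get(column) or "").strip()
        if not raw:
            continue  # skip blank cells rather than treating them as intervals
        value = float(raw)
        if value > 0:  # skip zero entries instead of counting them as frames
            intervals.append(value)
    return intervals


# Usage (hypothetical file and process names):
# with open("bf1_capture.csv", newline="") as f:
#     on_present = read_intervals(f, PRESENT_COLUMN, application="bf1.exe")
```

Feeding the same capture through both columns and then through identical analysis code is the simplest way to see how much the two conventions diverge for a given run.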

Also note that we are limited in activations per game code: we can test 5 hardware components per code within a 24-hour period. With three codes, we can test a total of 15 configurations per 24 hours.
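The activation budget is simple arithmetic, but it drives test scheduling. Here is a minimal sketch, assuming (as a simplification) that every configuration consumes exactly one device change and that the allowance resets cleanly every 24 hours; the function name is hypothetical.

```python
import math


def windows_needed(configurations: int, codes: int = 3, changes_per_code: int = 5) -> int:
    """Number of 24-hour periods needed to test a set of hardware configurations."""
    if configurations <= 0:
        return 0
    per_window = codes * changes_per_code  # 3 codes x 5 changes = 15 per 24 hours
    return math.ceil(configurations / per_window)


print(windows_needed(15))  # 1
print(windows_needed(20))  # 2
```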

Battlefield 1 has a few critical settings that require tuning for adequate benchmarking. Except where otherwise noted, we disabled GPU Memory Restriction for testing, as this setting triggers dynamic quality scaling and creates unequal tests. We also set Resolution Scale to 100% so that render resolution matches display resolution. Field of View was changed to 80 degrees to better fit what a player would use, since the default 55-degree FOV is a little bit silly for competitive FPS players. FOV impacts FPS and should be accounted for if attempting to cross-compare results. V-Sync and adaptive sync are disabled. Quality presets are used as defined by chart titles. Game performance swings based on test location, map, and in-game events. We tested in the Italian Avanti Savoia campaign level for singleplayer and on Argonne Forest for multiplayer. You can view our test course in the separate video above.
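FOV values are only comparable when you know which axis they measure: some games define the slider horizontally, others vertically. As a worked example for cross-comparing the 55-degree default against our 80-degree setting, here is the standard conversion between vertical and horizontal FOV at a given aspect ratio, hfov = 2 * atan(tan(vfov / 2) * aspect). This sketch does not establish which axis Battlefield 1's slider uses; it only shows how far apart the two readings of the same number are on a 16:9 display. The function names are hypothetical.

```python
import math


def vertical_to_horizontal(vfov_deg: float, aspect: float = 16 / 9) -> float:
    """Horizontal FOV in degrees for a vertical FOV at the given aspect ratio (width / height)."""
    half = math.radians(vfov_deg) / 2
    return math.degrees(2 * math.atan(math.tan(half) * aspect))


def horizontal_to_vertical(hfov_deg: float, aspect: float = 16 / 9) -> float:
    """Vertical FOV in degrees for a horizontal FOV at the given aspect ratio (width / height)."""
    half = math.radians(hfov_deg) / 2
    return math.degrees(2 * math.atan(math.tan(half) / aspect))


for fov in (55, 80):
    print(f"{fov} degrees read as vertical = {vertical_to_horizontal(fov):.1f} horizontal; "
          f"read as horizontal = {horizontal_to_vertical(fov):.1f} vertical")
```

The conversion goes through the tangent of the half-angle rather than scaling the angle directly, because the screen width, not the angle, is what scales with aspect ratio.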

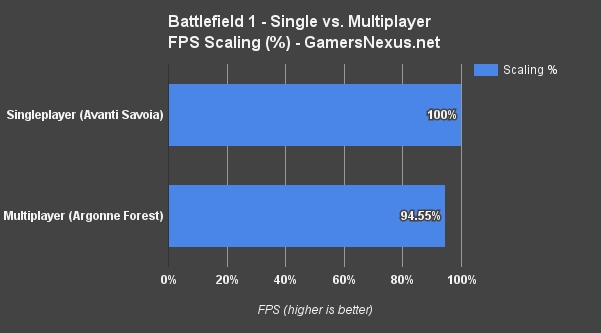

The campaign was used as our primary test platform, but we tested multiplayer to determine the scaling between singleplayer and multiplayer. Multiplayer is not a reliable test platform given our lack of control (pre-launch) over servers, tick rate, and network interference with testing. Thankfully, the two are actually pretty comparable in performance. FPS depends heavily on the map, as always, but even 64-player servers are not too abusive on the GPU, assuming the usual map arrangement where you never see everyone at once.

Where applicable, we used the console command gametime.maxvariablefps 0 to remove Battlefield 1's 200FPS framerate cap.

Also note that low-end CPUs would be valuable to test for DirectX 12 performance, given the API's reduced CPU overhead for draw calls. In our tests, however, Dx12's low-end frametime performance (the 1% and 0.1% lows) is bad enough that we're sticking to the recommendations made below, even for low-end CPU scenarios. We'll visit that topic soon enough, though. The Origin activation limitation has prevented us from analyzing CPU performance straight away.

Battlefield 1 – Multiplayer vs. Singleplayer FPS Scaling

Because the Italian campaign level was our primary test platform for a repeatable benchmark, we ran some multiplayer tests on the fly to compare against our singleplayer GPU performance analysis. Unlike our singleplayer tests, these multiplayer tests were not conducted with any particular test pattern; we wanted a wide sweep of real, in-game performance to better understand the game mode that will undoubtedly draw the most players. Here is some of that data:

MP 4K High vs. SP 4K High, raw data from GTX 1080 (Dx11):

| Run | MP (Argonne) AVG FPS | MP 1% LOW | MP 0.1% LOW | SP (Avanti Savoia) AVG FPS | SP 1% LOW | SP 0.1% LOW |
|---|---|---|---|---|---|---|
| 1 | 67 | 57 | 55 | 69 | 60 | 54 |
| 2 | 71 | 59 | 55 | 69 | 60 | 58 |
| 3 | 72 | 55 | 52 | 69 | 61 | 58 |
| 4 | 72 | 61 | 58 | - | - | - |

And 1440p:

MP 1440p Ultra vs. SP 1440p Ultra, raw data from GTX 1080 (Dx11):

| Run | MP (Argonne) AVG FPS | MP 1% LOW | MP 0.1% LOW | SP (Avanti Savoia) AVG FPS | SP 1% LOW | SP 0.1% LOW |
|---|---|---|---|---|---|---|
| 1 | 116 | 85 | 77 | 115 | 85 | 70 |
| 2 | 110 | 87 | 79 | 116 | 94 | 72 |
| 3 | 105 | 82 | 69 | 116 | 92 | 70 |
| 4 | 110 | 83 | 75 | - | - | - |

Performance scales fairly consistently between our two test cases. Note that, as always, specific game events or a sudden jump in geometric complexity, like multiple tanks on screen at a high LOD, will reduce FPS. Conversely, flying through empty skies or looking at low-density objects will raise FPS. For our tests, we have a planned mix of geometry, effects, crepuscular rays, and in-game events.

The data above shows the scaling between our non-repeated, real multiplayer gameplay and the repeated singleplayer scenario. We're seeing about 5% scaling between the two, with multiplayer performing worse. To estimate likely multiplayer performance on each device, subtract about 5% from our singleplayer results.
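As a quick check of that rule of thumb, here is a minimal Python sketch that compares the mean multiplayer and singleplayer averages from the raw-data tables above and applies a flat multiplayer penalty to a singleplayer result. Run against those tables, the 1440p/Ultra multiplayer runs come out about 4.7% slower, in line with the ~5% figure, while the 4K/High multiplayer runs actually averaged about 2% higher than singleplayer. With only three or four unpatterned runs per mode, treat the 5% penalty as a rough estimate. The function names are hypothetical.

```python
from statistics import mean

# Dx11 average FPS on the GTX 1080, copied from the raw-data tables above.
DATA = {
    "4K High": {"mp": [67, 71, 72, 72], "sp": [69, 69, 69]},
    "1440p Ultra": {"mp": [116, 110, 105, 110], "sp": [115, 116, 116]},
}


def mp_delta_percent(mp: list[float], sp: list[float]) -> float:
    """Percent difference of mean multiplayer AVG FPS relative to mean singleplayer AVG FPS."""
    return (mean(mp) / mean(sp) - 1) * 100


def estimate_multiplayer_fps(sp_fps: float, penalty: float = 0.05) -> float:
    """Apply a flat multiplayer penalty (about 5% by default) to a singleplayer result."""
    return sp_fps * (1 - penalty)


for name, runs in DATA.items():
    delta = mp_delta_percent(runs["mp"], runs["sp"])
    print(f"{name}: multiplayer vs. singleplayer {delta:+.1f}%")
```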

And that sort of spoils some of our performance results, so let's jump right into DirectX 11 at 4K with High settings.

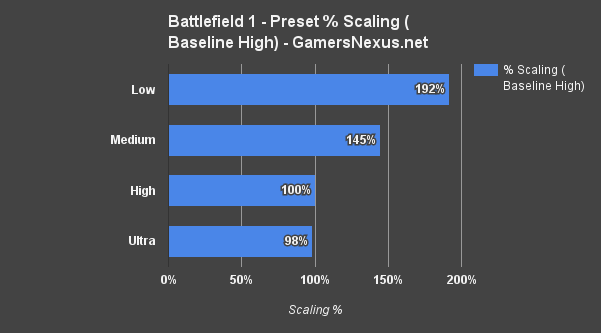

Battlefield 1 – Low, Medium, High, & Ultra FPS Scaling

We prioritized “Ultra” and “High” for our tests, but if you'd like to roughly extrapolate for your device, the chart above shows the scaling between settings for a couple of cards, using “High” as the baseline.

Battlefield 1 4K Benchmark with DirectX 11 – GTX 1080 vs. GTX 1070, R9 Fury X, RX 480

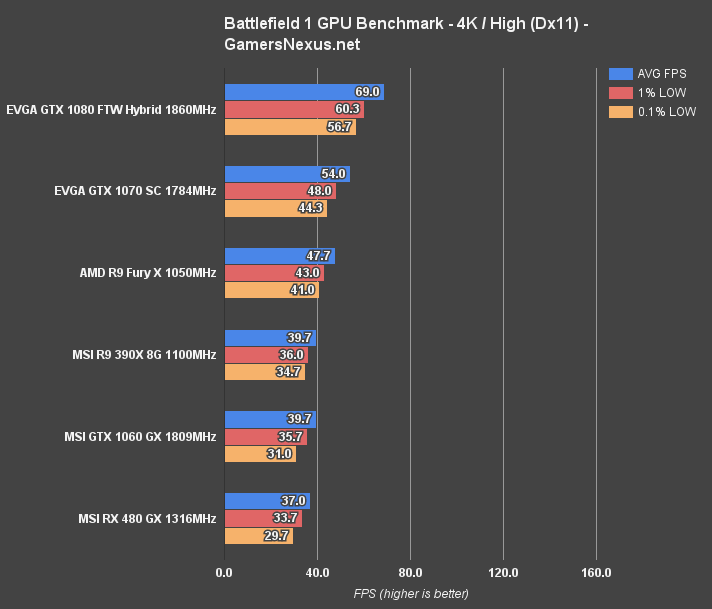

We settled on “High” for 4K testing, although “Ultra” really only decreased FPS by a few points. EVGA's GTX 1080 FTW Hybrid sits at the top of the bench, pushing an average FPS of 69 with 1% lows of 60 and 0.1% lows of 56.7, an indicator of consistent frametimes with low latency and variance.

The GTX 1070 SC operates at an average of 54FPS at 4K/High, with lows dipping to 44FPS. Next, the Fury X runs 47.7FPS AVG, followed by the MSI R9 390X and GTX 1060 Gaming X cards. The 390X and 1060 Gaming X are effectively tied in their averages and 1% lows, with the R9 390X imperceptibly favored in 0.1% lows. The RX 480 8GB Gaming X operates at 37FPS AVG at 4K/High. These three cards (the 1060, 390X, and 480) border on unplayable for a fast-paced shooter. If you really wanted 4K gaming with these devices, which seems an odd fit anyway, you'd want to drop settings closer to Medium.

Let's look at how the same settings perform under Dx12.

Battlefield 1 4K Benchmark – DirectX 12 vs. DirectX 11

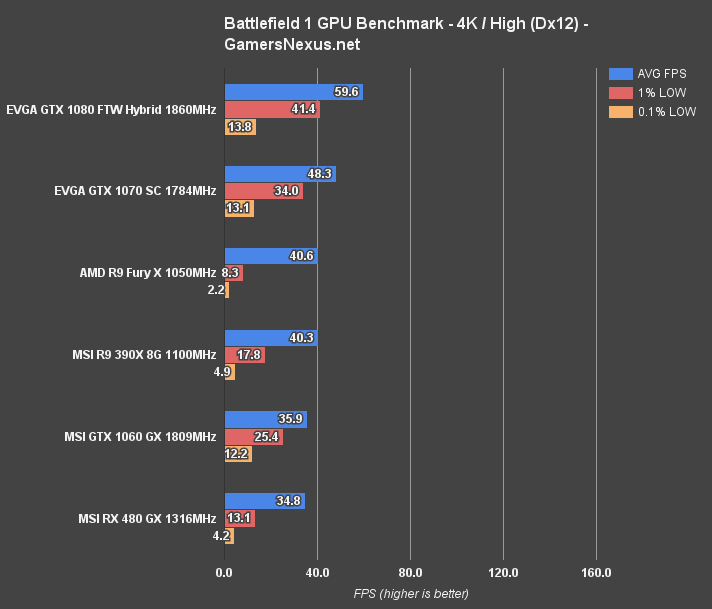

Here's 4K/High (same settings and scenario as above) with DirectX 12 rather than Dx 11.

The GTX 1080 is now operating at 59.6FPS AVG, or about 10FPS slower than in Dx11, and with significantly worse 1% and 0.1% low values than in DirectX 11. This is precisely why we use this testing methodology – those stutters would not appear in the data if we just used averages (they'd be smoothed over), and the stutters are perceptible in gameplay as sudden hitches between frame delivery. In this instance, Dx11 should provide smoother performance.
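A synthetic example makes the point about averages concrete. The frametimes below are invented for illustration, not measured: a 30-second run of 3,000 frames at a steady 10ms, versus the same run with five 100ms hitches. The sketch uses the percentile reading of the lows (the framerate equivalent of the 99th and 99.9th percentile frametimes), which is our assumption about how such metrics are commonly computed; the function name is hypothetical.

```python
import math


def avg_and_lows(frametimes_ms: list[float]) -> tuple[float, float, float]:
    """Return (AVG FPS, 1% low FPS, 0.1% low FPS) using nearest-rank percentiles."""
    ordered = sorted(frametimes_ms)
    n = len(ordered)

    def pct(p: float) -> float:
        # Nearest-rank percentile of the ascending frametime list.
        return ordered[max(1, math.ceil(p * n / 100)) - 1]

    return 1000 * n / sum(ordered), 1000 / pct(99), 1000 / pct(99.9)


smooth = [10.0] * 3000                   # steady 100FPS for 30 seconds
stutter = [10.0] * 2995 + [100.0] * 5    # same run with five 100ms hitches

for label, run in (("smooth", smooth), ("stutter", stutter)):
    avg, low1, low01 = avg_and_lows(run)
    print(f"{label}: AVG {avg:.1f}, 1% low {low1:.1f}, 0.1% low {low01:.1f}")
```

The five hitches shave only about 1.5% off the average, but the 0.1% low falls from 100FPS to 10FPS, which matches the kind of hitching a player actually feels.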

Looking at the next card down, the GTX 1070 sits at about 48FPS average with similarly low 0.1% performance, trailed next by the R9 Fury X and R9 390X. The Fury X has the worst frametime performance on this chart. We suspect that this is at least partially a result of its more limited VRAM, though the 390X isn't much better.

We believe some of these frametime hits result from Dx12 shifting memory management responsibility onto the ISVs (the game developers) rather than the IHVs (the hardware vendors and their drivers), and ISVs are still learning how to handle memory allocation for games. There are likely other optimization issues in there, too, but we don't know the full extent of how Battlefield 1 was built for Dx12.

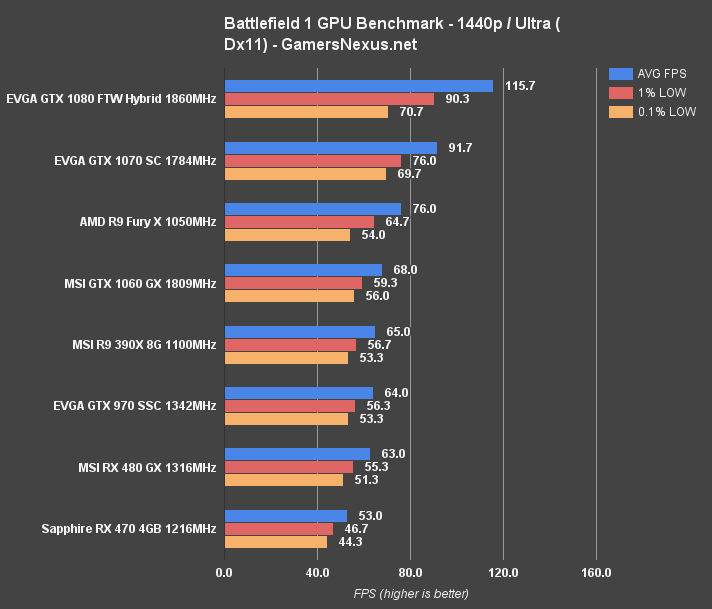

Battlefield 1 1440p Benchmark (Dx 11) – RX 480 vs. GTX 1060, 1070, More

Let's move back to DirectX 11 with 1440p and Ultra settings.

The GTX 1080 FTW Hybrid is chart-topping with nearly 120FPS, for those of you with 120Hz displays. The card's running 1% and 0.1% low values north of 60FPS, and is followed most immediately by the GTX 1070 SC at 91.7FPS AVG, also with tightly timed lows. Really, almost everything we're showing on this chart is fairly playable at 1440p with Ultra settings, including the RX 480 Gaming X. The RX 470 isn't really the best option, but you could tune settings down to High to hit that 60FPS marker if really desired. Granted, most RX 470 owners are probably not running on 1440p displays.

Anyway, the R9 Fury X outpaces the GTX 1060 by about 8FPS, or about 12%, with the R9 390X following the GTX 1060 by a few frames. The cards performing in the 60s will occasionally dip when major in-game events happen, but should generally remain playable at 1440p.

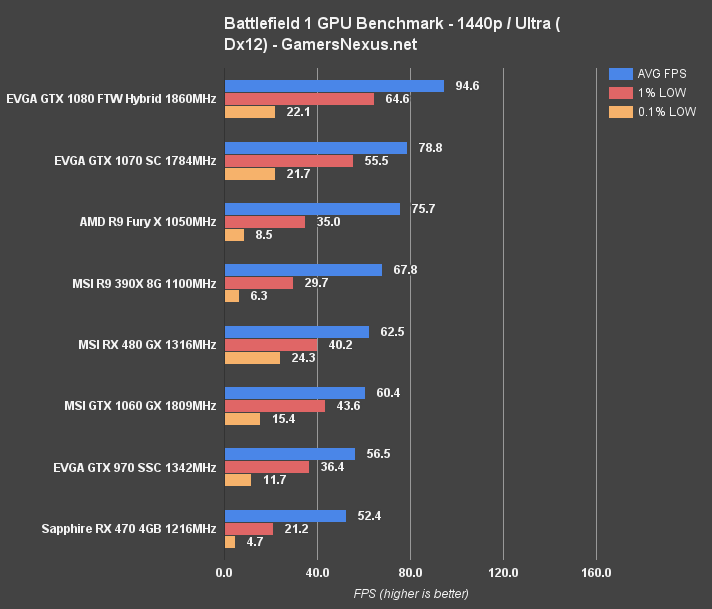

Battlefield 1 1440p Benchmark (Dx 12) – RX 480 vs. RX 470, GTX 1060, GTX 1070

Bouncing back to Dx12 at 1440p/Ultra, we now see the GTX 1080 FTW Hybrid is performing about 22% worse in its averages than with Dx11. The lows also take a big hit, dipping down to 22FPS in the worst few frames of the benchmark. This is true across the board, with nVidia holding higher lows than AMD, technically, but both are disagreeable. The fact that nVidia is the “least worst” doesn't win it any points, in this particular test. Dx12 performance remains poor all the way down the stack when looking at onPresent intervals.

Not every test run exhibits the variance, but it happens often enough to be seen during gameplay. If you play multiplayer long enough, some of those 'hitches' eventually smooth over, but there are still detectable stutters. The RX 480, for instance, had one test pass with 1% low values at 61.5FPS and 0.1% low values at 60.9FPS, both completely agreeable, though its other test passes each exhibited at least one or two noticeable stutters, outputting 6FPS 0.1% lows and 30FPS 1% lows.

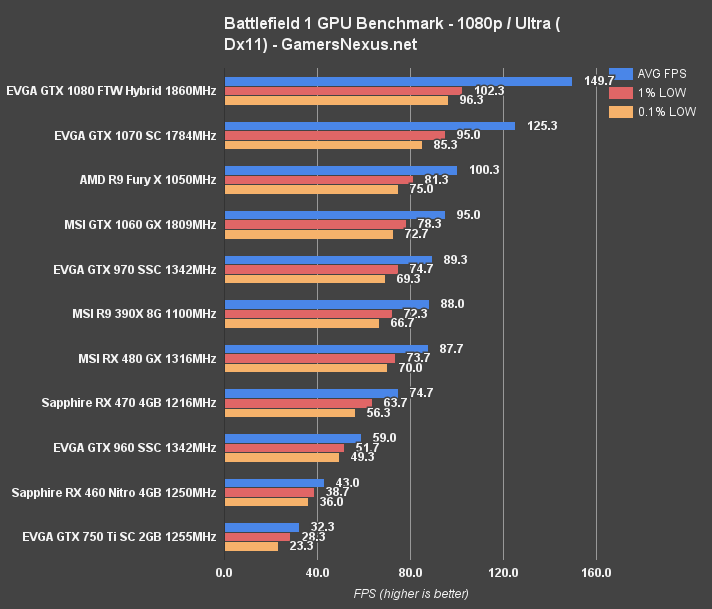

Battlefield 1 1080p Benchmark (Dx 11) – GTX 970 vs. GTX 960, RX 470, 460, etc.

1080p retains its domination over the display market, though that'll shift toward 1440p as current-gen GPUs begin saturating the market. With DirectX 11 and Ultra settings, 1920x1080 debuts larger charts for us, containing everything down to the GTX 960, GTX 750 Ti, RX 460, and RX 470.

The GTX 1080 FTW Hybrid begins to approach the game's default 200FPS framerate cap (before console modifications), trailed by the GTX 1070 at 125FPS AVG, then the R9 Fury X at 100FPS AVG. None of these is really the “right” card for 1080p gaming, given their output at 1440p and 4K. All devices on the bench, from both AMD and nVidia, have tight frametimes, though the 750 Ti struggles enough to mandate a settings reduction closer to Low/Medium. The RX 460 would operate reasonably at Medium, for the most part.

As for cards closer to the baseline 60FPS for 1080p/Ultra, you'd be able to get away with an RX 470 pretty easily with its 75FPS AVG, or with a GTX 960 right around 60FPS.

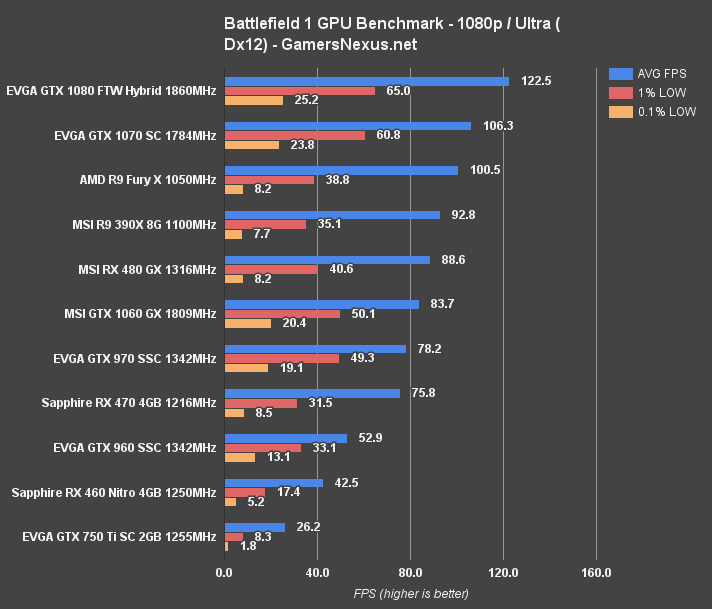

Here's a look at the Dx12 charts – more of the same.

Best GPUs for Battlefield 1

We're happy to report that frametime variance is not a major distinction point between nVidia and AMD with Battlefield 1. That's the good news. With DirectX 11, both vendors have tightly timed low performance relative to the average FPS. Card hierarchy seems to generally make sense and reflect the prices – another rarity.

Unfortunately, DirectX 12 is variable on each vendor, with generally poor low performance values and occasional stuttering. The stutters are buried a bit as multiplayer sessions are stretched over a longer period of time, but still present. Averages are effectively identical for AMD between Dx12 and Dx11 (when using a high-end CPU), and they're better for nVidia with Dx11 than Dx12. Dx12 also has more variance overall, between both vendors, and so we're basing this conclusion on Dx11 performance. Again, even without the variance, the average framerate is effectively equal on AMD and is slightly negatively scaled on nVidia. This will make the performance and dollar arguments a little bit less complicated.

For 1080p gaming with Ultra settings, owners of last gen's GTX 960 and R9 380X will be able to play reasonably well, with a few small tweaks down to “High” if 60FPS is strongly desired. The RX 480 (~$260) and GTX 1060 (~$265) are both more than capable of performing at 1080p, with the RX 480 a few frames ahead in Dx12, though with worse stutters, and the GTX 1060 ahead in Dx11. Both these ~$260 cards also scale well to 1440p and are fully capable of 1440p at Ultra settings, where we see framerates north of 60FPS for each vendor.

If you wanted to go cheaper for 1080p, either the RX 470 (if it ever hits $180) or the RX 480 4GB ($200) can handle 1080p/Ultra at more than 70FPS AVG. NVidia's GTX 1050 and 1050 Ti are also worth considering, but we don't have publishable results for those cards just yet.

4K/High runs well on a GTX 1070 ($390) and reasonably on an R9 Fury X, if you already own one.

Aside from the Dx12 variance, the game is fairly well optimized overall and each vendor has game-ready, day-one drivers. We'd suggest looking at your ideal card's performance in other games before a purchase, but 1080p is done easily with an RX 470 and, one could speculate, a GTX 1050 Ti. The GTX 1060 and RX 480 handle 1080p easily, and both cards can run 1440p if desired. 4K demands a 1070 or 1080 from nVidia (if sustaining high/ultra settings) or an R9 Fury X from AMD.

Editorial, Test Lead: Steve “Lelldorianx” Burke

Test Technician: Andie “Draguelian” Burke

Video Producer: Andrew “ColossalCake” Coleman