The Witcher 3 Texture Quality Comparison – VRAM Usage & FPS Benchmarks


During the GTA V craze, we posted a texture resolution comparison that showcased how drastically texture settings change the game's visuals. The GTA content also revealed VRAM consumption and the effectively non-existent impact of the texture setting on framerates. The Witcher 3 has a similar “texture quality” setting in its graphics options, something we briefly mentioned in our Witcher 3 GPU benchmark.

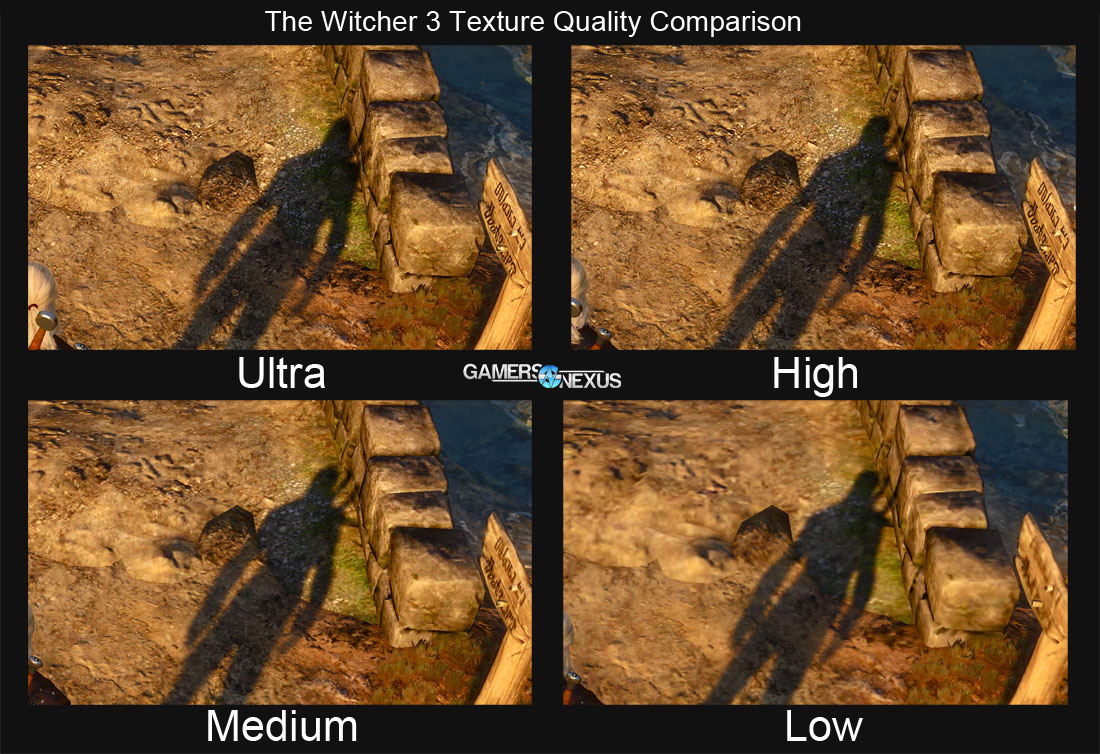

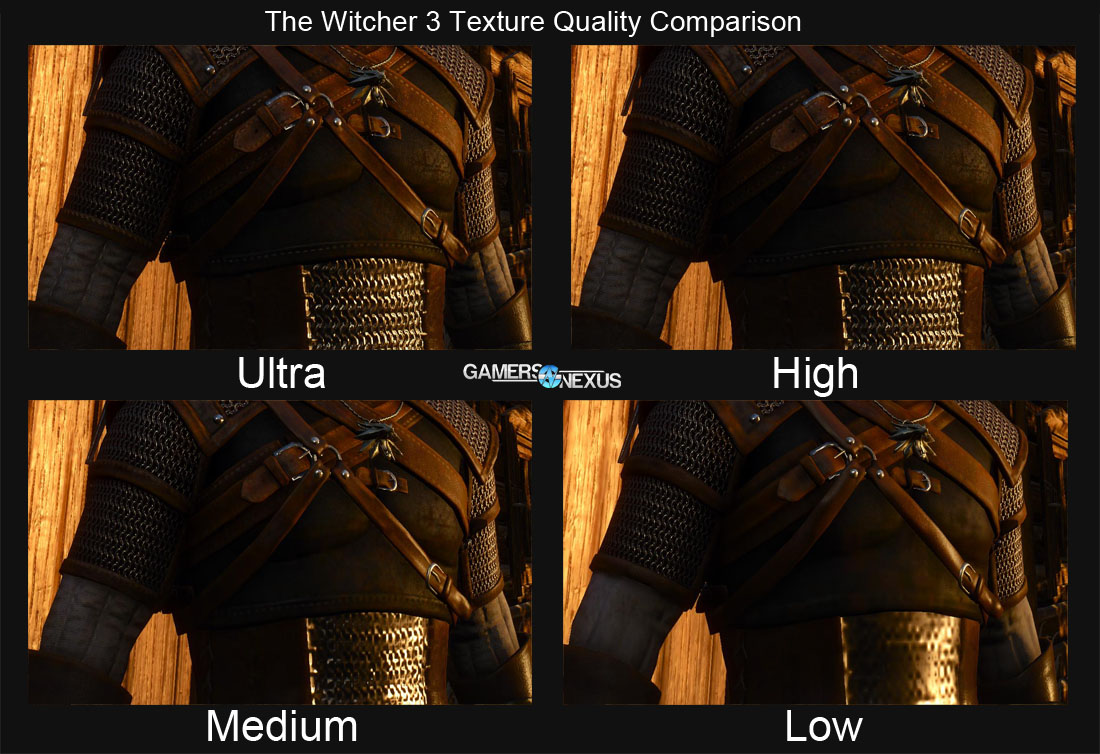

This Witcher 3 ($60) texture quality comparison shows screenshots with settings at Ultra, High, Medium, and Low at 4K resolution. We also measured the maximum VRAM consumption for each setting in the game, hoping to determine whether VRAM-limited devices could benefit from dropping texture quality. Finally, we measured in-game FPS to determine the “cost” of higher quality textures.

Other Witcher 3 content includes:

- The Witcher 3 GPU Benchmark.

- The Witcher 3 Crash Fix Guide.

- Witcher 3 benchmark course – max graphics at 4K.

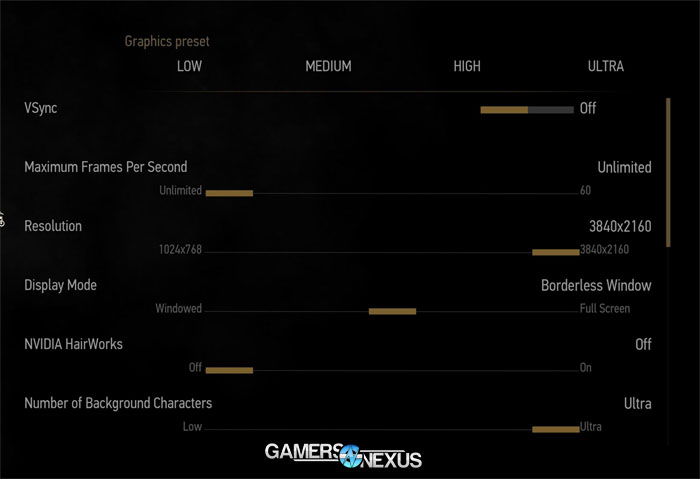

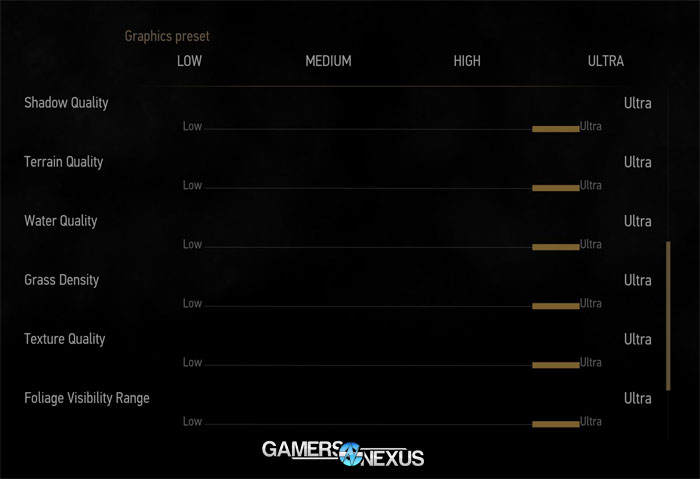

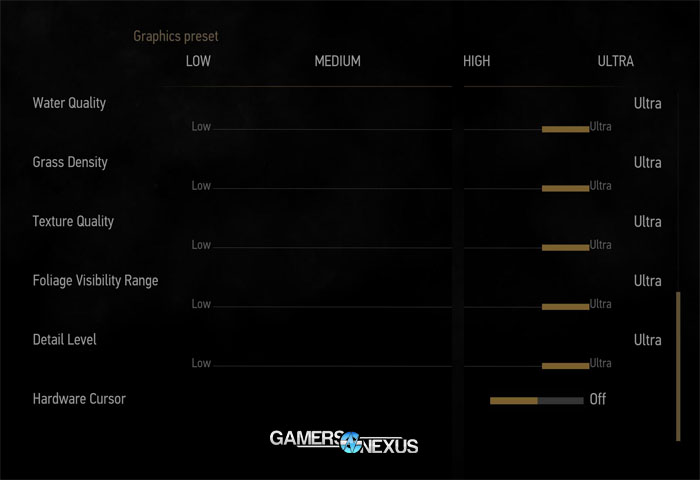

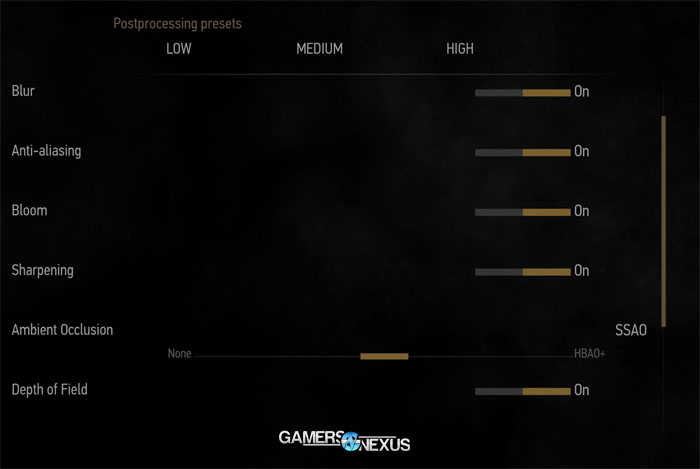

The Witcher 3 Graphics Settings

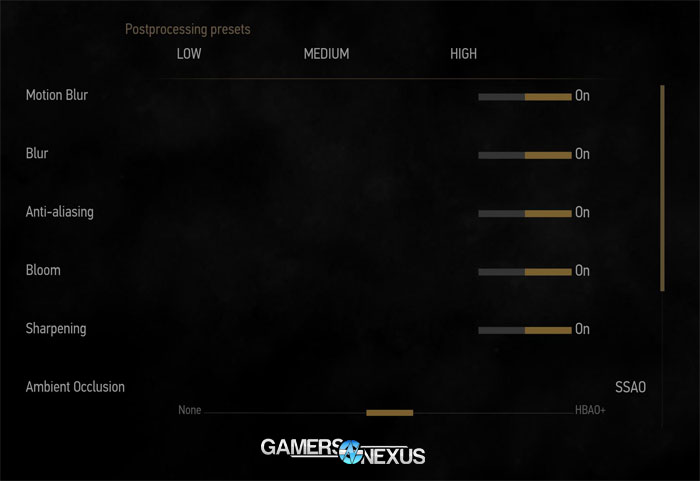

The screenshots in this section are sourced from our Witcher 3 video card benchmark. They show the entire collection of graphics settings within The Witcher 3.

Comparison Methodology

We used similar methodology to our GTA V texture comparison article. The Titan X was put to use for benchmarking.

For this graphics comparison, we took easily replicable steps at each texture resolution setting to ensure accurate results. All graphics settings were configured to their maximum value on the “graphics” tab, with the exception of anti-aliasing (disabled), HairWorks (disabled), and AO (set to SSAO). Screen resolution was set to 4K for the screenshots, but benchmarks were taken at 1080p and 4K (we only report the 1080p results).

We traveled to preselected locations and paused the game. Once there, we stood on designated “landmarks” and took the screenshots.

We face two issues when presenting screenshots as data. First, they're massive, consuming large amounts of server bandwidth and greatly hindering page load time. Second, they're comparative, so we've got to find a way to show three shots at once. To mitigate the impact of each issue, we used a selection marquee of 546x330 pixels, selected a detailed portion of the 4K image, and then pasted it into the documents shown below. There is no scaling involved in this process.

Because file sizes were still an issue, we then scaled the finalized document down to an 1100-pixel-wide image, embedded below. Clicking on each image will bring up its native resolution in a new tab; no scaling occurred in the saving of these images. They're much larger images and will take longer to load.
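As a rough illustration of the crop-then-downscale steps above, here is a minimal Python sketch of the arithmetic involved. It is not the tool or workflow actually used for this article. The function names, the example marquee position, and the 2200x1320 composite size are all hypothetical; only the 546x330 marquee, the 4K source resolution, and the 1100-pixel target width come from the text.

```python
from typing import Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def marquee_box(image_size: Tuple[int, int],
                top_left: Tuple[int, int],
                marquee: Tuple[int, int] = (546, 330)) -> Box:
    """Return a fixed-size crop box, shifted if needed to stay inside the image.

    The crop is 1:1 (no scaling): the box always has exactly the marquee size.
    """
    img_w, img_h = image_size
    box_w, box_h = marquee
    if box_w > img_w or box_h > img_h:
        raise ValueError("marquee is larger than the image")
    # Clamp the requested corner so the whole marquee fits within the image.
    left = min(max(top_left[0], 0), img_w - box_w)
    top = min(max(top_left[1], 0), img_h - box_h)
    return (left, top, left + box_w, top + box_h)


def scaled_size(size: Tuple[int, int], target_width: int = 1100) -> Tuple[int, int]:
    """Scale (width, height) to target_width while preserving the aspect ratio."""
    width, height = size
    return (target_width, round(height * target_width / width))


# Hypothetical marquee position near the bottom-right corner of a 4K frame.
crop = marquee_box((3840, 2160), (3500, 2000))

# Hypothetical composite document size; the real document dimensions are not stated.
embedded = scaled_size((2200, 1320))
```

Using the same crop box on every texture setting's screenshot is what keeps the comparison like-for-like; only the final embedded composite is scaled.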

| GN Test Bench 2015 | Name | Courtesy Of | Cost |
| --- | --- | --- | --- |
| Video Card | GTX Titan X 12GB | NVIDIA | $1000 |
| CPU | Intel i7-4790K CPU | CyberPower | $340 |
| Memory | 32GB 2133MHz HyperX Savage RAM | Kingston Tech. | $300 |
| Motherboard | Gigabyte Z97X Gaming G1 | GamersNexus | $285 |
| Power Supply | NZXT 1200W HALE90 V2 | NZXT | $300 |
| SSD | HyperX Predator PCI-e SSD | Kingston Tech. | TBD |
| Case | Top Deck Tech Station | GamersNexus | $250 |
| CPU Cooler | Be Quiet! Dark Rock 3 | Be Quiet! | ~$60 |

The Witcher 3 Texture Resolution Screenshots & Comparison

Click to enlarge. 2x resolution image here.


The difference between “High” and “Ultra” is mostly inconsequential, but the Medium and Low resolution textures present a sharp decline in visual fidelity. The minimal High/Ultra disparity is backed up by our benchmarks, which show a near-zero difference between the two settings.

The Witcher 3 Texture Quality VRAM Consumption

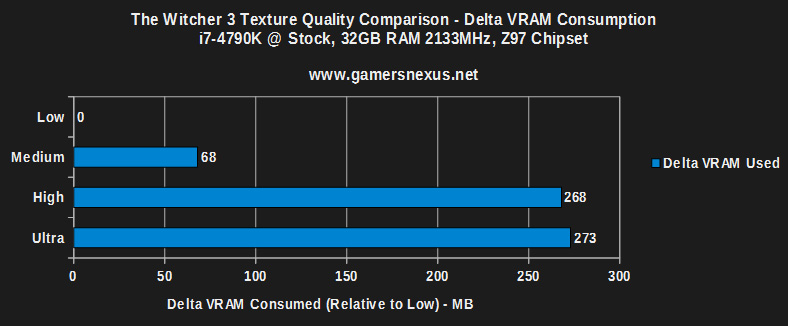

To best demonstrate VRAM consumption, we built a delta chart that uses the “Low” setting as the baseline (0MB consumed), with the other settings presented as deltas over Low. The VRAM usage is in MB. Note that VRAM consumption for texture quality is the same at 1080p and 4K, so the value does not scale with resolution (as other settings, like AA, will).
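The delta-over-baseline calculation behind the chart can be sketched in a few lines of Python. The absolute readings below are placeholders and not measurements from this article; they are picked only so that the resulting deltas land near the approximate figures quoted in this section. The function name and dictionary layout are likewise assumptions for illustration.

```python
def deltas_over_baseline(readings_mb, baseline="low"):
    """Express each setting's VRAM reading as a delta (in MB) over the baseline."""
    base = readings_mb[baseline]
    return {setting: value - base for setting, value in readings_mb.items()}


# Placeholder absolute VRAM readings in MB (illustrative, NOT measured values).
example_readings = {"low": 1500, "medium": 1568, "high": 1768, "ultra": 1772}

deltas = deltas_over_baseline(example_readings)
for setting, delta in deltas.items():
    print(f"{setting:>6}: +{delta}MB over low")
```

Charting deltas instead of absolute readings removes whatever constant overhead the rest of the game and the system contribute, so only the cost of the texture setting itself is visible.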

At the high end, the VRAM consumption difference is unnoticeable to the user. Across five test passes conducted for parity, switching from High to Ultra textures increased VRAM usage by only 3MB to 5MB.

Moving from Low to Medium creates a ~68-73MB increase in VRAM consumption, which is still effectively unnoticeable in terms of memory used. The processing impact is examined separately.

Medium to High has a more noticeable impact: consumption over Low jumps from ~68MB to ~268MB (roughly +200MB). Still, after subtracting for Windows and background processes (system and logging utilities), we never saw The Witcher 3 exceed ~1800MB of VRAM. This value might increase in certain areas of the game, but that is what we measured in the starting village.

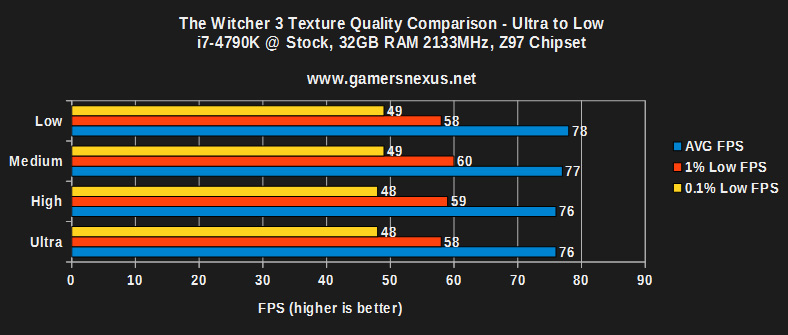

The Witcher 3 Texture Quality FPS Benchmark

Using the same test methodology as our Witcher 3 GPU benchmark (we won't retype all of that here), we tested The Witcher 3's performance at each texture setting. The rest of the game's graphics settings were configured to their maximum values, with resolution locked to 1920x1080 (to reduce unrelated load, though 4K was also tested separately), AA disabled, HairWorks disabled, and AO set to SSAO.

Performance was measured using FRAPS and analyzed with a spreadsheet. We tested for average FPS, 1% low FPS, and 0.1% low FPS (effectively the lowest 1% and 0.1% framerates, rather than using outlier minimum values).
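For readers who want to reproduce these three metrics from their own logs, the following Python sketch shows one common interpretation: average FPS over the whole run, and the mean framerate of the slowest 1% and 0.1% of frames. The exact spreadsheet formulas used for this article are not given here, so treat the averaging method, the function name, and the input format (a list of per-frame times in milliseconds) as assumptions.

```python
def fps_summary(frametimes_ms):
    """Summarize per-frame times (ms) as average, 1% low, and 0.1% low FPS."""
    if not frametimes_ms:
        raise ValueError("need at least one frame")

    # Average FPS: total frames divided by total elapsed seconds.
    total_seconds = sum(frametimes_ms) / 1000.0
    average_fps = len(frametimes_ms) / total_seconds

    # Instantaneous FPS of every frame, slowest frames first.
    fps_sorted = sorted(1000.0 / ft for ft in frametimes_ms)

    def low(divisor):
        # Mean FPS of the slowest 1/divisor of frames (always at least one frame).
        count = max(1, len(fps_sorted) // divisor)
        worst = fps_sorted[:count]
        return sum(worst) / len(worst)

    return {
        "average": average_fps,
        "1% low": low(100),
        "0.1% low": low(1000),
    }


# Synthetic run: 990 frames at 10 ms and 10 slow frames at 20 ms.
summary = fps_summary([10.0] * 990 + [20.0] * 10)
```

Averaging the slowest slice of frames, rather than reporting the single worst frame, keeps one-off hitches from dominating the result while still exposing sustained stutter.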

FPS impact is marginal given the other settings. We'd suggest running Ultra or High, if you've got cycles to spare.

Conclusion: Texture Quality Impact on FPS is Unnoticeable

VRAM consumption isn't anything close to what we saw with GTA V, which exhibited ~800MB increases for higher texture qualities. At less than 300MB consumed for Ultra (relative to Low), it's easy to run the game with the highest texture quality settings unless VRAM is tight. Our FPS benchmarks required additional passes to ensure parity of data given the tiny difference we were looking at, which further supports using higher quality textures unless you are severely limited.

Visually, Ultra and High are fairly similar and tough to tell apart, but Medium and Low have a profound impact on graphics fidelity.

- Steve “Lelldorianx” Burke.